Data Integration Best Practices

Discover top data integration best practices to build a secure, scalable data ecosystem. Learn 7 strategies to optimise your data management today.

Beyond ETL: Unifying Your Data for Strategic Advantage

In today’s economy, organisations are inundated with data from countless sources: CRMs, ERPs, marketing platforms, and IoT devices. Yet, possessing more data does not automatically translate to better insights. The most common challenge is fragmentation, where valuable information remains locked away in disconnected silos. This leads directly to operational inefficiencies, inaccurate reporting, and significant missed opportunities. Overcoming this hurdle requires moving beyond basic ETL scripts and adopting a far more strategic approach to your data architecture.

This article delves into the essential data integration best practices that separate high-performing organisations from the rest. We will explore seven critical pillars that form the foundation of a modern, resilient, and scalable data ecosystem, covering everything from unified architecture and quality management to security and governance.

Moving beyond temporary fixes, these practices will guide you in building a unified data framework that drives intelligent automation, empowers data-driven decision-making, and creates a significant competitive advantage. Mastering these concepts is no longer optional; it is fundamental to achieving scalable growth and operational excellence for your business in 2025 and beyond.

1. Establish a Unified Data Architecture

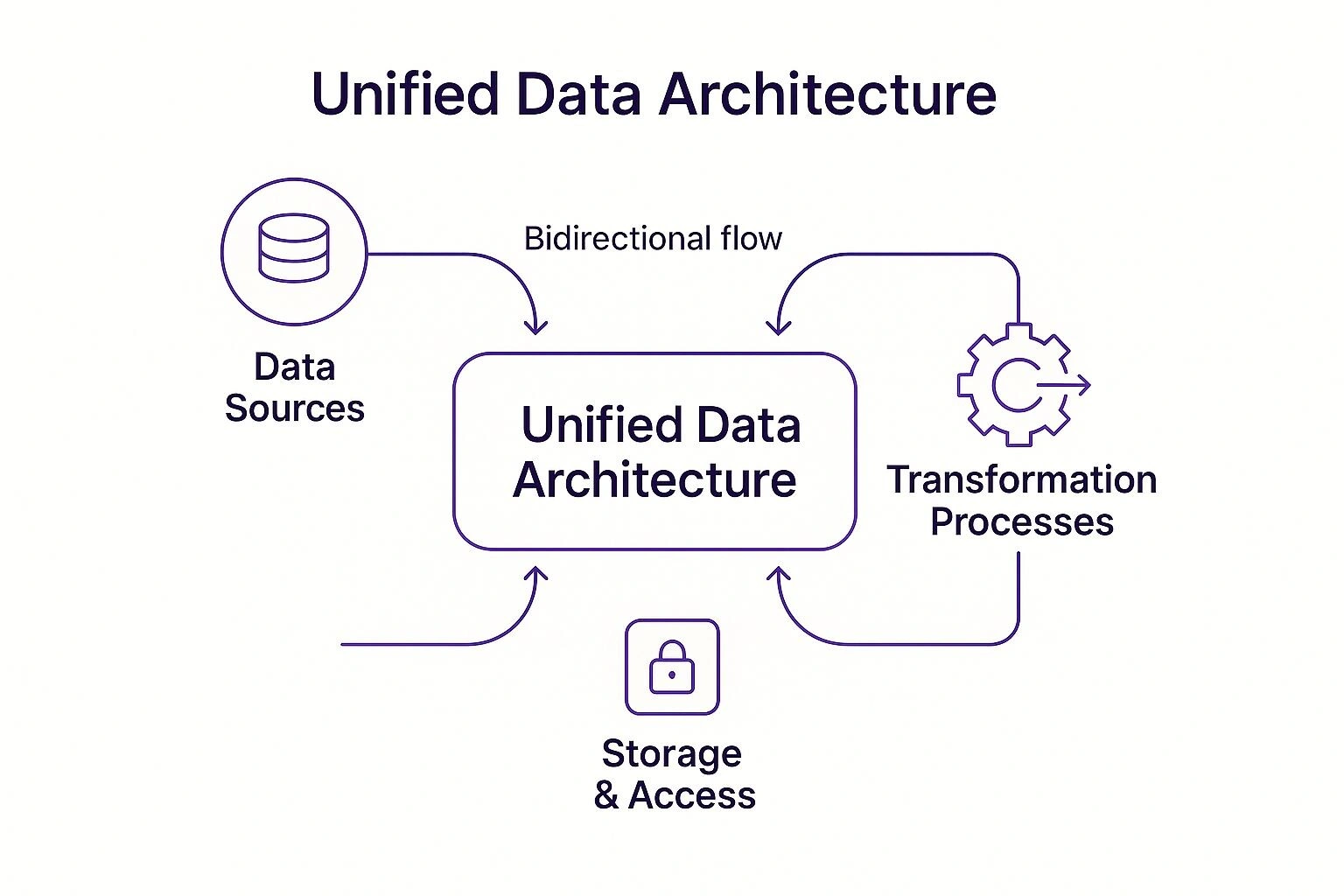

A foundational step in any mature data strategy is establishing a unified data architecture. This is more than just a technical diagram; it’s a comprehensive blueprint that dictates how data flows between systems, where it is stored, and how your organisation accesses it. Without this blueprint, data integration efforts often become fragmented, leading to data silos, inconsistencies, and scalability issues. Implementing this as one of your core data integration best practices ensures every piece of data has a clear purpose and path.

This architecture maps out all data sources, transformation processes, storage solutions, and consumption patterns. It provides a single source of truth that aligns technical implementation with business objectives. For example, Netflix’s architecture supports real-time recommendations for over 200 million users, while Uber’s microservices architecture seamlessly integrates rider, driver, and operational data streams.

At its core, a unified architecture orchestrates the continuous, bidirectional flow between data sources, transformation logic, and storage and access layers.

Implementation Strategy

Successfully creating a unified data architecture requires a structured, phased approach rather than a “big bang” overhaul.

- Audit and Map: Begin by conducting a thorough audit of your current data landscape. Map all existing data sources, from CRMs and ERPs to marketing platforms and financial software. When establishing a unified data architecture, consider how various systems will connect. Exploring available integrations can reveal pre-built connectors that simplify this complex process.

- Involve Stakeholders: Engage with department heads and end-users early. Their input is crucial for understanding data needs and ensuring the final architecture is practical and adds business value.

- Design for Scalability: Don’t just build for today’s needs. A robust architecture anticipates future data sources, increased volume, and new analytical requirements, ensuring long-term viability.

- Document Everything: Meticulous documentation of data models, flows, and standards is non-negotiable. It serves as the guide for all future development and integration projects.
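To make the audit step more concrete, the following Python sketch models a source inventory and the flows between systems, then reports common gaps: flows that touch systems missing from the inventory, sources without an accountable owner, undocumented flows, and systems no flow touches. The `DataSource` and `DataFlow` structures and the example system names are hypothetical; in practice this inventory usually lives in a data catalogue rather than in code.

```python
from dataclasses import dataclass


@dataclass
class DataSource:
    name: str
    system_type: str  # e.g. "CRM", "ERP", "Data warehouse"
    owner: str        # accountable business owner; empty if unassigned


@dataclass
class DataFlow:
    source: str
    target: str
    transformation: str
    documented: bool = False


def audit(sources: list[DataSource], flows: list[DataFlow]) -> dict[str, list[str]]:
    """Report gaps in a (hypothetical) data inventory."""
    known = {s.name for s in sources}
    referenced = {f.source for f in flows} | {f.target for f in flows}
    return {
        # Flows that read from or write to a system missing from the inventory
        "unknown_systems": sorted(referenced - known),
        # Sources nobody is accountable for
        "unowned_sources": sorted(s.name for s in sources if not s.owner),
        # Flows whose transformation logic has not been documented
        "undocumented_flows": sorted(f"{f.source}->{f.target}" for f in flows if not f.documented),
        # Inventoried systems that no mapped flow touches (possible silos)
        "unconnected_systems": sorted(known - referenced),
    }


sources = [
    DataSource("crm", "CRM", "Sales"),
    DataSource("erp", "ERP", ""),
    DataSource("warehouse", "Data warehouse", "Data team"),
    DataSource("legacy_ftp", "File drop", "Operations"),
]
flows = [
    DataFlow("crm", "warehouse", "deduplicate contacts", documented=True),
    DataFlow("erp", "warehouse", "normalise currencies"),
    DataFlow("marketing", "warehouse", "campaign roll-up", documented=True),
]
report = audit(sources, flows)
# report["unknown_systems"] == ["marketing"]; report["unconnected_systems"] == ["legacy_ftp"]
```

Running a check like this on a schedule helps keep the architecture documentation accurate as new sources are connected.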

2. Implement Data Quality Management

A systematic approach to ensuring data accuracy, completeness, consistency and reliability is critical to any robust data integration framework. Implementing Data Quality Management means defining clear rules for what constitutes “good” data, continuously monitoring records as they move through pipelines and remediating issues before they propagate. Without these safeguards, organisations risk basing strategic decisions on flawed insights and eroding stakeholder confidence.

Leading enterprises showcase the impact of strong data quality governance. JPMorgan Chase processes trillions of dollars in transactions using a rigorous data quality framework that flags anomalies in real time. Walmart leverages supplier data quality management to guarantee accurate product information across thousands of suppliers. Healthcare providers enforce quality checks on patient records to prevent clinical errors. Each example highlights why Data Quality Management holds its place among the top data integration best practices.

Implementation Strategy

- Define Metrics and KPIs: Establish quantitative measures for accuracy, completeness and timeliness. Track error rates, duplicate records and field-level consistency against agreed thresholds.

- Validation at Multiple Points: Embed quality checks at ingestion, transformation and loading stages. Catch schema mismatches or null values early in the pipeline.

- Establish Data Stewardship: Assign clear roles for data owners and stewards. Empower teams to resolve issues and authorise changes in master data domains.

- Use Automated Monitoring: Deploy tools that generate alerts on quality drift. When selecting platforms, confirm that they integrate with your source systems.

- Create Feedback Loops: Implement processes for end users to report data issues and request corrections. Regularly review incident logs to refine quality rules.

- Continuous Improvement: Conduct quarterly data quality audits and revisit KPIs. Use insights to update validation logic and training materials.
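A minimal sketch of rule-based validation, assuming records arrive as Python dictionaries. The same `validate` function could be called at ingestion, after transformation and before loading; it separates passing records from rejected ones (with reasons) and reports an error rate and duplicate count that can be tracked as KPIs. The rule names, the `customer_id` key and the 5% threshold are illustrative assumptions, not recommended values.

```python
from dataclasses import dataclass
from typing import Any, Callable

Record = dict[str, Any]


@dataclass
class Rule:
    name: str
    check: Callable[[Record], bool]  # returns True when the record passes


# max_error_rate is an illustrative threshold; agree real values with data owners
def validate(records: list[Record], rules: list[Rule], key: str,
             max_error_rate: float = 0.05) -> dict[str, Any]:
    """Apply rules at one pipeline stage and summarise basic quality metrics."""
    valid: list[Record] = []
    rejected: list[dict[str, Any]] = []
    seen: set[Any] = set()
    duplicates = 0
    for rec in records:
        failures = [rule.name for rule in rules if not rule.check(rec)]
        if rec.get(key) in seen:
            failures.append("duplicate_key")
            duplicates += 1
        seen.add(rec.get(key))
        if failures:
            # Keep the reasons so stewards can triage and fix the source
            rejected.append({"record": rec, "failures": failures})
        else:
            valid.append(rec)
    error_rate = len(rejected) / len(records) if records else 0.0
    return {
        "valid": valid,
        "rejected": rejected,
        "error_rate": error_rate,
        "duplicate_count": duplicates,
        "within_threshold": error_rate <= max_error_rate,
    }


rules = [
    Rule("customer_id_present", lambda r: r.get("customer_id") is not None),
    Rule("email_has_at_sign", lambda r: "@" in (r.get("email") or "")),
    Rule("amount_non_negative",
         lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0),
]
records = [
    {"customer_id": 1, "email": "a@example.com", "amount": 10.0},
    {"customer_id": 2, "email": "invalid", "amount": 5},
    {"customer_id": 1, "email": "a@example.com", "amount": 10.0},
    {"customer_id": None, "email": "c@example.com", "amount": -1},
]
result = validate(records, rules, key="customer_id")
```

The rejected records, together with their failure reasons, are what data stewards would work through in the feedback loops and quality audits listed above.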

Implement Data Quality Management when you integrate complex or high-volume data sources, operate in regulated industries or rely on real-time analytics. Doing so minimises downstream rework, boosts stakeholder trust and streamlines compliance.

3. Adopt Real-Time and Batch Processing Strategies

A critical aspect of modern data management involves choosing the right data processing cadence. Organisations must strategically decide between real-time (streaming) and batch processing, or more commonly, a hybrid approach that leverages the strengths of both. Adopting the correct model based on business requirements, latency tolerance, and cost is one of the most impactful data integration best practices for ensuring efficiency and responsiveness.

This decision directly influences your ability to act on insights. For example, financial institutions depend on real-time processing to power fraud detection systems, analysing transactions in milliseconds. Similarly, e-commerce platforms use streaming data for live inventory management. In contrast, batch processing is ideal for less time-sensitive tasks like generating end-of-day financial reports or running complex analytics on large historical datasets, where processing data in large chunks is more cost-effective.

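Understanding the architectural nuances between these two models is essential for effective implementation. Two common frameworks for combining batch and real-time pipelines are the Lambda architecture, which runs a batch layer and a speed (streaming) layer side by side and merges their outputs in a serving layer, and the Kappa architecture, which processes everything as a stream and rebuilds historical views by replaying the event log.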
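As a rough illustration of how a Lambda-style design combines the two cadences, here is a toy Python sketch: a batch layer recomputes an aggregate from the full history, a speed layer keeps a running count of events that arrived since the last batch run, and a serving layer merges both at query time. The product-count example and all names are hypothetical; real implementations would typically use an engine such as Spark for the batch layer and a stream processor for the speed layer.

```python
from collections import Counter


def batch_view(history: list[dict]) -> Counter:
    """Batch layer: periodically recompute the aggregate (orders per product) from full history."""
    return Counter(event["product"] for event in history)


class SpeedLayer:
    """Speed layer: incrementally counts events that arrived since the last batch run."""

    def __init__(self) -> None:
        self.view: Counter = Counter()

    def on_event(self, event: dict) -> None:
        self.view[event["product"]] += 1

    def reset(self) -> None:
        # Once the next batch run has absorbed these events, the real-time delta is discarded
        self.view.clear()


def serve(batch: Counter, speed: SpeedLayer, product: str) -> int:
    """Serving layer: answer queries by merging the batch view with the real-time delta."""
    return batch[product] + speed.view[product]


history = [{"product": "A"}, {"product": "A"}, {"product": "B"}]
batch = batch_view(history)
speed = SpeedLayer()
speed.on_event({"product": "A"})  # arrives after the batch run
orders_for_a = serve(batch, speed, "A")  # 2 from batch + 1 from the speed layer
```

A Kappa-style design would drop the separate batch path and rebuild views by replaying the event log through the same streaming code, which avoids maintaining two implementations of the same logic.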

Implementation Strategy

Implementing a balanced processing strategy requires a clear-eyed assessment of your operational and analytical needs.

- Assess Business Requirements: Begin by categorising your data use cases. Determine the required data freshness for each. Does a business process need data in seconds (real-time), minutes, or hours (batch)? This analysis will guide your architectural choices.

- Use Appropriate Tooling: Select tools designed for the specific job. Use event-streaming and stream-processing platforms such as Apache Kafka and Apache Flink for real-time pipelines, and leverage engines like Apache Spark or traditional data warehouses for efficient, large-scale batch jobs.

- Implement Robust Error Handling: Data pipelines can fail. Design your systems with strong error handling and recovery mechanisms, including dead-letter queues for failed messages in streaming and checkpointing for resumable batch jobs.

- Monitor Performance Continuously: Track key metrics like data latency, throughput, and processing costs for both real-time and batch workflows. Continuous monitoring allows you to identify bottlenecks and optimise for performance and cost-effectiveness.
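The two error-handling patterns mentioned above can be sketched in plain Python. `process_stream` routes messages that cannot be parsed or handled to a dead-letter list (standing in for a real dead-letter queue or topic), and `run_batch` records a checkpoint after each chunk so a rerun resumes from the last committed position. The function names, the JSON message format and the file-based checkpoint are assumptions for illustration; stream and batch frameworks typically provide built-in equivalents (for example, Flink's checkpointing).

```python
import json
import os
from typing import Callable, Iterable


def process_stream(messages: Iterable[str], handler: Callable[[dict], None],
                   dead_letters: list[dict]) -> int:
    """Streaming path: one bad message goes to a dead-letter list instead of stopping the pipeline."""
    processed = 0
    for raw in messages:
        try:
            handler(json.loads(raw))  # json.JSONDecodeError is a subclass of ValueError
            processed += 1
        except (ValueError, KeyError) as exc:
            # Keep the original payload and the reason so it can be inspected and replayed
            dead_letters.append({"payload": raw, "error": repr(exc)})
    return processed


def run_batch(rows: list[dict], handler: Callable[[dict], None],
              checkpoint_path: str, chunk_size: int = 100) -> None:
    """Batch path: commit progress after each chunk so a failed run resumes where it stopped."""
    start = 0
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path) as f:
            start = int(f.read().strip() or 0)
    for offset in range(start, len(rows), chunk_size):
        for row in rows[offset:offset + chunk_size]:
            handler(row)  # an exception here leaves the last checkpoint untouched
        # Write to a temporary file, then atomically replace it, so a crash never leaves a half-written checkpoint
        tmp_path = checkpoint_path + ".tmp"
        with open(tmp_path, "w") as f:
            f.write(str(min(offset + chunk_size, len(rows))))
        os.replace(tmp_path, checkpoint_path)
```

Because a rerun restarts at the beginning of the failed chunk, some rows may be processed twice, so handlers should be idempotent (for example, upserts keyed on a record ID).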

4. Establish Comprehensive Data Governance

Data governance provides the essential framework of policies, roles, and standards for managing an organisation’s data assets. This is not a restrictive set of rules but a strategic enabler that ensures data is accurate, consistent, secure, and handled ethically throughout its lifecycle. Adopting this as one of your core data integration best practices is crucial, as it builds the guardrails that allow for safe and compliant data sharing and consolidation across systems.

This framework defines everything from data ownership and quality benchmarks to access controls and regulatory adherence. For instance, Australian banks implement robust governance to meet prudential standards set by APRA, while healthcare organisations must align with the Australian Privacy Principles (APPs) to protect sensitive patient health information. In the public sector, government agencies rely on strict data classification systems to manage information security and accountability.

Implementation Strategy

Successfully embedding data governance requires a deliberate, business-first approach that focuses on value and risk mitigation rather than a rigid, top-down mandate.

- Start with Critical Data Assets: Begin your governance initiative by focusing on the most valuable and highest-risk data domains, such as customer financial data or personally identifiable information (PII). This targeted approach demonstrates value quickly and addresses major compliance gaps first.

- Involve Legal and Compliance Early: Engage your legal and compliance teams from day one. Their expertise is vital for navigating the complex Australian regulatory landscape, including the Privacy Act 1988, ensuring your integration architecture is compliant by design.

- Create Clear Roles and Responsibilities: Formally assign roles like Data Owners (accountable for a data asset), Data Stewards (responsible for its day-to-day management), and Data Custodians (who manage the technical environment). This clarity eliminates ambiguity and fosters accountability.

- Implement Automated Governance Tools: Leverage modern data governance platforms where possible. Tools can automate data cataloguing, lineage tracking, and policy enforcement, making the framework scalable and less manually intensive for your teams.

- Promote a Data-Aware Culture: Governance is a shared responsibility. Conduct regular training and awareness programs to educate all employees on the importance of data quality, security, and privacy, embedding these principles into your organisation’s culture.
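Governance platforms automate policy enforcement, but the underlying idea can be shown simply. The Python sketch below assumes a four-level classification scheme and a clearance-based read rule (both hypothetical): each asset carries a named Data Owner and Data Steward, access is checked against the asset's classification, and every decision is written to an audit log for later compliance review.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Classification(IntEnum):
    # Illustrative levels only; align real labels with your own policy or regulator
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3  # e.g. PII or customer financial data


@dataclass(frozen=True)
class DataAsset:
    name: str
    classification: Classification
    owner: str    # Data Owner: accountable for the asset
    steward: str  # Data Steward: manages it day to day


@dataclass(frozen=True)
class User:
    name: str
    clearance: Classification


@dataclass
class AccessPolicy:
    audit_log: list[tuple[str, str, bool]] = field(default_factory=list)

    def can_read(self, user: User, asset: DataAsset) -> bool:
        """Grant read access only if the user's clearance covers the asset's classification; log every decision."""
        allowed = user.clearance >= asset.classification
        self.audit_log.append((user.name, asset.name, allowed))
        return allowed


payments = DataAsset("customer_payments", Classification.RESTRICTED,
                     owner="Chief Financial Officer", steward="Finance data steward")
policy = AccessPolicy()
analyst_allowed = policy.can_read(User("marketing_analyst", Classification.INTERNAL), payments)
officer_allowed = policy.can_read(User("privacy_officer", Classification.RESTRICTED), payments)
```

Real deployments would usually layer this with role-based or attribute-based access control rather than relying on a single clearance level.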

5. Design for Scalability and Performance

A forward-thinking data integration strategy must anticipate growth. Designing for scalability and performance means building a data ecosystem that can gracefully handle increasing data volumes, more complex queries, and a growing number of users without faltering. Without this foresight, an integration solution that works today can quickly become a bottleneck tomorrow, leading to slow response times, system failures, and missed business opportunities. Prioritising this from the outset is one of the most critical data integration best practices for long-term success.

This principle is about creating an elastic architecture that adapts to demand. For instance, Amazon’s infrastructure is famously designed to scale massively to handle the extreme traffic surges of Black Friday and Prime Day. Similarly, Salesforce’s multi-tenant architecture allows it to deliver consistent performance to millions of users across thousands of organisations, demonstrating how scalable design can support a vast and varied customer base from a unified platform.

Implementation Strategy

Building a scalable and performant integration framework requires a proactive and strategic approach, focusing on architecture and continuous optimisation.

- Design with Future Growth in Mind: Avoid architectures that are rigid or tightly coupled. Opt for microservices-based or event-driven patterns that allow individual components to be scaled independently as data sources or processing needs expand.

- Use Distributed Processing Frameworks: For large-scale data transformation, leverage frameworks like Apache Spark or cloud services such as AWS Glue. These tools distribute the workload across multiple machines, dramatically speeding up processing time for terabytes or even petabytes of data.

- Implement Smart Caching: Introduce a caching layer for frequently accessed data. This reduces the load on your primary databases and source systems, significantly lowering latency for end-users and analytical queries.

- Monitor Performance Continuously: Utilise monitoring tools to track key metrics like data throughput, latency, and error rates. Establishing performance baselines allows you to proactively identify bottlenecks and address degradation before it impacts the business.

- Plan for Peak Load Scenarios: Do not just design for average usage. Conduct regular stress tests and capacity planning based on anticipated peak loads, such as end-of-quarter reporting or seasonal marketing campaigns, to ensure your system remains resilient.
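To illustrate the caching point, here is a minimal read-through cache with a time-to-live (TTL), written in Python. Values are served from memory until they expire, at which point the (hypothetical) `loader` function queries the source system again. The class name, the 60-second TTL used in testing and the injectable clock are assumptions for illustration.

```python
import time
from collections.abc import Callable, Hashable
from typing import Any


class TTLCache:
    """Read-through cache: serve recent values from memory and reload from the source once they expire."""

    def __init__(self, loader: Callable[[Hashable], Any], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._loader = loader    # function that queries the primary database or source system
        self._ttl = ttl_seconds
        self._clock = clock      # injectable so expiry can be tested without waiting
        self._entries: dict[Hashable, tuple[float, Any]] = {}
        self.hits = 0
        self.misses = 0

    def get(self, key: Hashable) -> Any:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self._ttl:
            self.hits += 1
            return entry[1]
        self.misses += 1
        value = self._loader(key)
        self._entries[key] = (now, value)
        return value
```

Tracking `hits` and `misses` gives a simple cache hit ratio to monitor. This sketch has no size limit and no invalidation when the source changes; production caches (for example Redis with key expiry) add eviction and invalidation strategies.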

6. Implement Robust Error Handling and Monitoring

Data integration pipelines are not infallible; they are complex systems where failures are a matter of when, not if. Implementing robust error handling and monitoring moves an organisation from a reactive “break-fix” model to a proactive, resilient one. It involves creating systems to detect, log, manage, and recover from errors. Adopting this as a key part of your data integration best practices ensures data integrity, minimises downtime, and builds trust in your data.

Leading technology companies demonstrate this approach’s power. For example, Netflix popularised Chaos Engineering, a method of deliberately injecting failures into systems to test their resilience before real outages occur. Similarly, financial institutions rely on transaction monitoring with automatic rollback capabilities to guarantee data consistency during payment processing, ensuring partial failures do not corrupt the entire system.

Implementation Strategy

A successful strategy requires a multi-faceted approach combining technology, process, and people.

- Monitor at Multiple Layers: Implement observability across the entire data stack. This includes source system availability, network latency, transformation job performance, and data quality metrics. A holistic view prevents blind spots where errors can hide.

- Establish Clear Escalation Procedures: Document who is notified for specific errors, through which channels, and what the expected response is. Differentiate between critical alerts requiring immediate action and warnings for review during business hours.

- Automate Recovery Where Possible: For transient issues like network timeouts, build automated retry logic. For data quality issues, quarantine bad records for manual review instead of halting the entire pipeline.

- Set Meaningful Alerting Thresholds: Avoid “alert fatigue” by tuning thresholds to actual business impact. A 5% failure rate on a data load might warrant only a warning for an internal dashboard but a critical alert for a customer-facing billing system.

- Test Error Scenarios Regularly: Don’t wait for a real crisis. Regularly conduct “fire drills” by simulating common failure modes like API outages or corrupted files to validate your monitoring, alerting, and recovery playbooks.
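The recovery and alerting points above can be sketched in Python. `with_retry` retries operations that raise a hypothetical `TransientError` using exponential backoff with jitter, `split_for_quarantine` sets invalid records aside rather than failing the whole load, and `alert_severity` applies per-system thresholds mirroring the dashboard-versus-billing example. All names and threshold values are illustrative assumptions.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")

# Hypothetical (warning, critical) failure-rate thresholds, tuned to business impact
THRESHOLDS = {
    "internal_dashboard": (0.05, 0.20),
    "customer_billing": (0.001, 0.01),
}


class TransientError(Exception):
    """Marker for retryable failures such as network timeouts (an assumption of this sketch)."""


def with_retry(operation: Callable[[], T], attempts: int = 5, base_delay: float = 0.5,
               sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry transient failures with exponential backoff plus jitter; re-raise after the final attempt."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return operation()
        except TransientError:
            if attempt == attempts - 1:
                raise
            delay = base_delay * 2 ** attempt  # 0.5s, 1s, 2s, ...
            sleep(delay + random.uniform(0, delay / 2))  # jitter spreads out simultaneous retries
    raise RuntimeError("unreachable")


def split_for_quarantine(records: list, is_valid: Callable[[object], bool]) -> tuple[list, list]:
    """Load good records and set bad ones aside for manual review instead of halting the pipeline."""
    good, quarantined = [], []
    for record in records:
        (good if is_valid(record) else quarantined).append(record)
    return good, quarantined


def alert_severity(failure_rate: float, system: str) -> str:
    """Map a failure rate to an alert level using the thresholds for that system."""
    warning_at, critical_at = THRESHOLDS[system]
    if failure_rate >= critical_at:
        return "critical"
    if failure_rate >= warning_at:
        return "warning"
    return "ok"
```

Only errors known to be transient are retried; validation failures go to quarantine, since retrying them would fail the same way every time.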

7. Ensure Data Security and Privacy

In an interconnected data ecosystem, security cannot be an afterthought. Integrating security and privacy measures directly into your data pipelines is essential for protecting sensitive information as it moves between systems, where it is most vulnerable. This involves a proactive approach to safeguarding data from unauthorised access, breaches, and misuse. Adhering to this as one of your core data integration best practices is non-negotiable for maintaining customer trust and ensuring regulatory compliance.

This practice goes beyond simple firewalls. It encompasses a multi-layered defence strategy including encryption for data in transit and at rest, robust access controls, and data masking or tokenisation for sensitive fields. For example, Australian financial institutions use tokenisation to protect payment card information during transaction processing, while healthcare organisations implement strict controls to manage patient data in line with the Australian Privacy Principles (APPs). Similarly, global companies like Apple use advanced techniques such as differential privacy to analyse user behaviour without compromising individual identities.
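As a rough illustration of vault-style tokenisation and masking, the Python sketch below swaps a card number for a random token, keeps the mapping in a lookup table, and masks the number for display. The in-memory `TokenVault` class and the record fields are hypothetical; production tokenisation relies on a hardened, separately secured vault or a payment provider's tokenisation service, with strict controls on who may detokenise.

```python
import secrets


def digits_only(pan: str) -> str:
    """Normalise a card number by dropping spaces and dashes."""
    return "".join(ch for ch in pan if ch.isdigit())


class TokenVault:
    """Hypothetical in-memory vault; real vaults are separately secured, audited services."""

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}
        self._value_to_token: dict[str, str] = {}

    def tokenise(self, value: str) -> str:
        # Reuse an existing token so the same card maps to one token across systems
        if value in self._value_to_token:
            return self._value_to_token[value]
        token = "tok_" + secrets.token_hex(16)  # random, so it reveals nothing about the card
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenise(self, token: str) -> str:
        # In practice only a small set of authorised services may call this
        return self._token_to_value[token]


def mask_pan(pan: str) -> str:
    """Show only the last four digits, e.g. for receipts or support screens."""
    digits = digits_only(pan)
    if len(digits) <= 4:
        return "*" * len(digits)
    return "*" * (len(digits) - 4) + digits[-4:]


vault = TokenVault()
raw_record = {"customer": "C-1001", "card_number": "4111 1111 1111 1111"}
safe_record = {
    "customer": raw_record["customer"],
    "card_token": vault.tokenise(digits_only(raw_record["card_number"])),
    "card_display": mask_pan(raw_record["card_number"]),
}
```

Because the same card always maps to the same token, downstream analytics can still join and count on `card_token` without ever handling the card number itself.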

Implementation Strategy

Successfully embedding security and privacy into your data integration framework requires a systematic and ongoing commitment.

- Implement ‘Security by Design’: Treat security as a foundational requirement from the very beginning of any integration project. This means security teams work alongside developers to architect secure data flows, APIs, and storage solutions, rather than attempting to patch vulnerabilities after development is complete.

- Encrypt Data in Transit and at Rest: Encrypt all sensitive data, particularly Personally Identifiable Information (PII). Use protocols like TLS to protect data moving across networks, and encrypt data stored in databases, data lakes, or cloud storage.

- Conduct Regular Audits: Proactively identify and remediate vulnerabilities by performing regular security audits, penetration tests, and risk assessments of your integration infrastructure. This helps ensure your defences remain effective against evolving threats.

- Train Staff on Security Protocols: Your employees are a critical part of your security posture. Implement mandatory and continuous training programs on data handling policies, phishing awareness, and incident response procedures to minimise the risk of human error.

- Stay Updated with Regulatory Requirements: The regulatory landscape is constantly changing. Assign responsibility for monitoring updates to legislation like the Australian Privacy Act and GDPR to ensure your integration processes remain fully compliant and avoid significant penalties.
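For encryption in transit, a minimal Python sketch using only the standard `ssl` module: it builds a client context that verifies server certificates and hostnames and refuses protocol versions older than TLS 1.2. The function name, the optional CA bundle parameter and the example URL are assumptions; encryption at rest is normally configured in the database or storage service itself.

```python
import ssl
from typing import Optional


def make_client_tls_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Client-side TLS settings for pipeline connections: verify certificates and hostnames, refuse old protocols."""
    # create_default_context enables certificate verification (CERT_REQUIRED) and hostname checks
    context = ssl.create_default_context(cafile=ca_file)
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = make_client_tls_context()
# Hypothetical usage: urllib.request.urlopen("https://api.example.com/export", context=context)
```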

7 Key Practices Comparison

| Practice | Implementation Complexity | Resource Requirements | Expected Outcomes | Ideal Use Cases | Key Advantages |
|---|---|---|---|---|---|
| Establish a Unified Data Architecture | High initially; best tackled in phases | Significant upfront investment | Consistent, scalable data flow across the organisation | Large enterprises needing integrated data | Reduces silos; improves scalability and compliance |
| Implement Data Quality Management | Moderate to high; ongoing effort | Continuous resources for monitoring | Accurate, reliable, and consistent data | Organisations prioritising data trust | Improves decision accuracy; reduces errors |
| Adopt Real-Time and Batch Processing | High, due to hybrid models | Specialised tools and skilled staff | Timely decisions with optimised resource use | Businesses needing both low-latency and batch workloads | Enables timely decisions; reduces latency |
| Establish Comprehensive Data Governance | High, due to policies and organisational buy-in | Significant organisational commitment | Secure, compliant, well-managed data | Regulated industries and data-sensitive organisations | Ensures compliance, security, and data trust |
| Design for Scalability and Performance | High complexity and initial cost | Specialised expertise and monitoring | Efficient handling of growing data volumes | Systems expecting rapid growth | Maintains performance; supports business growth |
| Implement Robust Error Handling & Monitoring | Moderate to high | Additional infrastructure and expertise | Reduced downtime, faster issue resolution | Mission-critical, high-availability systems | Improves reliability and operational visibility |
| Ensure Data Security and Privacy | High; requires specialised expertise | Security tools and continuous training | Protected sensitive data, regulatory compliance | Any system handling sensitive or regulated data | Protects data, builds trust, ensures compliance |

From Practice to Performance: Activating Your Data Strategy

Navigating the seven pillars we have explored, from establishing a unified architecture to ensuring ironclad security, is more than an IT exercise. It represents a fundamental strategic shift in how your organisation values and activates its data. These are not disparate items on a checklist to be addressed in isolation. Instead, they are deeply interconnected components of a single, powerful engine designed to drive performance. True mastery of these data integration best practices is what separates market leaders from the rest.

The real value emerges when these principles work in concert. A robust data governance framework, for instance, is the bedrock upon which data quality management and security protocols are built. Similarly, designing for scalability is meaningless without the robust error handling and monitoring needed to maintain performance as demand grows. Adopting both real-time and batch processing strategies becomes truly transformative only when supported by a unified architecture that can handle both workflows efficiently. This holistic approach ensures you build a resilient, adaptable, and high-performing data ecosystem, not just a series of siloed projects.

Your Actionable Roadmap to Integration Excellence

Translating these concepts from theory into tangible business outcomes requires a deliberate and strategic plan. Rather than being overwhelmed by the scope, focus on a clear, phased approach to build momentum and demonstrate value early.

Here are your immediate next steps:

- Conduct a Comprehensive Audit: Begin by benchmarking your current data landscape against the seven best practices discussed. Analyse your existing architecture, quality controls, and governance policies to identify the most critical gaps and vulnerabilities.

- Prioritise for Impact: You cannot fix everything at once. Identify a single, high-impact business problem that better data integration can solve. This could be automating a manual reporting process or cleansing a critical customer dataset. A quick win will build the business case for broader investment.

- Build a Cross-Functional Team: Effective data integration is a team sport. Assemble a group of stakeholders from IT, operations, marketing, and leadership to ensure the project aligns with business-wide objectives and secures necessary buy-in from the entire organisation.

Unlocking Your Competitive Advantage

Ultimately, the goal of refining your data integration practices is to transform data from a passive asset into an active catalyst for growth. When data flows seamlessly, securely, and reliably across your organisation, you empower your teams to move beyond reactive problem-solving and embrace proactive innovation. This creates a powerful competitive advantage, enabling greater operational agility, deeper customer insights, and a scalable foundation for future growth and AI adoption. The journey requires commitment, but the return is an organisation that is not just data-driven, but data-centric.

Adopting these advanced data integration best practices can be complex, particularly when navigating legacy systems or resource constraints. Osher Digital specialises in implementing tailored business process automation and AI-driven solutions that turn these principles into a tangible competitive advantage. Discover how their vendor-agnostic approach can unlock hidden efficiencies and deliver measurable performance for your organisation at Osher Digital.
