9 Key Data Warehouse Best Practices for 2025

Discover the top 9 data warehouse best practices for optimal performance. Explore expert tips on architecture, governance, security, and ETL optimisation.

In a data-driven economy, a well-optimised data warehouse is no longer a luxury but a critical strategic asset. It is the core engine that powers analytics, fuels business intelligence, and ultimately drives competitive advantage for enterprises of all sizes. However, building and maintaining an effective data warehouse is fraught with common challenges, from spiralling operational costs and sluggish query performance to significant data quality issues that erode user trust and compromise decision-making. Overcoming these obstacles is essential for unlocking the true value of your organisation’s data.

This guide moves beyond generic advice to provide a definitive framework of data warehouse best practices. We will detail nine essential strategies covering architecture, governance, security, and performance optimisation that modern organisations must master. By implementing these proven techniques, you can transform your data infrastructure from a simple repository into a high-performance analytics powerhouse. This ensures every strategic decision is backed by accurate, timely, and reliable data, turning your raw information into measurable business outcomes and a distinct competitive edge. We’ll explore actionable steps and practical insights to elevate your data strategy and maximise your return on investment.

1. Dimensional Modelling

At the core of many successful data warehouse implementations lies dimensional modelling, a design technique popularised by Ralph Kimball. This approach organises data into logical structures that mirror business processes, making it one of the most fundamental data warehouse best practices. It separates numerical data (facts or measures) from descriptive contextual data (dimensions), creating a structure that is both intuitive for business users and highly optimised for query performance.

A dimensional model typically results in a star or snowflake schema. The central fact table contains business metrics like sales_amount or units_sold, while surrounding dimension tables hold attributes describing the context, such as customer_name, product_category, or store_location. This organisation drastically simplifies complex queries, enabling faster, more efficient data retrieval and analysis.

Why It’s a Best Practice

Dimensional modelling directly addresses the primary goal of a data warehouse: delivering fast, understandable insights to the business. Its structure is purpose-built for high-performance querying and reporting, unlike normalised models (such as 3NF), which are optimised for transactional efficiency (OLTP) but are often too complex for analytical queries (OLAP). For instance, retail giants like Walmart utilise dimensional models to analyse sales data across countless products, stores, and time periods with remarkable speed.

Actionable Implementation Tips

To effectively implement dimensional modelling, focus on these key steps:

- Define the Business Process: Start by identifying a core business process to model, such as order fulfilment or claims processing. This ensures the model directly answers relevant business questions.

- Declare the Grain: Explicitly define what a single row in your fact table represents. For example, the grain could be “one line item on a customer’s order”. This is the most critical step for a robust design.

- Identify Dimensions and Facts: Based on the grain, determine the descriptive dimensions (who, what, where, when) and the numeric facts (how much, how many) that correspond to the business process.

- Plan for Change: Implement a strategy for handling Slowly Changing Dimensions (SCDs) from the beginning. This allows you to track historical changes to dimensional attributes, like a customer’s address, without losing valuable context.
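
The steps above can be sketched as a tiny star schema. This is a minimal illustration using Python's built-in sqlite3 module, not a production design: the table names (dim_product, dim_store, fact_sales) and the sample rows are assumptions made for the example, and the grain is taken to be one line item on a customer's order.

```python
import sqlite3

# Hypothetical star schema: one fact table at "order line item" grain,
# surrounded by two dimension tables. All names and rows are illustrative.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_product (
    product_key      INTEGER PRIMARY KEY,
    product_name     TEXT,
    product_category TEXT
);
CREATE TABLE dim_store (
    store_key      INTEGER PRIMARY KEY,
    store_location TEXT
);
CREATE TABLE fact_sales (
    product_key  INTEGER REFERENCES dim_product(product_key),
    store_key    INTEGER REFERENCES dim_store(store_key),
    units_sold   INTEGER,
    sales_amount REAL
);
INSERT INTO dim_product VALUES (1, 'Espresso beans', 'Coffee'), (2, 'Green tea', 'Tea');
INSERT INTO dim_store VALUES (1, 'Sydney'), (2, 'Melbourne');
INSERT INTO fact_sales VALUES (1, 1, 3, 45.0), (1, 2, 1, 15.0), (2, 1, 2, 12.0);
""")


def sales_by_category(connection):
    """Aggregate the numeric facts, sliced by a descriptive dimension attribute."""
    return connection.execute("""
        SELECT p.product_category, SUM(f.units_sold), SUM(f.sales_amount)
        FROM fact_sales AS f
        JOIN dim_product AS p ON p.product_key = f.product_key
        GROUP BY p.product_category
        ORDER BY p.product_category
    """).fetchall()


print(sales_by_category(conn))
```

The analytical query needs only one join per dimension it slices by, which is the property that makes star schemas easy for business users and query optimisers alike.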

2. Extract, Transform, Load (ETL) Optimisation

The backbone of any data warehouse is the process that populates it with information: Extract, Transform, and Load (ETL). ETL optimisation is a systematic approach to efficiently move data from diverse source systems into the warehouse. This discipline focuses on refining each stage of the process to ensure data integration is not only fast and reliable but also scalable and cost-effective, making it one of the most critical data warehouse best practices.

An optimised ETL pipeline is designed for performance, implementing techniques like parallel processing, incremental loading, and robust error handling. The goal is to minimise latency and resource consumption while maximising data throughput and quality. For organisations dealing with unstructured or semi-structured data in image formats, leveraging OCR for data extraction becomes a vital part of the initial “Extract” phase, converting visual information into analysable data.

Why It’s a Best Practice

Poorly performing ETL processes create bottlenecks that directly impact the business’s ability to make timely decisions. Optimised ETL ensures that the data warehouse contains fresh, accurate data without causing performance degradation on source systems or the warehouse itself. For example, Uber relies on highly optimised ETL pipelines to process immense volumes of ride data, using incremental loading strategies to provide near real-time analytics on trip patterns, pricing, and driver availability. This capability is powered by a finely tuned data integration framework. You can explore more about data integration best practices on osher.com.au.

Actionable Implementation Tips

To effectively optimise your ETL processes, consider these key actions:

- Implement Change Data Capture (CDC): Instead of reloading entire tables, use CDC to identify and extract only the data that has changed since the last load. This drastically reduces processing time and network load.

- Use a Staging Area: Load raw data into a staging area first. This intermediate step allows for complex transformations, data cleansing, and validation to occur without impacting the performance of the production data warehouse.

- Optimise Transformations: Push transformations down to the source or target database where possible, as database engines are often more efficient at set-based operations than ETL tools. Use bulk load/insert operations instead of row-by-row updates.

- Monitor and Log Relentlessly: Implement comprehensive logging for all ETL jobs to track execution times, data volumes, and errors. This creates a foundation for ongoing performance tuning and quick troubleshooting.
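
As a rough illustration of incremental loading, the sketch below extracts only rows modified since the previous run by comparing a timestamp against a stored watermark. This is a simplified, timestamp-based stand-in for Change Data Capture (log-based CDC tools work differently), and the updated_at column, the function name, and the sample rows are all assumptions.

```python
from datetime import datetime


def extract_changed_rows(source_rows, last_watermark):
    """Return rows modified after the previous load, plus the new watermark.

    Assumes every source row carries a trustworthy 'updated_at' timestamp.
    """
    changed = [row for row in source_rows if row["updated_at"] > last_watermark]
    # If nothing changed, keep the old watermark so the next run starts from it.
    new_watermark = max((row["updated_at"] for row in changed), default=last_watermark)
    return changed, new_watermark


# Hypothetical source table contents.
source = [
    {"id": 1, "amount": 10.0, "updated_at": datetime(2025, 1, 1, 9, 0)},
    {"id": 2, "amount": 25.0, "updated_at": datetime(2025, 1, 2, 9, 0)},
    {"id": 3, "amount": 40.0, "updated_at": datetime(2025, 1, 3, 9, 0)},
]

changed, watermark = extract_changed_rows(source, datetime(2025, 1, 1, 12, 0))
print([row["id"] for row in changed], watermark)
```

Persisting the returned watermark between runs is what turns a full reload into an incremental one; a rerun with the new watermark extracts nothing until the source changes again.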

3. Data Quality Management

High-quality data is the lifeblood of any effective data warehouse; without it, even the most sophisticated analytics are rendered useless. Data Quality Management is a comprehensive framework that ensures data is accurate, complete, consistent, and reliable. This discipline is one of the most critical data warehouse best practices, as it establishes trust in the insights generated from the warehouse. It involves a systematic approach to profiling, validating, cleansing, and monitoring data throughout its lifecycle.

Implementing a robust Data Quality Management framework means moving beyond one-off fixes to a continuous process. It treats data as a strategic asset that requires active governance and maintenance. This process identifies anomalies, standardises formats, and resolves inconsistencies before they can corrupt downstream reporting and decision-making. The goal is to create a single source of truth that the entire organisation can rely on with confidence.

Why It’s a Best Practice

Poor data quality directly leads to flawed business intelligence, eroding user trust and potentially causing significant financial or operational damage. Data Quality Management prevents this by embedding checks and balances directly into data pipelines. For example, financial institutions like JPMorgan Chase rely on stringent data quality programs to ensure regulatory reporting is accurate, while healthcare providers such as Kaiser Permanente implement patient data quality initiatives to improve care outcomes and analytics. These organisations recognise that proactive data quality is far less costly than reactive data correction.

Actionable Implementation Tips

To embed data quality into your data warehouse architecture, focus on these actionable steps:

- Establish Data Quality Metrics: Define clear Key Performance Indicators (KPIs) for data quality, such as completeness percentage, accuracy rate, or number of duplicate records. These metrics make quality measurable and transparent.

- Implement Checks at Multiple Stages: Don’t wait until data is loaded into the warehouse. Apply validation and cleansing rules during ingestion (ETL/ELT), upon entry to the staging area, and before it is made available to end-users. Learn more about effective data cleansing techniques.

- Create Data Steward Roles: Assign ownership of specific data domains to data stewards. These individuals are responsible for defining quality rules, resolving issues, and acting as the subject matter expert for their data.

- Use Automated Tools: Leverage data quality tools (like Informatica Data Quality or Talend Data Quality) to automate profiling, cleansing, and continuous monitoring. Manual checks are not scalable for modern data volumes.
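
To make the idea of measurable data quality concrete, here is a minimal sketch of two of the metrics mentioned above: completeness percentage and number of duplicate records. The function names, field names, and sample customer rows are hypothetical, and dedicated data quality tools offer far richer profiling than this.

```python
def completeness_pct(records, field):
    """Percentage of records in which the field is present and non-empty."""
    if not records:
        return 100.0
    filled = sum(1 for record in records if record.get(field) not in (None, ""))
    return 100.0 * filled / len(records)


def duplicate_count(records, key_fields):
    """Number of records whose key has already been seen earlier in the batch."""
    seen = set()
    duplicates = 0
    for record in records:
        key = tuple(record.get(field) for field in key_fields)
        if key in seen:
            duplicates += 1
        else:
            seen.add(key)
    return duplicates


# Hypothetical staging batch.
customers = [
    {"customer_id": 1, "email": "a@example.com"},
    {"customer_id": 2, "email": None},
    {"customer_id": 2, "email": None},
    {"customer_id": 3, "email": "c@example.com"},
]

print(completeness_pct(customers, "email"))
print(duplicate_count(customers, ["customer_id"]))
```

Checks like these can run at each pipeline stage, with a load failing or raising an alert when a metric falls below an agreed threshold.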

4. Slowly Changing Dimensions (SCD) Implementation

A critical challenge in data warehousing is managing how dimension attributes change over time. A robust methodology for handling these changes, known as Slowly Changing Dimensions (SCD), is one of the most vital data warehouse best practices. This approach, popularised by Ralph Kimball, provides a framework for tracking historical data accurately, ensuring that business facts remain associated with the correct dimensional context from the time they occurred.

SCD techniques allow an organisation to decide whether to overwrite old data, add new records to track changes, or create new fields to store limited historical information. For example, a bank needs this to track a customer’s address changes over the life of a loan, or a retailer uses it to manage product re-categorisations without corrupting historical sales analysis. This preserves the integrity of time-based reporting and analysis.

Why It’s a Best Practice

Implementing an SCD strategy is fundamental for maintaining historical accuracy, a non-negotiable requirement for any reliable data warehouse. Without it, updating a dimension attribute like a sales representative’s assigned territory would retroactively alter all past sales records, making historical performance analysis impossible. SCDs solve this by creating a framework that preserves the past while accurately reflecting the present. This enables complex, time-sensitive queries that are essential for trend analysis, compliance reporting, and understanding long-term business evolution.

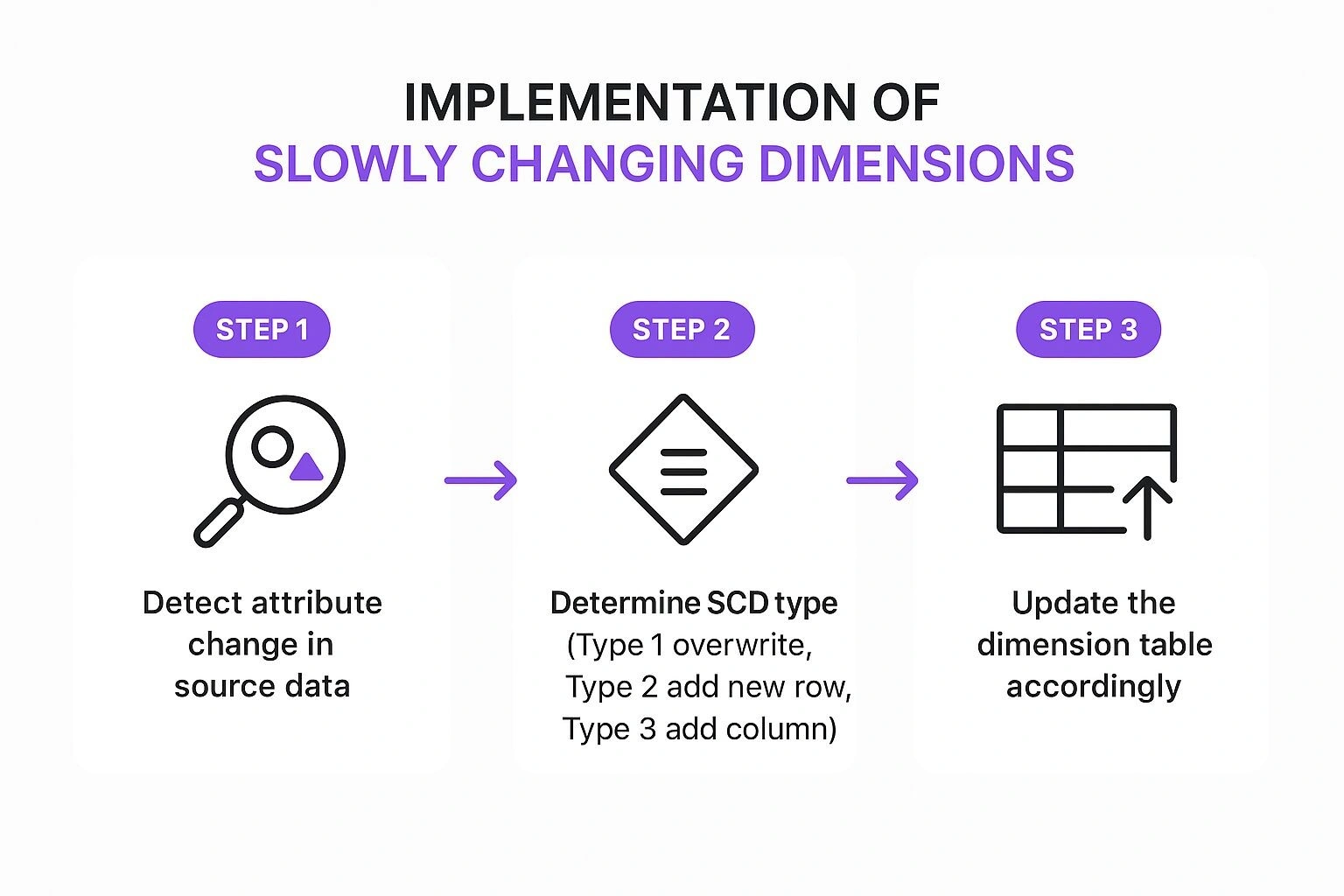

The following infographic illustrates the core decision-making process within an SCD workflow.

This process flow highlights the systematic approach required, from detecting a change in the source system to making a deliberate choice about how to update the dimension table based on predefined business rules.

Actionable Implementation Tips

To effectively implement SCDs, consider these critical steps:

- Choose the Right SCD Type: Select an SCD type (e.g., Type 1, 2, or 3) based on specific business requirements. Type 2 (adding a new row) is the most common for preserving full history, but may not be necessary for every attribute.

- Use Surrogate Keys: Always use system-generated surrogate keys as the primary key for dimension tables instead of natural business keys. This is essential for uniquely identifying different historical versions of the same dimension member in an SCD Type 2 implementation.

- Automate SCD Processing: Build the SCD logic directly into your ETL/ELT pipelines. This ensures that changes are detected and handled consistently and automatically as new data is loaded, reducing manual effort and potential errors.

- Implement Effective Dating: When using SCD Type 2, include start_date and end_date columns (or a current flag) in the dimension table to clearly define the time period for which each version of the record was active. This simplifies historical queries.
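
The tips above can be combined into a small SCD Type 2 sketch. It is an in-memory illustration only: the dimension is a list of dictionaries, and the column names (customer_key as the surrogate key, customer_id as the natural key, start_date, end_date) and the apply_scd2 function are assumptions for the example. A real pipeline would typically express the same logic as a merge statement in the warehouse.

```python
from datetime import date


def apply_scd2(dimension, natural_key, new_attrs, change_date):
    """Close the current version of a dimension member and append a new one.

    An end_date of None marks the currently active version.
    """
    current = next(
        (row for row in dimension
         if row["customer_id"] == natural_key and row["end_date"] is None),
        None,
    )
    if current is not None:
        if all(current.get(name) == value for name, value in new_attrs.items()):
            return dimension  # No attribute changed, so no new version is needed.
        current["end_date"] = change_date  # Expire the old version.

    # Surrogate key: system-generated, independent of the business key.
    next_key = max((row["customer_key"] for row in dimension), default=0) + 1
    dimension.append({
        "customer_key": next_key,
        "customer_id": natural_key,
        **new_attrs,
        "start_date": change_date,
        "end_date": None,
    })
    return dimension


dim_customer = []
apply_scd2(dim_customer, "C1", {"address": "1 Old St"}, date(2024, 1, 1))
apply_scd2(dim_customer, "C1", {"address": "9 New Rd"}, date(2025, 3, 1))
for row in dim_customer:
    print(row)
```

Because each version has its own surrogate key, fact rows loaded before the address change keep pointing at the old version, which is how history is preserved.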

5. Data Partitioning and Indexing Strategy

As data volumes explode, maintaining query performance becomes a significant challenge. This is where a robust data partitioning and indexing strategy becomes one of the most critical data warehouse best practices. This technique involves physically dividing large tables into smaller, more manageable pieces called partitions, and creating targeted indexes to speed up data retrieval. By doing so, the database engine can scan only the relevant partitions instead of the entire table, drastically reducing query times.

For instance, a table containing years of sales data can be partitioned by month or quarter. When a user queries for sales in a specific month, the system only accesses that month’s partition, ignoring all others. This approach, popularised by platforms like Oracle and PostgreSQL, not only enhances query performance but also simplifies data management tasks like backups and archiving by allowing operations on individual partitions.

Why It’s a Best Practice

A well-designed partitioning and indexing strategy directly tackles the performance bottlenecks common in large-scale data warehouses. It enables parallel processing, as queries can run concurrently across different partitions, and improves maintenance efficiency. Without it, queries on multi-terabyte tables would be impractically slow, rendering the warehouse ineffective for timely analysis. For example, eBay partitions its massive transaction tables by date to enable fast historical analysis, and Netflix partitions viewing data by user segments to power its recommendation engine efficiently.

Actionable Implementation Tips

To effectively implement partitioning and indexing, focus on these key steps:

- Partition Based on Query Patterns: Analyse your most frequent and critical queries. Partition tables based on the columns most commonly used in WHERE clauses, such as date, region, or customer ID.

- Use Date-Based Partitioning for Time-Series Data: For any data that has a time component, like logs or sales transactions, partitioning by a date range (day, month, year) is almost always the optimal choice.

- Implement Partition Pruning: Ensure your queries are written to take advantage of partitioning. This means including the partition key in the WHERE clause, allowing the database to “prune” or ignore irrelevant partitions.

- Regularly Maintain Indexes and Statistics: An effective indexing strategy is crucial. Create indexes on columns frequently used for filtering and joining. Regularly update table statistics and rebuild or reorganise indexes to prevent fragmentation and ensure the query optimiser makes informed decisions.
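
The effect of partition pruning can be shown with a toy model. The class below is not how any database implements partitioning; it is a hypothetical in-memory table that buckets rows by month, so that the difference between a pruned read and a full scan is visible as a count of partitions touched.

```python
from collections import defaultdict
from datetime import date


class MonthlyPartitionedTable:
    """Toy table that stores rows in one bucket per (year, month)."""

    def __init__(self):
        self.partitions = defaultdict(list)

    def insert(self, row):
        key = (row["sale_date"].year, row["sale_date"].month)
        self.partitions[key].append(row)

    def sales_in_month(self, year, month):
        """Pruned read: touch only the partition named by the filter."""
        rows = self.partitions.get((year, month), [])
        partitions_scanned = 1 if rows else 0
        return sum(row["amount"] for row in rows), partitions_scanned

    def sales_in_month_full_scan(self, year, month):
        """Unpruned read: every partition is scanned and filtered row by row."""
        total = 0.0
        for rows in self.partitions.values():
            for row in rows:
                if (row["sale_date"].year, row["sale_date"].month) == (year, month):
                    total += row["amount"]
        return total, len(self.partitions)


table = MonthlyPartitionedTable()
for sale_date, amount in [
    (date(2025, 1, 5), 100.0),
    (date(2025, 1, 20), 50.0),
    (date(2025, 2, 3), 75.0),
    (date(2025, 3, 9), 20.0),
]:
    table.insert({"sale_date": sale_date, "amount": amount})

pruned = table.sales_in_month(2025, 1)
full = table.sales_in_month_full_scan(2025, 1)
print(pruned, full)
```

Both reads return the same total, but the pruned read touches one partition while the full scan touches all of them; on a multi-year table that gap is where the query time goes.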

6. Master Data Management (MDM)

Master Data Management (MDM) is a comprehensive discipline for defining and managing the critical data of an organisation to provide a single, trusted point of reference. It ensures that core business entities like customers, products, suppliers, and locations have a unified, authoritative source of truth. Implementing MDM is a crucial data warehouse best practice as it eliminates the data inconsistencies and redundancies that often undermine analytics and operational efficiency.

The core principle of MDM involves creating a “golden record” for each master data entity. This is achieved by consolidating data from various source systems, cleansing it, removing duplicates, and enriching it with additional information. This single source of truth is then synchronised across all relevant applications and analytical platforms, including the data warehouse, ensuring consistency enterprise-wide.
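
A golden record can be sketched with a simple survivorship rule. The example below assumes the source records have already been matched to the same customer, and it applies one possible rule: for each field, keep the most recently updated non-empty value. The field names, the ISO date strings, and the rule itself are illustrative assumptions; real MDM hubs usually support several configurable survivorship strategies.

```python
def build_golden_record(source_records):
    """Merge matched records, letting newer non-empty values win per field.

    Assumes 'updated_at' is an ISO 8601 date string, so plain string
    ordering matches chronological ordering.
    """
    golden = {}
    for record in sorted(source_records, key=lambda r: r["updated_at"]):
        for field, value in record.items():
            if value not in (None, ""):
                golden[field] = value
    return golden


# Two hypothetical source-system views of the same customer.
crm_record = {
    "customer_id": "C1",
    "name": "J. Smith",
    "email": "",
    "phone": "555-0100",
    "updated_at": "2024-05-01",
}
billing_record = {
    "customer_id": "C1",
    "name": "Jane Smith",
    "email": "jane@example.com",
    "phone": None,
    "updated_at": "2025-01-15",
}

golden = build_golden_record([crm_record, billing_record])
print(golden)
```

The newer billing record supplies the name and email, while the phone number survives from the older CRM record because the newer source left it empty.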

Why It’s a Best Practice

MDM directly tackles the “garbage in, garbage out” problem that plagues many data initiatives. Without it, a data warehouse may be technically sound but filled with conflicting information, such as multiple records for the same customer or different names for the same product. This leads to inaccurate reporting and a lack of trust in the data. For instance, Coca-Cola’s global MDM initiative enables a consistent view of customers across more than 200 countries, allowing for more effective, unified marketing and sales strategies.

Actionable Implementation Tips

To successfully integrate MDM into your data warehouse strategy, consider these practical steps:

- Prioritise Data Domains: Begin with the most critical and impactful master data domains. For most organisations, this means starting with either “Customer” or “Product” data, as these typically offer the highest business value.

- Establish Data Governance: Clearly define data ownership and stewardship roles. Assign responsibility for maintaining the quality and integrity of each master data domain. This governance structure is vital for long-term success.

- Implement Data Quality Rules: Define and enforce data quality rules at the point of entry and within the MDM hub. This includes standards for formatting, validation, and de-duplication to keep the master data clean.

- Invest in Change Management: An MDM implementation is as much a people and process change as it is a technology project. Invest in training and communication to ensure business users understand and adopt the new processes for managing master data.

7. Data Security and Governance Framework

Establishing a comprehensive data security and governance framework is a non-negotiable component of modern analytics. This approach moves beyond basic access controls to create a holistic strategy that protects sensitive data through encryption, auditing, and strict policy enforcement. As a critical data warehouse best practice, it ensures data confidentiality, integrity, and availability while aligning with complex legal and business requirements.

This framework involves defining clear policies for data ownership, usage, privacy, and regulatory adherence. By classifying data and implementing role-based access, organisations can safeguard their most valuable asset. This structured approach is essential for building trust with customers and stakeholders and mitigating the significant financial and reputational risks associated with data breaches.

Why It’s a Best Practice

In an era of stringent regulations like GDPR and HIPAA, a robust security and governance framework is not just good practice; it’s a legal necessity. It prevents unauthorised access and misuse of data, which is paramount for industries handling sensitive information. For example, financial institutions like Goldman Sachs rely on multi-layered security to protect financial data, while the Mayo Clinic implements strict HIPAA-compliant governance to manage patient information, ensuring both privacy and regulatory compliance.

Actionable Implementation Tips

To build an effective security and governance framework, focus on these foundational steps:

- Classify Data by Sensitivity: Categorise your data into levels like public, internal, confidential, and restricted. This allows you to apply security controls that are appropriate for the data’s risk level.

- Implement the Principle of Least Privilege: Grant users the minimum level of access necessary to perform their job functions. This simple but powerful principle significantly reduces the attack surface.

- Automate Compliance Monitoring: Use automated tools to continuously monitor for compliance with regulations and internal policies. This enables real-time alerts and simplifies reporting for audits.

- Conduct Regular Audits: Perform regular security audits and penetration testing to identify and remediate vulnerabilities before they can be exploited by malicious actors.
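
Data classification and least privilege can be combined in a few lines. The sketch below is a conceptual model rather than any platform's access control API: the sensitivity levels mirror the ones listed above, while the role names, column names, and the can_read function are hypothetical.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Hypothetical mapping of roles to the highest level they may read.
ROLE_CLEARANCE = {
    "analyst": Sensitivity.INTERNAL,
    "finance_manager": Sensitivity.CONFIDENTIAL,
    "security_officer": Sensitivity.RESTRICTED,
}

# Hypothetical classification of warehouse columns.
COLUMN_CLASSIFICATION = {
    "store_location": Sensitivity.PUBLIC,
    "units_sold": Sensitivity.INTERNAL,
    "customer_email": Sensitivity.CONFIDENTIAL,
    "card_number": Sensitivity.RESTRICTED,
}


def can_read(role, column):
    """Allow access only when the role's clearance covers the column's level."""
    clearance = ROLE_CLEARANCE.get(role)
    classification = COLUMN_CLASSIFICATION.get(column)
    if clearance is None or classification is None:
        return False  # Deny by default: unknown roles or unclassified columns.
    return clearance >= classification


print(can_read("analyst", "units_sold"))
print(can_read("analyst", "customer_email"))
```

Denying access whenever a role or column is missing from the mappings keeps newly added, not yet classified data closed until someone makes an explicit decision about it.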

8. Scalable Architecture Design

A modern data warehouse must be built to grow. Scalable architecture design is an approach that ensures your system can handle increasing data volumes, more concurrent users, and greater query complexity without degrading performance. This is one of the most critical data warehouse best practices for long-term success, moving beyond fixed on-premises hardware to embrace adaptable, cloud-native solutions. It involves designing for elasticity, where resources can be provisioned and de-provisioned on demand.

The core principle is to decouple storage and compute resources, allowing each to scale independently. This is a hallmark of platforms like Snowflake and Google BigQuery, and of Amazon Redshift when deployed on RA3 nodes or Redshift Serverless. In practice, a scalable design usually means building on cloud infrastructure, which allows flexible resource allocation as the platform grows. This architectural foresight prevents performance bottlenecks and costly migrations as business needs evolve.

Why It’s a Best Practice

A scalable architecture future-proofs your analytics platform, ensuring it remains performant and cost-effective as data gravity intensifies. Organisations like Netflix and Spotify rely on this to serve millions of users with real-time analytics and recommendations. Without it, a data warehouse quickly becomes a bottleneck, unable to deliver timely insights and hindering the organisation’s digital transformation initiatives. It shifts the paradigm from capacity planning to capacity management, enabling agility and innovation. For more on this, learn about digital transformation best practices.

Actionable Implementation Tips

To build a truly scalable data warehouse architecture, concentrate on these strategies:

- Design for Horizontal Scaling: From the outset, build your system to scale out by adding more nodes or clusters, rather than scaling up with more powerful, expensive hardware. This is the foundation of cloud elasticity.

- Adopt Infrastructure as Code (IaC): Use tools like Terraform or CloudFormation to define and manage your infrastructure programmatically. This ensures consistent, repeatable, and version-controlled deployments.

- Use Containerisation: Employ technologies like Docker and Kubernetes to package and deploy components. This provides portability across environments and simplifies the management of microservices-based architectures.

- Implement Comprehensive Monitoring: Set up robust monitoring and alerting to track performance metrics, resource utilisation, and query costs. This allows you to proactively manage scaling and optimise efficiency.

9. Performance Monitoring and Optimisation

A high-performing data warehouse is not a “set and forget” asset; it demands continuous attention. Performance monitoring and optimisation is the systematic practice of tracking key metrics, identifying system bottlenecks, and proactively tuning the environment to ensure it meets service-level agreements. This proactive approach is a critical data warehouse best practice that prevents performance degradation before it impacts business users and critical operations.

The process involves establishing performance baselines and using tools like those from Datadog or Grafana Labs to continuously measure against them. When deviations occur, administrators can analyse query execution plans, resource utilisation, and data pipeline latencies to pinpoint the root cause. This ensures the data warehouse remains fast, reliable, and cost-effective, directly contributing to user satisfaction and trust in the data.

Why It’s a Best Practice

Without diligent monitoring, a data warehouse’s performance inevitably degrades over time as data volumes grow and query complexity increases. This leads to slow reports, failed ETL jobs, and frustrated users, eroding the value of the entire investment. Proactive optimisation addresses these issues before they become critical. For example, LinkedIn continuously monitors its vast data pipelines in real-time to guarantee timely data delivery, while Salesforce meticulously manages the performance of its customer data processing to uphold its platform’s responsiveness.

Actionable Implementation Tips

To effectively implement performance monitoring and optimisation, concentrate on these key actions:

- Establish Baseline Metrics: Before optimising, you must know what “good” looks like. Document baseline performance for critical queries and ETL processes during normal operation. This provides a benchmark for all future tuning efforts.

- Use Query Execution Plans: The execution plan is the database’s roadmap for running a query. Regularly analyse the plans for slow or expensive queries to identify inefficiencies like full table scans or incorrect join types.

- Monitor Technical and Business Metrics: Track both system-level metrics (CPU, memory, I/O) and business-facing metrics (report load time, data freshness). This provides a holistic view of performance and its impact on the business.

- Implement Automated Testing: Integrate performance testing into your CI/CD pipeline. This allows you to catch performance regressions caused by new code or schema changes before they reach production.
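
Reading an execution plan can be demonstrated with SQLite, which ships with Python. The sketch below compares the plan for the same query before and after adding an index; the sales table, the idx_sales_region index, and the plan_details helper are assumptions for the example, and the exact wording of the plan text varies between SQLite versions and differs again on other database engines.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE sales (sale_id INTEGER PRIMARY KEY, region TEXT, amount REAL)"
)
conn.executemany(
    "INSERT INTO sales (region, amount) VALUES (?, ?)",
    [("north", 10.0), ("south", 20.0), ("north", 5.0)],
)


def plan_details(connection, sql, params=()):
    """Return the human-readable detail column of EXPLAIN QUERY PLAN."""
    cursor = connection.execute("EXPLAIN QUERY PLAN " + sql, params)
    return [row[3] for row in cursor]


query = "SELECT amount FROM sales WHERE region = ?"

# Without an index, the filter forces a scan of the whole table.
plan_before = plan_details(conn, query, ("north",))

# With an index on the filter column, the engine can search instead of scan.
conn.execute("CREATE INDEX idx_sales_region ON sales (region)")
plan_after = plan_details(conn, query, ("north",))

print(plan_before)
print(plan_after)
```

A check like this can also run in a CI/CD pipeline: asserting that a critical query's plan still uses the expected index is one inexpensive way to catch a regression introduced by a schema change.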

9 Key Data Warehouse Practices Comparison

| Item | Implementation Complexity | Resource Requirements | Expected Outcomes | Ideal Use Cases | Key Advantages |
|---|---|---|---|---|---|
| Dimensional Modelling | Moderate to high; requires upfront business understanding | Moderate; design and maintenance effort | Intuitive business-friendly data structures; excellent query performance | Analytical reporting, business intelligence | Simplifies reporting; supports drill-down/roll-up; reduces redundancy |
| ETL Optimisation | High; complex design and maintenance | High; specialised tools and expertise | Faster data processing; improved data quality | Data integration, batch and real-time loading | Faster processing; improved quality; automated error recovery |
| Data Quality Management | High; ongoing monitoring and cultural change | Moderate to high; tools and staffing | Trusted, accurate, and consistent data | Data governance, compliance, analytics | Increases data trust; reduces errors; supports compliance |
| Slowly Changing Dimensions | Moderate; careful planning and ETL design | Moderate; storage and processing overhead | Maintains historical accuracy; supports trend analysis | Handling time-variant dimension data | Preserves history; flexible reporting over time |
| Data Partitioning and Indexing | Moderate to high; design and tuning needed | Moderate; extra storage for indexes | Dramatically improved query performance | Large datasets, time-series analysis | Faster queries; efficient maintenance; better resource use |
| Master Data Management (MDM) | Very high; significant organisational change | High; governance, tools, and processes | Single authoritative data source; consistent master entities | Enterprise-wide reference data management | Eliminates inconsistencies; improves reliability and compliance |
| Data Security and Governance | High; complex security and compliance efforts | High; encryption, monitoring, audits | Protected sensitive data; regulatory compliance | Sensitive data protection, regulated industries | Ensures data confidentiality; reduces breach risks |
| Scalable Architecture Design | High; cloud and distributed systems expertise | High; cloud resources and tools | Handles growth efficiently; flexible resource allocation | Large-scale, growing data platforms | Supports scalability; cost optimisation; future-proofing |
| Performance Monitoring | Moderate; requires dedicated tools and expertise | Moderate; monitoring infrastructure | Proactive issue detection; optimised system usage | Operational efficiency; SLA compliance | Improves user experience; prevents degradation; data-driven tuning |

From Theory to Transformation: Your Next Steps in Data Warehouse Mastery

We’ve explored a comprehensive suite of nine foundational data warehouse best practices, from architectural design to ongoing operational excellence. Viewing these principles not as a checklist but as interconnected components of a cohesive strategy is the first step towards true data mastery. The journey from a siloed, legacy system to a high-performing, centralised data warehouse is a significant undertaking, but the rewards are transformative.

By embracing dimensional modelling and a scalable architecture, you lay the groundwork for future growth. Optimising your ETL processes and implementing robust data quality frameworks ensures the information flowing into your warehouse is reliable and timely. These technical foundations are crucial, but they realise their full potential only when supported by strong organisational structures. This is where a clear data governance framework, robust security protocols, and a dedicated Master Data Management (MDM) strategy become non-negotiable. They are the pillars that transform raw data into a trusted, enterprise-wide asset.

Bridging the Gap Between Knowledge and Action

Understanding these best practices is one thing; implementing them effectively is another. The real challenge lies in weaving these concepts into the fabric of your organisation’s daily operations. This requires a cultural shift towards data-driven decision-making, supported by continuous performance monitoring and a commitment to iterative improvement.

Your immediate next steps should involve a candid assessment of your current data warehouse maturity against the principles outlined:

- Audit Your Architecture: Does your current model support complex queries efficiently? Is your architecture designed for scalability?

- Evaluate Data Pipelines: Are your ETL jobs optimised, resilient, and well-documented? Where are the bottlenecks?

- Assess Governance and Security: Who owns the data? Are access controls and security measures adequate to protect sensitive information and ensure compliance?

Answering these questions will illuminate your path forward, highlighting priority areas for investment and improvement. This journey is not a one-time project but a continuous cycle of optimisation.

The Strategic Value of a Modern Data Warehouse

Ultimately, mastering these data warehouse best practices is about more than just technology; it’s about empowering your organisation. A well-executed data warehouse breaks down departmental silos, provides a single source of truth, and equips your teams with the insights needed to innovate, optimise operations, and outmanoeuvre the competition. It is the engine that drives strategic growth, turning your data from a passive resource into your most powerful competitive advantage. By committing to this holistic approach, you ensure your data infrastructure is not just a repository of past events, but a dynamic platform for shaping your future success.

Ready to translate these best practices into tangible results? The team at Osher Digital specialises in designing and implementing modern data solutions that drive efficiency and growth. Partner with us to build a data warehouse that not only meets today’s analytical demands but is also engineered for the opportunities of tomorrow.
