AI failures: How to prevent them and get real value from AI


When an AI project goes off the rails, it’s rarely because of a single line of bad code. The real culprits are usually found much deeper: in the quality of the data, the choice of the model, or how the whole thing fits into your existing business operations.

Why so many AI projects stumble

Ever had that sinking feeling when a new, expensive AI tool just isn’t delivering the magic it promised? You’re definitely not alone. Many organisations invest heavily in AI only to find their projects fall flat, leaving them with more problems than they started with.

To get to the bottom of this, let’s think about building an AI system like cooking a gourmet meal. It’s a precise process with several crucial steps. If you get just one part wrong, the final dish is destined for the bin.

The recipe for AI failure

Let’s say your AI model is the fancy recipe, the sophisticated instructions designed to produce a brilliant result. But even the world’s best recipe is completely useless without high-quality ingredients. In the AI world, your data is your ingredients.

If your ingredients are stale, incomplete, or simply the wrong type, the meal is guaranteed to be a disaster. It’s exactly the same with AI. Poorly managed or irrelevant data is the leading cause of AI project failure.

Then there’s your kitchen, which represents your business systems and processes. You could have a Michelin-star recipe and the freshest organic ingredients, but if your oven is broken or your pans are filthy, you’re not cooking anything. This is what happens when a new AI tool is plugged into outdated, incompatible software or a workflow that no one actually follows.

This cooking analogy helps break down the complexities of AI failure into a few core problems that are much easier to diagnose. Let’s look at the four common reasons things go wrong.

Four common reasons AI projects fail

This table gives a quick summary of the main failure points that businesses encounter when implementing AI solutions.

| Failure Point | Simple Explanation (The Cooking Analogy) | Business Impact |
|---|---|---|
| Data Problems | Using stale, incorrect, or irrelevant ingredients. | Inaccurate outputs, biased decisions, and completely wasted investment. |
| Model Issues | The recipe is flawed or can’t handle a different type of oven. | The AI makes poor predictions and can’t adapt to real-world changes. |
| Integration Challenges | The kitchen setup can’t support the recipe’s instructions. | The AI tool doesn’t work with your existing software, causing chaos. |
| Unclear Goals | You started cooking without knowing what meal you wanted to make. | The project has no direction, costs spiral, and it solves no real problem. |

Seeing it laid out like this makes it clear: these aren’t just technical glitches. They are fundamental business and process issues that need to be sorted out before any model is built.

AI projects don’t fail because the technology is bad. They fail because the foundational elements, like data quality and clear business objectives, were not properly addressed from the very beginning.

This isn’t just theory. In Australia, up to 80% of AI projects fail to deliver their intended value. Recent studies attribute this high failure rate to poor data quality, a lack of in-house expertise, and misaligned business goals. It’s a stark reminder of the risks of diving into AI without a solid plan. You can find more insights about why Australian AI projects fail in recent analyses.

Understanding these common pitfalls is the first step toward avoiding them. In the sections that follow, we’ll dive deeper into each of these failure points, showing you how to spot them and, more importantly, how to get your AI recipe right from the start.

The hidden problem of poor data quality

Let’s get straight to the biggest cause of AI headaches. If your AI model is the engine, then data is its fuel. Trying to run an AI on poor quality data is like pouring dirty, watered-down petrol into a high-performance race car. It’s never going to run properly, and you risk damaging the engine permanently.

This isn’t just a technical problem; it’s a business one. Bad data directly causes most AI failures. It leads to wasted time, money, and a deep distrust in the technology across your whole organisation. Imagine spending months and a small fortune training a new model, only to realise its predictions are completely useless because the data was flawed from the start.

This exact situation is more common than you might think. Among Australian firms with AI already in production, a huge 85% report inaccurate or misleading results. This is driven by poor data quality and information trapped in separate systems, a problem made worse by the rush to adopt AI without strong foundations.

What bad data looks like in business

When we talk about ‘bad data’, we’re not just talking about a few typos in a spreadsheet. In a business context, it takes many forms, each one capable of derailing your AI project. Understanding these specific issues is the first step to fixing them.

Here are a few of the most common culprits:

- Incomplete records: This could be customer profiles missing phone numbers or sales entries without postcodes. An AI trying to find geographic sales trends will fail if it doesn’t have complete location data to work with.

- Outdated information: Think about financial models using last year’s market data or marketing AI targeting customers who have long since changed their preferences. The world changes quickly, and stale data leads to irrelevant conclusions.

- Inconsistent formats: One department might list dates as “DD/MM/YYYY” while another uses “Month Day, Year”. An AI can’t make sense of this without a huge clean-up effort, which is where solutions for automated data processing become so valuable.

- Siloed data: This is a classic. Your sales data lives in one system, your marketing data in another, and your customer service logs are somewhere else entirely. The AI can’t see the whole picture, so it misses vital connections that a human might spot easily.
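To make two of these problems concrete, here is a minimal Python sketch of an automated audit. It flags incomplete records and converts the two date styles mentioned above into one standard format. The field names, sample rows, and accepted formats are illustrative assumptions, not a prescribed schema; a real pipeline would use your own columns and would typically run checks like these before any data reaches a model.

```python
from datetime import datetime

# Hypothetical field names; swap in the columns your own systems actually use.
REQUIRED_FIELDS = ("customer_id", "phone", "postcode", "order_date")

# The two date styles from the example above: "DD/MM/YYYY" and "Month Day, Year".
DATE_FORMATS = ("%d/%m/%Y", "%B %d, %Y")


def normalise_date(raw):
    """Return an ISO date string (YYYY-MM-DD), or None if no known format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def audit_records(records):
    """Split records into clean rows and a list of (row index, problem) pairs."""
    clean, problems = [], []
    for i, rec in enumerate(records):
        # Incomplete records: any required field that is absent or empty.
        missing = [f for f in REQUIRED_FIELDS if not rec.get(f)]
        if missing:
            problems.append((i, "missing: " + ", ".join(missing)))
            continue
        # Inconsistent formats: convert every date to one standard form.
        iso = normalise_date(rec["order_date"])
        if iso is None:
            problems.append((i, "unrecognised date: " + rec["order_date"]))
            continue
        clean.append({**rec, "order_date": iso})
    return clean, problems


# Hypothetical sample rows for illustration.
sample = [
    {"customer_id": "C1", "phone": "0400 000 001", "postcode": "4000", "order_date": "05/03/2024"},
    {"customer_id": "C2", "phone": "0400 000 002", "postcode": "", "order_date": "March 5, 2024"},
    {"customer_id": "C3", "phone": "0400 000 003", "postcode": "2000", "order_date": "March 5, 2024"},
    {"customer_id": "C4", "phone": "0400 000 004", "postcode": "3000", "order_date": "2024.03.05"},
]
clean, problems = audit_records(sample)
print(problems)  # rows 1 and 3 (zero-based) are flagged for review
```

Flagged rows are set aside for a person to fix rather than silently dropped, so the audit also shows you where your data problems are concentrated.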

The core principle is simple: garbage in, garbage out. No matter how advanced your AI model is, it can’t create accurate insights from flawed or incomplete information. It’s like asking a detective to solve a case when half the clues are missing and the other half are false.

The real cost of flawed data

The consequences of these data issues are severe. An AI trained on incomplete customer records might develop biases, unfairly favouring one demographic over another simply because there’s more data available for that group. This can lead to compliance headaches and damage your brand’s reputation.

Furthermore, models trained on outdated figures will make wildly inaccurate forecasts, causing your business to misallocate resources, miss sales targets, or overspend on inventory. Each of these AI failures erodes confidence. Team members start to doubt the technology, leaders question the investment, and the entire project loses momentum.
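A quick representation check is one simple way to spot the kind of demographic skew described above before training begins. The Python sketch below counts how much of a dataset each group accounts for and flags any group below a minimum share. The `age_band` field, the sample counts, and the 10% cut-off are illustrative assumptions. A low share is a prompt to investigate, not proof that a model will be biased.

```python
from collections import Counter


def representation_report(records, group_field, min_share=0.10):
    """Return each group's share of the data and the groups that fall below min_share."""
    counts = Counter(rec[group_field] for rec in records)
    total = sum(counts.values())
    shares = {group: n / total for group, n in counts.items()}
    under_represented = sorted(g for g, s in shares.items() if s < min_share)
    return shares, under_represented


# Hypothetical training set skewed towards younger customers.
training = ([{"age_band": "18-34"}] * 60
            + [{"age_band": "35-54"}] * 35
            + [{"age_band": "55+"}] * 5)
shares, flagged = representation_report(training, "age_band")
print(flagged)  # ['55+']
```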

To prevent AI failures that stem from flawed inputs, start with high-quality data; exploring the best data enrichment tools can help fill gaps in your existing records. Ultimately, cleaning and organising your data is not just a preliminary step; it is the most critical part of the entire AI journey.

When your AI model misses the mark

Alright, so you’ve taken all the advice from the previous section to heart. Your data is clean, organised, and ready for action. Yet, when you finally put your AI to work, it starts making some truly bizarre, illogical decisions. What’s going on here?

This brings us squarely into the second major category of AI failures: the ones that happen inside the model itself. Think of the model as the ‘brain’ of your AI system. And just like a human brain, even with perfect information, it can still learn the wrong lessons or develop some pretty unhelpful habits.

We’ve all known a student who crams for a test by memorising flashcards. They might ace the exam by regurgitating specific answers, but they haven’t actually understood the subject. The moment you ask them to apply that knowledge to a new, unfamiliar problem, they’re completely lost. This is exactly how many AI models behave. They get top marks in training but fail spectacularly in the real world.

The problem of overfitting

One of the most common model-related AI failures is a classic problem called overfitting. This is the technical term for our cramming student. The AI becomes so fixated on the tiny details and statistical noise in its training data that it essentially memorises it, warts and all.

Instead of learning the general patterns and underlying rules, it learns the exceptions. When this overfitted model encounters new, live data from your business, it struggles because the real world never perfectly matches its training textbook.

This usually leads to some tell-tale symptoms:

- The model performs exceptionally well during testing but its accuracy plummets the second it goes live.

- It makes confident, but completely wrong, predictions on data that’s only slightly different from what it’s seen before.

- The model is brittle; it can’t adapt to even minor shifts in customer behaviour or market conditions.

This memorisation issue is a huge reason why a technically sound AI can still fail to deliver any real business value. It has learned the answers, not the method.
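The cramming-student problem is easy to reproduce in miniature. This toy Python sketch compares a "model" that simply memorises its training examples with one that learns a general rule, then flags any model whose accuracy on held-out data (examples it never saw in training) trails its training accuracy by more than 10 points. The order-size data, the hidden rule, and the 10-point gap are all made-up assumptions chosen to keep the example small.

```python
def accuracy(model, data):
    """Fraction of (input, label) pairs the model gets right."""
    return sum(model(x) == y for x, y in data) / len(data)


# Toy data: (order quantity, label). The hidden rule is "large" when quantity >= 100.
train = [(20, "small"), (45, "small"), (80, "small"),
         (120, "large"), (150, "large"), (300, "large")]
held_out = [(30, "small"), (90, "small"), (110, "large"), (250, "large")]

# Model A "crams": it memorises every training example and guesses "small" for anything new.
lookup = dict(train)


def memoriser(qty):
    return lookup.get(qty, "small")


# Model B learns a general rule: a cut-off halfway between the two classes it has seen.
threshold = (max(q for q, y in train if y == "small")
             + min(q for q, y in train if y == "large")) / 2


def threshold_model(qty):
    return "large" if qty >= threshold else "small"


def overfit_warning(model, max_gap=0.10):
    """Flag a model whose held-out accuracy trails its training accuracy by more than max_gap."""
    return accuracy(model, train) - accuracy(model, held_out) > max_gap


for name, model in [("memoriser", memoriser), ("threshold", threshold_model)]:
    print(name, accuracy(model, train), accuracy(model, held_out), overfit_warning(model))
```

Both models score perfectly in training; only the held-out comparison reveals that the memoriser has learned the answers, not the method.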

When the world changes but your AI doesn’t

Another critical type of model failure is known as model drift or concept drift. This happens when the statistical properties of the world change after your AI has been trained. The patterns and relationships the model learned are no longer valid, causing its predictions to become progressively more inaccurate over time.

Think of it like trying to navigate a modern city using a street directory from 2005. The main roads are probably still there, but you’ll be completely thrown by new motorways, changed intersections, and entire suburbs that didn’t exist back then. Your map is dangerously out of date.

An AI model is a snapshot of the world at the moment it was trained. It doesn’t automatically adapt as customer preferences, economic conditions, or supply chains evolve. Without regular updates, its performance will inevitably degrade.

A classic example would be an AI trained to predict product demand before 2020. It would have been rendered completely useless when global supply chains were turned upside down. The fundamental rules it had learned about logistics and consumer behaviour changed almost overnight.

This drift is often subtle, and it never really stops. Failing to monitor and retrain models continuously all but guarantees future failure. Drift isn’t the only sign of fragility, either: recent research highlights how even top-tier models can fail on simple real-world tasks, spiralling into errors when they encounter situations not perfectly covered in their training. They can invent code that doesn’t exist or get stuck in repetitive loops, showing just how fragile they can be outside of a controlled lab environment.
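One common way to put a number on drift is the Population Stability Index (PSI), which compares how an input was distributed at training time with how it is distributed in live data. The Python sketch below is a minimal version under a few assumptions: the bin cut points and order values are hypothetical, empty bins get a small floor so the logarithm is always defined, and the 0.25 alert level is a widely quoted rule of thumb rather than a standard. PSI only detects shifts in the inputs; it can’t tell you on its own whether the relationship the model learned still holds.

```python
import math
from bisect import bisect_right


def bin_shares(values, cut_points, floor=1e-4):
    """Share of values in each bin defined by the sorted cut points.

    Empty bins get a small floor so the logarithm below is always defined.
    """
    counts = [0] * (len(cut_points) + 1)
    for v in values:
        counts[bisect_right(cut_points, v)] += 1
    return [max(c / len(values), floor) for c in counts]


def psi(training_values, live_values, cut_points):
    """Population Stability Index: 0 means identical distributions; larger means more shift."""
    expected = bin_shares(training_values, cut_points)
    actual = bin_shares(live_values, cut_points)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


# Hypothetical order values seen at training time versus in production.
cuts = [25, 50, 75]
training_orders = [10, 20, 30, 40, 50, 60, 70, 80, 90, 100]
live_orders = [60, 70, 80, 90, 100, 110, 120, 130, 140, 150]

score = psi(training_orders, live_orders, cuts)
print(f"PSI = {score:.2f}", "-> investigate and consider retraining" if score > 0.25 else "-> stable")
```

Run on a schedule for each important input, a check like this turns "the world has changed" from a hunch into a measurable signal.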

These model-centric AI failures prove that good data is just the starting line. You also need a well-taught, adaptable model that can generalise its knowledge, not just memorise the past. Getting this right is a complex challenge, but it’s one that expert AI consultants are equipped to help you solve.

The challenge of weaving AI into your business

Building a brilliant AI model is one thing. Actually getting it to work smoothly with your existing business systems and processes? That’s where the real test begins. It’s at this critical point that many promising AI projects fall over, leading to costly integration and governance failures.

Think of it like buying a powerful, top-of-the-line engine for your car. On its own, it’s a masterpiece of engineering. But if you can’t connect it to the car’s wheels, transmission, or fuel tank, it’s utterly useless. It just sits there, an expensive monument to a great idea that never quite connected with reality.

This is precisely what happens when businesses focus only on the AI model without a concrete plan for how it will plug into the real world. You can have the most accurate predictive model imaginable, but if it can’t talk to your inventory system or your CRM, it can’t deliver a single dollar of value.

When new tech meets old systems

One of the biggest hurdles is purely technical. Many organisations operate on a patchwork of software systems cobbled together over years, sometimes even decades. A shiny new AI trying to communicate with a ten-year-old finance system is like trying to plug your latest smartphone into a rotary telephone. They speak fundamentally different languages.

This mismatch creates a serious bottleneck. Data gets trapped, processes grind to a halt, and the seamless automation you were sold on turns into a frustrating mess of manual workarounds. These are exactly the kinds of complex challenges where deep expertise in system integrations is non-negotiable for bridging the gap between old and new.

Beyond the tech stack, there’s the human element. Employees are often wary of new automated processes, especially if they don’t understand them or feel their roles are under threat. If the people who are meant to use the AI don’t trust it or find it adds more complexity to their day, they will simply work around it.

An AI tool is only as good as its adoption. If it creates more friction than it removes, your team will reject it, and the project will fail regardless of how brilliant the underlying technology is.

This resistance is a major reason why AI adoption has been slow across many industries. In fact, a recent report found that only 17% of Australian businesses have managed to implement AI organisation-wide. This is despite 51% of leaders using AI tools in their personal lives, revealing a huge gap between personal comfort and business execution.

Who’s responsible when the AI gets it wrong?

This brings us to the crucial, and often overlooked, topic of governance. When an AI makes a mistake, who is accountable? If an AI model incorrectly denies a customer a loan or orders the wrong amount of stock, who wears the consequences? Without clear rules and ownership, you’re creating a recipe for chaos.

Effective AI governance means establishing clear guidelines for:

- Decision ownership: Defining who is ultimately responsible for the AI’s outputs.

- Error handling: Creating a clear process for what to do when the AI makes a mistake.

- Monitoring and oversight: Assigning a team to keep a close watch on the AI’s performance to catch issues before they escalate.

Without this framework, even a perfectly integrated AI can quickly become a liability. A lack of clear governance is one of the most subtle but damaging types of AI failures. It can erode trust, create internal conflict, and expose the business to significant risk. Successfully plugging AI into your company requires far more than clever code; it demands a thoughtful strategy that considers your technology, your people, and your processes in unison.

Before we set out a full prevention plan, it’s helpful to have a high-level view of how to get ahead of these problems. A proactive approach is always better than a reactive one, especially when the stakes are high.

The following table lays out a simple but effective checklist. Think of it as a foundational guide for your team to start building the right habits and processes from day one.

Preventing AI failures: A practical checklist

| Failure Category | Key Prevention Strategy | Who Is Responsible |
|---|---|---|
| Data Failures | Establish a robust data validation and cleaning pipeline before any data touches the model. | Data Engineering & Data Science Teams |
| Model Failures | Implement continuous model monitoring (drift, bias, performance) with automated alerts. | MLOps & Data Science Teams |
| Integration Failures | Conduct thorough integration testing in a staging environment that mirrors production systems. | System Integrators & IT Operations |
| Governance Failures | Create a formal AI governance charter defining ownership, accountability, and escalation paths. | Executive Leadership & Legal/Compliance |
| Security Failures | Perform regular penetration testing and threat modelling specific to the AI system and its data. | Cybersecurity & MLOps Teams |

This checklist isn’t exhaustive, but it covers the core pillars of a resilient AI strategy. By assigning clear responsibilities and embedding these practices into your workflow, you can significantly reduce your exposure to the most common pitfalls.

A practical plan to prevent and fix AI failures

Knowing what can go wrong with an AI project is one thing. Knowing how to stop it from happening in the first place? That’s the real game changer. Moving from talking about problems to actively building solutions is how you flip the script on the high rate of AI failures. It all comes down to having a straightforward, actionable plan before a single line of code is ever written.

For any medium or large organisation, a reactive approach is just a recipe for wasted budgets and mounting frustration. The smarter path is a proactive, four-step process: prevention, detection, monitoring, and remediation. This approach turns a high-risk gamble into a calculated business strategy, essential for successfully launching and scaling your AI initiatives.

Start small to win big

The very first step in preventing AI failures is surprisingly simple: don’t bite off more than you can chew. So many ambitious projects implode because they try to solve a massive, business-wide problem all at once. A far better strategy is to start with a small, tightly defined problem where you can score a clear and measurable win.

Think of it this way. Instead of building an AI to overhaul your entire supply chain, start with one that just optimises the delivery routes for a single warehouse. By narrowing the scope, the benefits become immediately clear:

- Define success clearly: It’s far easier to measure the impact on one specific, isolated process.

- Manage data more easily: You only need a clean, relevant dataset for one task, not the entire company’s data lake.

- Build momentum: A quick, successful pilot project builds confidence and, crucially, secures buy-in for bigger initiatives down the line.

This method effectively de-risks the whole process. You learn invaluable lessons on a smaller, more manageable scale, making any mistakes far less costly and success much more probable.

Detect problems before they escalate

Once your AI model is deployed, you can’t just set it and forget it. The world changes constantly, and your AI’s performance can degrade over time without anyone noticing. This is where active detection becomes non-negotiable.

Detection is all about setting up automated alerts that serve as an early-warning system. You need to establish the key performance indicators (KPIs) for your model and have a system that flags you the moment its performance dips below an acceptable threshold.

This is like the check engine light in your car. You don’t wait for smoke to start pouring out of the bonnet to realise there’s a problem. The light gives you a heads-up that something needs attention before a catastrophic failure occurs.

This proactive stance allows you to catch issues like model drift or data quality degradation early, long before they have a chance to negatively impact business decisions or customer experiences.
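An early-warning system can be as simple as tracking accuracy over a rolling window of recent predictions. The Python sketch below assumes you eventually learn the true outcome for each prediction (for example, whether a forecast order actually arrived); the class name, the 80% threshold, and the window of 10 are hypothetical placeholders you would tune to your own KPIs.

```python
from collections import deque


class AccuracyMonitor:
    """Track accuracy over the most recent predictions and flag dips below a threshold."""

    def __init__(self, threshold=0.85, window=100):
        self.threshold = threshold
        self.outcomes = deque(maxlen=window)  # oldest outcomes drop off automatically

    def record(self, prediction, actual):
        self.outcomes.append(prediction == actual)
        return self.check()

    def rolling_accuracy(self):
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else None

    def check(self):
        # Only alert once the window is full, so a few early misses don't trigger it.
        if len(self.outcomes) < self.outcomes.maxlen:
            return None
        acc = self.rolling_accuracy()
        if acc < self.threshold:
            return f"ALERT: rolling accuracy {acc:.2f} below {self.threshold:.2f}"
        return None


monitor = AccuracyMonitor(threshold=0.80, window=10)
# Hypothetical stream: ten correct predictions, then three misses in a row.
for predicted, actual in [("A", "A")] * 10 + [("A", "B")] * 3:
    alert = monitor.record(predicted, actual)
    if alert:
        print(alert)  # in practice, route this to email, chat, or a paging tool
```

The alert fires only on the third miss, when the window drops to 70% accuracy, which is the "check engine light" behaviour you want: quiet during normal noise, loud when the trend is real.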

Monitor everything continuously

While detection is about automated alerts, monitoring is the essential human side of the equation. It involves ongoing, regular checks by your team to ensure the entire AI system, not just the model, is healthy. Think of it as a routine health check-up for your AI.

This process goes far beyond just looking at model accuracy. It means assessing the entire pipeline, from the raw data and the model itself through to its integration with other systems and the governance framework wrapped around it.

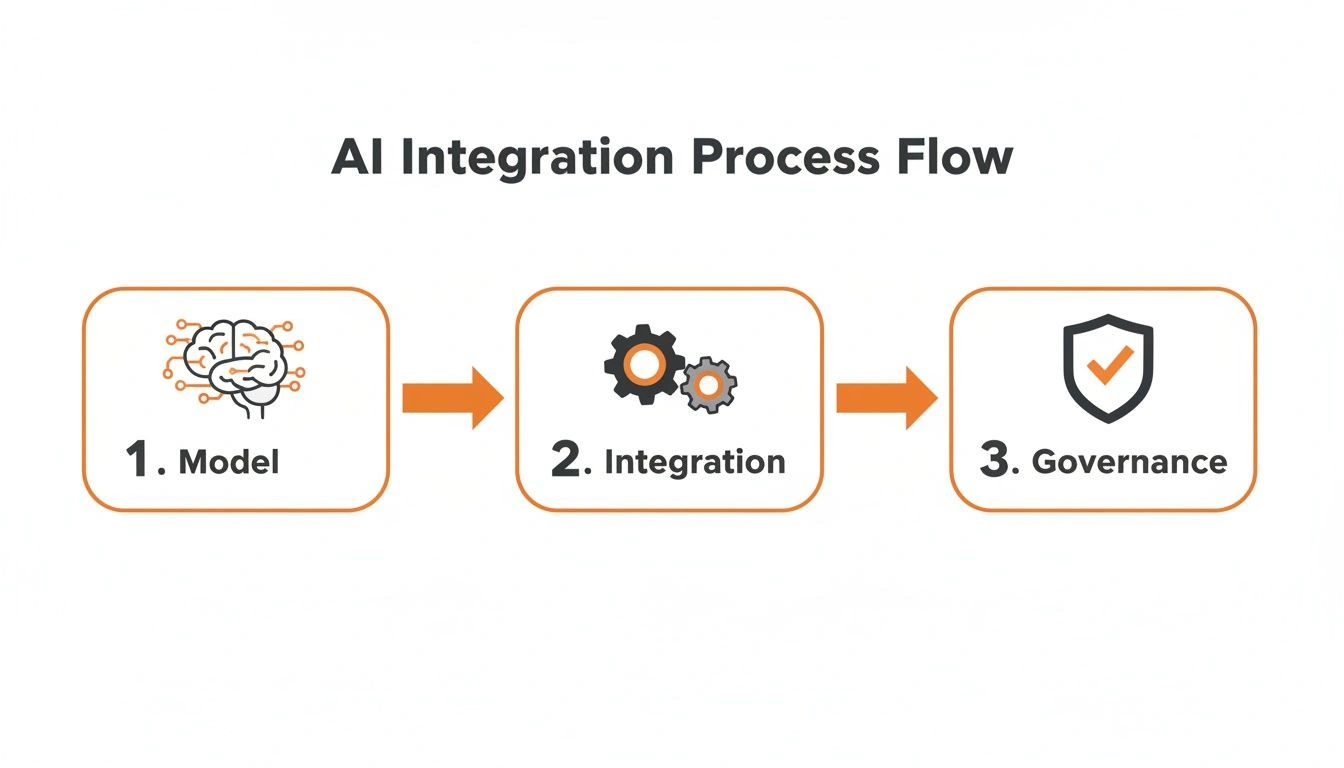

This infographic visualises the core stages of a successful AI integration process, highlighting the need to manage the model, its integration, and overall governance.

As the flow shows, technical success (the model) and operational success (integration) are both underpinned by strong governance, which acts as the ultimate safety net.

Regular monitoring ensures the AI’s outputs stay aligned with your business goals and ethical standards. To get ahead of potential issues and maintain control, it’s worth exploring established AI governance best practices.

Have a clear remediation plan

Finally, even with the best prevention and monitoring in the world, things can still go wrong. When an AI failure happens, you need a clear, pre-defined plan to fix it. This is your remediation strategy.

This plan should outline exactly who is responsible for what, the steps needed to diagnose the problem, and how to safely roll back the AI’s decisions if necessary. Having this documented and understood prevents panic and ensures a swift, organised response, which is critical for minimising the business impact.
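Part of a remediation plan is being able to return quickly to the last version of the model that was known to work, while recording who approved the change and why. The Python sketch below shows that idea with a hypothetical in-memory registry; the class, version names, and approver labels are assumptions for illustration, and a real deployment would use whatever release tooling your team already runs. Note that it reverts the model itself; reviewing decisions the faulty version already made remains a separate step in the plan.

```python
class ModelRegistry:
    """Hypothetical in-memory record of deployed model versions, newest last."""

    def __init__(self):
        self.history = []
        self.audit_log = []  # who rolled back what, and why

    def deploy(self, version):
        self.history.append(version)

    @property
    def live(self):
        return self.history[-1] if self.history else None

    def rollback(self, reason, approved_by):
        """Retire the live version and restore the previous one, recording the decision."""
        if len(self.history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        retired = self.history.pop()
        self.audit_log.append(
            {"retired": retired, "restored": self.live,
             "reason": reason, "approved_by": approved_by}
        )
        return self.live


registry = ModelRegistry()
registry.deploy("demand-forecast-v1")
registry.deploy("demand-forecast-v2")
registry.rollback(reason="rolling accuracy alert", approved_by="operations lead")
print(registry.live)  # demand-forecast-v1
```

Keeping the audit log alongside the rollback ties remediation back to governance: every reversal has a named owner and a documented reason.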

How expert guidance helps you avoid a crisis

Trying to navigate the complex world of AI on your own can feel a bit like sailing into a storm without a compass. You can see the destination, a more efficient, intelligent business, but the path is littered with hidden reefs and unpredictable currents. This is precisely where having an experienced partner makes all the difference, helping you sidestep the common AI failures we’ve just covered.

An expert partner isn’t just a technician; they’re a translator, turning complex technical jargon into clear business outcomes. They start by helping you pick the right battles, focusing on small, achievable wins that build momentum instead of trying to boil the ocean with a single, massive project. This strategic focus keeps you from pouring resources into projects that are too ambitious or poorly defined from the outset.

Building a strong foundation first

Any seasoned specialist will tell you that success starts with the foundations. Before a single line of code is written for a model, their first job is to get your data house in order. They’ll ensure your data is clean, organised, and actually fit for purpose. This step alone neutralises the single most common cause of AI project failure, making sure your investment is built on solid rock, not quicksand.

They also obsess over how the solution will fit into your existing operations. A brilliant AI model is completely useless if it can’t talk to your other systems or if your team doesn’t know how to use it. A good partner manages this entire integration, ensuring the new technology works with your people and processes, not against them.

A successful AI project is not just about having the best technology. It is about applying the right technology to the right problem in the right way, with a clear focus on delivering measurable business value from day one.

Ultimately, bringing in a strategic partner is all about managing risk. It’s about ensuring your investment actually pays off with improved efficiency, tangible growth, and a genuine competitive edge. It’s the difference between hoping for a good outcome and executing a clear plan to achieve one.

If you’re ready to make AI a true success story for your business, consider working with a team of AI consultants who can guide you every step of the way.

Common questions about AI failures

We get asked a lot about what can go wrong with AI projects. Here are some of the most common questions from business leaders trying to get it right.

What’s the single biggest reason AI projects fail?

It almost always comes back to the data. Poor data quality is, by a long shot, the number one project killer.

Think of it like building a house. Your data is the foundation. If that foundation is cracked, uneven, or made from substandard materials, it doesn’t matter how brilliant your architect is or how expensive your fixtures are, the whole structure is compromised. The same goes for AI. A sophisticated model fed with junk data will only produce junk results, leading to flawed predictions and a complete breakdown of trust in the system.

How do we actually know if our AI project is succeeding or failing?

You can only measure success against clear, pre-defined business goals. Forget the technical metrics for a second and ask yourself: what tangible problem are we trying to solve?

Success isn’t found in a model’s accuracy score; it’s measured by real-world impact. You need to tie the project to specific key performance indicators (KPIs) from day one.

- Are we aiming to cut customer service wait times by 20%?

- Is the objective to boost qualified sales leads by 15%?

- Do we need to reduce manual data entry mistakes by half?

An AI project has failed if it doesn’t meaningfully improve the business metric it was designed to address. It doesn’t matter how well the tech works in a controlled environment if it doesn’t deliver in the real world.
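Checking a goal like "cut wait times by 20%" comes down to comparing the before and after figures. The Python sketch below does that arithmetic for the three example goals above. The baseline and current figures are invented for illustration, and targets are written as signed fractions (-0.20 for a 20% cut, +0.15 for a 15% boost), which is an assumption of this sketch rather than a standard convention.

```python
def relative_change(baseline, current):
    """Change relative to the baseline: -0.25 means a 25% reduction, +0.10 a 10% increase."""
    return (current - baseline) / baseline


def target_met(baseline, current, target):
    """target is signed: -0.20 for 'cut by 20%', +0.15 for 'boost by 15%'."""
    change = relative_change(baseline, current)
    return change <= target if target < 0 else change >= target


# Hypothetical before-and-after figures for the three example goals above.
goals = {
    "service wait time (min)": (8.0, 6.0, -0.20),
    "qualified leads per month": (200, 220, +0.15),
    "data entry errors per week": (40, 20, -0.50),
}
for name, (before, after, target) in goals.items():
    change = relative_change(before, after)
    print(f"{name}: {change:+.0%} (target {target:+.0%}) met={target_met(before, after, target)}")
```

In this made-up example, the leads project improves by 10% but still misses its 15% target, which is exactly the kind of result a technical accuracy score would never reveal.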

Should we build our own AI or just buy an off-the-shelf solution?

This is a classic “build vs. buy” dilemma, and the right answer really depends on your unique situation, your specific needs, your budget, and the talent you have in-house.

An off-the-shelf solution is almost always faster to implement and more budget-friendly for common use cases, like a customer support chatbot or a standard data analytics tool. You’re getting a product that’s already been tested and refined.

On the other hand, if you’re tackling a highly specialised business problem or need deep integration with proprietary internal systems, a custom-built AI solution might be your only viable path. The risk of AI failure is often lower with proven, existing tools, but a successful custom build can deliver a far greater competitive advantage.

At Osher Digital, we help businesses work through these very challenges every day. Our team of AI consultants can work with you to build a robust strategy, ensuring your AI initiatives deliver real, measurable value from the start.

