A Business Guide to AI Regulation in Australia

Diving into AI is a bit like getting the keys to a high-performance car. You can feel the power and potential, but you also know you need to learn the rules of the road before you hit the accelerator. That’s pretty much what AI regulation is: the collection of guidelines and laws being drawn up to make sure these powerful tools are used safely, fairly, and openly for everyone.

Making Sense of AI Regulation for Your Business

It helps to think of the global conversation around AI regulation not as a series of heavy roadblocks, but more like marking the lines on a brand-new footy field. Everyone’s keen to play, but we all agree that rules are needed to keep the game fair and stop anyone from getting hurt. For businesses here in Australia, this means getting to grips with the new expectations for using AI-powered tools and automation.

The government’s goal isn’t to slow things down. Far from it. The aim is to build a reliable framework that gives businesses like yours the confidence to try new things. It’s all about creating a predictable environment where the expectations are clear, letting you build incredible solutions without the nagging worry of running into legal trouble later on.

Why Is Governance Suddenly Such a Big Deal?

The reason for all this focus is simple: AI is becoming incredibly capable. As soon as you use an AI system to make decisions that affect people, whether that’s screening job applicants, approving loans, or personalising customer offers, those decisions have to be fair, transparent, and easy to understand.

Good governance isn’t just a box-ticking exercise to avoid fines; it’s the foundation of trust. When your customers and employees see you’re using AI responsibly, they’re far more likely to get on board with the technology and, by extension, your business.

This is where a solid AI governance plan becomes your internal rulebook. It helps you get ahead of the tough questions before they turn into real problems:

- How do we decide which AI tools are right for us? A good plan helps you assess vendors and technologies based on their safety and reliability, not just their marketing hype.

- Is our customer data being handled properly? It ensures you’re in line with existing privacy laws like the Privacy Act, which have never been more critical.

- Who is responsible when an AI system makes a mistake? It defines clear lines of responsibility within your organisation, so everyone knows who owns what.

Building a Foundation for Smart AI Adoption

Getting your business ready for AI regulation doesn’t need to be a massive headache. It really starts with building a solid understanding of the core principles. Think of it as your safety briefing before a big expedition. You learn about the terrain, check your equipment, and go over best practices to make sure the journey is a success.

This guide is designed to be that briefing. We’ll walk you through Australia’s emerging approach to AI safety, show you how to identify the risk levels of different AI projects, and give you a practical framework for compliance. Our goal is to equip you to make smart, confident decisions about AI.

By getting comfortable with the new rules of the road, you can unlock the full potential of AI to drive your business forward, both securely and ethically. If you need an experienced guide for this new terrain, our team of AI consultants is here to help you build a responsible and highly effective AI strategy.

How Australia Is Approaching AI Safety

When you picture a government tackling AI regulation, you might imagine a team locked away, drafting a thousand-page rulebook from scratch. The reality of Australia’s plan is far simpler and, frankly, much more practical. The government isn’t trying to reinvent the wheel.

Instead, the core strategy is to adapt the robust laws we already have. It’s a bit like road safety. We don’t need a specific new law for “accidents caused by electric cars” because our existing rules already cover unsafe driving, regardless of what powers the vehicle. Australia is taking that same commonsense approach to AI.

Building on a Solid Legal Foundation

Rather than getting bogged down in creating a complex new set of AI-specific legislation, the focus is on applying our current legal frameworks. This includes powerful laws that Australian businesses are already well-acquainted with, such as:

- The Privacy Act: This is a major one. If your AI tool collects, processes, or uses personal information, your handling of that information must comply with the Australian Privacy Principles, just as it would for any other system.

- Australian Consumer Law: This law is all about protecting customers from misleading claims or unfair practices. If you say your AI can perform a certain task, it has to deliver on that promise safely and reliably.

- Competition Laws: These frameworks are in place to ensure a fair marketplace, preventing businesses from using AI to build a monopoly or gain an unfair advantage that harms consumers or other businesses.

This approach is good news for businesses because it means you aren’t stepping into a completely alien legal world. You’re largely operating in a predictable environment, building on compliance measures you should already have in place.

The central idea is that good AI regulation should feel like a natural extension of good business practice. It’s all about accountability, fairness, and transparency, principles already at the heart of Australian commercial law.

What this really means is that your first step isn’t memorising new AI jargon. It’s about ensuring your current operations, especially around data handling and consumer rights, are fully compliant with existing laws.

A Risk-Based Approach, Not One-Size-Fits-All

Of course, not all AI tools carry the same level of consequence. An AI that suggests which movie to watch next is worlds apart from one that helps a doctor make a medical diagnosis. The government gets this, which is why it’s adopting a risk-based approach.

Think of it like adjusting your safety gear for different activities. You’d wear a helmet for a casual bike ride but a full harness and rope for a serious rock climb. In the same way, AI regulation in Australia will apply different levels of scrutiny depending on the application.

A low-risk AI, like a chatbot answering basic customer questions, will face minimal regulatory oversight. On the other hand, a high-risk AI used in a critical sector like finance or healthcare will have to meet much stricter requirements to prove it is safe, accurate, and fair. This tiered system allows innovation to flourish in low-risk areas while ensuring the highest standards are applied where they matter most.

The New AI Watchdog on the Block

To support this strategy, the Australian Government is establishing a dedicated body to keep an eye on things. As part of its National AI Plan, the government has committed AUD 29.9 million to set up the AI Safety Institute by early 2026. This move signals a clear shift away from earlier talk of mandatory rules for high-risk AI, reinforcing the preference for using our existing legal frameworks. You can read more about Australia’s AI policy roadmap and its key initiatives.

You can think of the AI Safety Institute as an independent testing agency, much like ANCAP provides safety ratings for cars. Its job won’t be to write the laws, but to test AI models, monitor for emerging risks, and share its findings with both the public and regulators. For your business, this institute will become a key source of insight into AI capabilities and potential harms, helping you make smarter, safer technology choices.

Identifying Your AI Risk Level

Not all AI is created equal, and regulators know this better than anyone. Figuring out the risk level attached to your AI tools is the first real step toward smart, efficient compliance. It’s the difference between applying heavy-handed governance where it isn’t needed and freeing your team up to innovate.

Think of it like a traffic light system. Some AI applications get a clear green light for go. Others need a more cautious, yellow-light approach. And some require a full red-light stop-and-review before they ever see the light of day. Knowing where your projects sit on this spectrum is crucial. It lets you move fast with low-risk tools while managing the high-stakes applications responsibly.

To help you get a clearer picture, here’s a simplified breakdown of the risk categories, along with some practical business examples to help you classify your own AI projects.

AI Application Risk Levels Explained

| Risk Level | Description and Analogy | Business Example |
|---|---|---|
| Low Risk | The Helpful Assistant. Think of this AI like a calculator. It speeds up a task and is highly reliable, but it doesn’t make independent, life-altering decisions. | An inventory management system that predicts stock needs based on sales data, or a simple chatbot answering “What are your opening hours?” |
| Medium Risk | The Co-pilot. This AI provides powerful recommendations but needs a human to take the controls for the final decision. It suggests a flight path, but the pilot makes the final call. | An AI tool that screens CVs to create a shortlist for a hiring manager, who then decides who to interview. |
| High Risk | The Autonomous Surgeon. This AI operates in a critical field where a mistake has severe consequences for a person’s wellbeing, rights, or safety. It requires immense precision and oversight. | An AI making automated credit scoring decisions, a diagnostic tool analysing medical scans for cancer, or a self-driving vehicle. |

Understanding these distinctions is the foundation of a solid AI governance strategy, ensuring you apply the right level of rigour to the right tools.
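If your team tracks its AI tools in an inventory, the traffic-light triage in the table can be sketched as a simple rule. This is a hypothetical illustration, not a regulatory test. The field names (`affects_individuals`, `severe_if_wrong`, `human_in_the_loop`) are our own simplifying assumptions, based on the three categories above. So is the rule that any tool with severe consequences counts as high risk.

```python
from dataclasses import dataclass
from enum import Enum


class RiskLevel(Enum):
    LOW = "green"      # the helpful assistant
    MEDIUM = "yellow"  # the co-pilot: a person makes the final call
    HIGH = "red"       # errors could severely affect wellbeing, rights or safety


@dataclass
class AITool:
    # Hypothetical inventory record; the field names are illustrative assumptions.
    name: str
    affects_individuals: bool  # does its output feed a decision about a person?
    severe_if_wrong: bool      # could an error harm safety, rights or access to essential services?
    human_in_the_loop: bool    # can a person review and override the output?


def classify(tool: AITool) -> RiskLevel:
    """First-pass triage mirroring the traffic-light table. Not a legal test."""
    if tool.severe_if_wrong:
        return RiskLevel.HIGH
    if tool.affects_individuals:
        return RiskLevel.MEDIUM
    return RiskLevel.LOW


def needs_follow_up(tool: AITool) -> bool:
    """Flag high-risk tools, and medium-risk tools that lack human review."""
    level = classify(tool)
    return level is RiskLevel.HIGH or (level is RiskLevel.MEDIUM and not tool.human_in_the_loop)


inventory = [
    AITool("Opening-hours chatbot", affects_individuals=False, severe_if_wrong=False, human_in_the_loop=False),
    AITool("CV shortlisting", affects_individuals=True, severe_if_wrong=False, human_in_the_loop=True),
    AITool("Automated credit scoring", affects_individuals=True, severe_if_wrong=True, human_in_the_loop=False),
]

for tool in inventory:
    print(f"{tool.name}: {classify(tool).value}, follow-up needed: {needs_follow_up(tool)}")
```

Run as-is, this labels the three examples green, yellow and red. Only the credit-scoring tool is flagged for follow-up, because the CV tool already has a hiring manager in the loop. Treat the output as a conversation starter for your review, not a final classification.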

Green Light: Low-Risk AI

Let’s start with the easy one: low-risk AI. These are the tools that have a minimal, almost negligible, impact on people’s lives or fundamental rights. You can think of them as smart assistants that automate straightforward tasks but don’t wade into significant, life-altering decisions.

For the most part, these systems are safe to use with standard business oversight.

- A chatbot on your website: An AI that handles basic customer queries like “Where can I find your returns policy?” is a classic example.

- Inventory management software: An AI predicting stock levels to optimise warehouse ordering is another.

- Simple data sorting tools: Systems that automatically categorise incoming emails or files based on keywords fall squarely in this camp.

With these green-light applications, your main compliance focus should be on transparency. It’s simply good practice to let users know they’re interacting with an AI and to ensure the tool works as advertised. Heavy-handed regulation is unlikely to apply here.

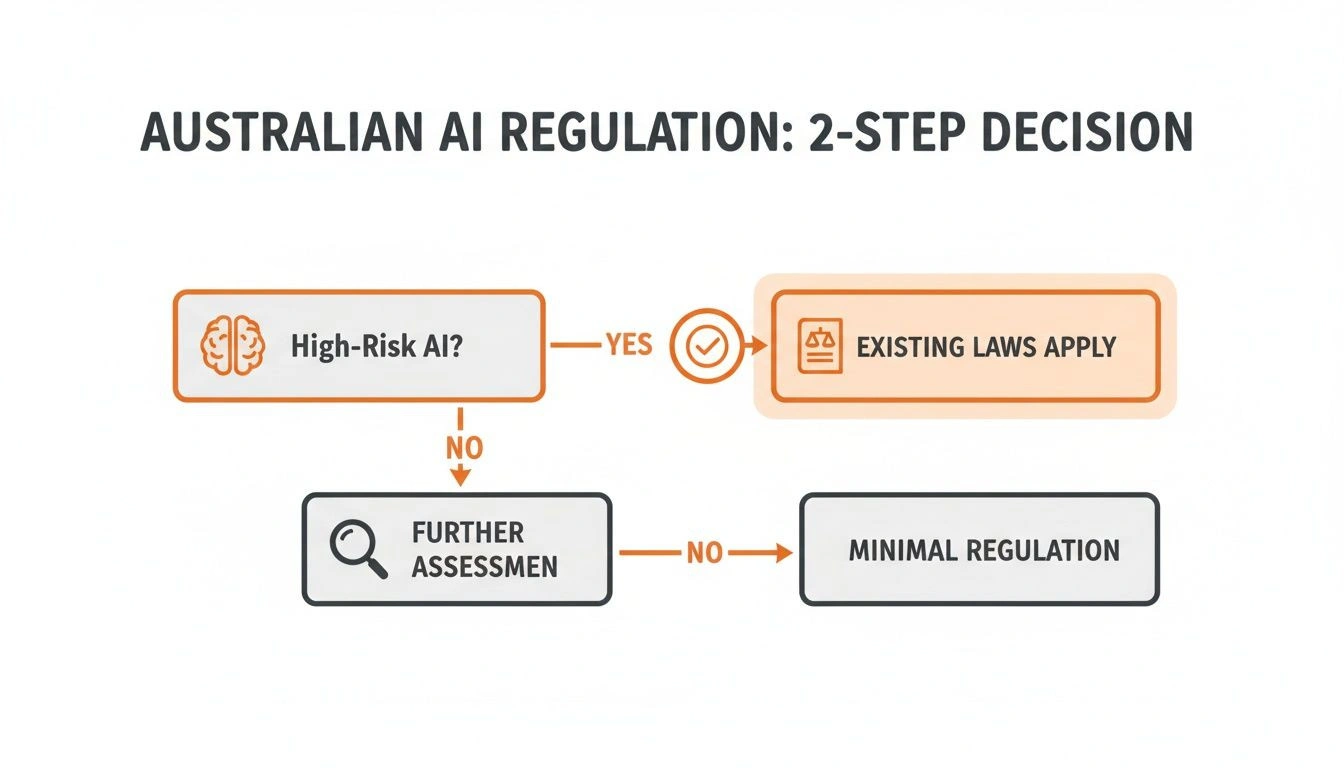

The flowchart below shows the simple, two-step decision process that reflects Australia’s current thinking on AI regulation.

As you can see, the main regulatory concern kicks in when AI is classified as high-risk, which is then managed through existing legal frameworks rather than entirely new laws.

Yellow Light: Medium-Risk AI

Next up is the yellow-light category. This is where the stakes get higher and a mistake could have a meaningful, negative consequence for an individual. These tools are often designed to assist human decision-making, not replace it entirely.

This is where you need to tread more carefully.

With medium-risk AI, the key is ensuring there is a “human in the loop”. This means having a person who can review, question, and ultimately override the AI’s recommendation. This oversight is absolutely critical for maintaining fairness and accountability.

A prime example is an AI tool used to screen job applications. It might filter thousands of CVs down to a manageable shortlist, but the final decision to interview or hire someone must rest with a person. In the same vein, an AI that flags potentially fraudulent financial transactions for a human analyst to review would fall into this category.

For these systems, your governance needs to be much more robust. This means conducting thorough testing for bias, making sure you’re transparent about how the AI works, and establishing crystal-clear processes for human review.

Red Light: High-Risk AI

Finally, we arrive at red-light, or high-risk, AI. These are the systems used in critical situations where an error could lead to severe consequences, directly affecting someone’s safety, rights, or access to essential services. Unsurprisingly, these applications will face the highest level of regulatory scrutiny.

Examples of high-risk AI are pretty clear-cut:

- Medical diagnostic tools: An AI that analyses medical scans to detect diseases.

- Autonomous vehicles: Self-driving cars operating on public roads.

- Credit scoring systems: An AI that makes automated, final decisions on loan approvals.

If your business is exploring high-risk AI, you’re stepping into a domain that demands rigorous governance from day one. This level of risk requires comprehensive assessments, meticulous documentation, and the ability to prove your AI is safe, fair, and reliable long before it goes live. A structured gap assessment can help you accurately map out your specific risks. Many businesses venturing into these advanced systems also turn to custom AI development to ensure the final product is built from the ground up to meet strict safety and compliance standards.

Building a Practical AI Compliance Framework

Understanding the different AI risk levels is a great start, but the real work begins when you turn that knowledge into action. This is where you build a practical compliance framework, a term that sounds far more intimidating than it actually is.

Think of it less like drafting a dense legal document and more like creating a pre-flight checklist for every AI project you launch. It’s all about establishing clear, repeatable processes that give your teams the confidence to innovate without accidentally steering into dangerous territory. A solid framework isn’t about restriction; it’s about putting up the guardrails that enable speed and scale.

Start with a Clear Internal AI Policy

Your first move should be to establish a straightforward internal policy on using AI. This document doesn’t need to be a hundred pages long. Its main job is to set the ground rules and answer the most pressing questions your people will have.

This policy becomes your organisation’s single source of truth for all things AI. It defines what’s acceptable, who’s responsible for oversight, and how new tools get introduced. A good policy will usually cover these core areas:

- Permitted Uses: Clearly state which types of AI tools are approved for general use (like grammar checkers) and which require a formal review (like a tool that analyses customer sentiment).

- Data Handling Rules: Reiterate your company’s absolute commitment to data privacy. Be explicit: no sensitive or personal customer information should ever be fed into public AI models.

- Accountability: Assign clear ownership. Who is responsible for reviewing and approving new AI applications? Is it a line manager, a designated AI lead, or a dedicated committee?

An internal guide like this demystifies AI for your entire organisation, turning abstract principles into concrete, everyday actions.

Establish a Review Process for New Tools

With a policy in place, you now need a process for evaluating new AI tools before they’re widely adopted. This is your quality control step. It’s like getting a mechanic to inspect a used car before you buy it. You’re looking under the bonnet to make sure everything is in order.

A simple review could involve a checklist that scores any new AI vendor or application against key criteria. For any business building out its AI compliance, it’s also critical to understand how to prevent privacy fines, both under Australia’s Privacy Act and under overseas regulations like GDPR and CCPA, as this knowledge directly shapes how you assess potential vendors.

Your review process is your primary defence against unforeseen risks. It ensures that any new technology lines up with your company’s ethical standards and legal obligations before it becomes deeply embedded in your workflows.

When you’re assessing a third-party AI vendor, you have to ask the right questions and not just take their marketing claims at face value. A thorough vetting should include:

- Data Security: How do they protect your data? Is it encrypted both in transit and at rest? Where is it physically stored?

- Model Transparency: Can they explain how their AI arrives at its decisions? Is there a risk of inherent bias baked into their training data?

- Compliance: Do they adhere to Australian privacy laws and other regulations relevant to your industry?

This proactive approach to vendor management is a true cornerstone of responsible AI governance.
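To make vetting repeatable, you can capture the checklist as data and score every vendor the same way. The sketch below is a hypothetical illustration. The questions paraphrase the vetting criteria above. The `must_pass` flags and the four-out-of-five approval threshold (`min_passed`) are our own assumptions, so adjust them to suit your risk appetite.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    question: str
    must_pass: bool  # a "no" here blocks approval regardless of the overall tally


# Hypothetical checklist paraphrasing the vetting questions; flags are assumptions.
CHECKLIST = [
    Criterion("Is our data encrypted in transit and at rest?", must_pass=True),
    Criterion("Do we know where our data is physically stored?", must_pass=True),
    Criterion("Can the vendor explain how its AI reaches decisions?", must_pass=False),
    Criterion("Has the vendor checked its training data for bias?", must_pass=False),
    Criterion("Does the vendor comply with Australian privacy law?", must_pass=True),
]


def review_vendor(answers: dict[str, bool], min_passed: int = 4) -> tuple[bool, int, list[str]]:
    """Tally 'yes' answers; unanswered questions are treated as 'no'."""
    gaps = [c.question for c in CHECKLIST if not answers.get(c.question, False)]
    passed = len(CHECKLIST) - len(gaps)
    blocked = any(c.must_pass and c.question in gaps for c in CHECKLIST)
    return (not blocked and passed >= min_passed), passed, gaps


# Example: a vendor that answers "yes" to everything except the bias question.
answers = {c.question: True for c in CHECKLIST}
answers["Has the vendor checked its training data for bias?"] = False

approved, passed, gaps = review_vendor(answers)
print(f"Approved: {approved} ({passed}/{len(CHECKLIST)} criteria met)")
for gap in gaps:
    print(f"Follow up: {gap}")
```

In this example the vendor is approved with 4 of 5 criteria met, and the bias question is listed for follow-up. If the same vendor couldn’t say where your data is stored, it would be blocked even with four "yes" answers. That is the point of marking some questions as must-pass rather than relying on a single overall score.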

Conduct Regular Audits and Training

Finally, an AI compliance framework is not a ‘set and forget’ document. It’s a living system that has to adapt as technology and regulations evolve. To keep it effective, you need regular check-ins and ongoing team training.

Schedule periodic reviews, perhaps quarterly or twice a year, to assess how your AI tools are actually being used. Are people following the policy? Have any new risks popped up? These audits help you spot small issues before they snowball into major problems.

Just as important is education. Host short, informal training sessions to keep your team up to date on AI developments and to reinforce your internal guidelines. The goal is to build a culture of awareness where everyone understands their role in using AI responsibly. Navigating all this can be tricky, which is where expert AI consulting can provide the specific guidance needed to build a framework that is both robust and practical.

This continuous cycle of review and education ensures your framework remains a valuable asset, helping you scale your AI initiatives with confidence.

Why Customer Trust in AI Is Your Competitive Edge

In business, a powerful new tool is only as good as the trust people have in it. Artificial intelligence is no different. You can have the most advanced system on the planet, but if your customers and employees are wary of it, its potential will never be fully realised. This is precisely where the conversation around AI regulation stops being a legal debate and starts being a direct factor in your business success.

AI regulation isn’t some abstract concept playing out in government offices; it’s about public perception and confidence. When people hear about AI, they often think about its risks just as much as its rewards. This isn’t just a vague feeling, either: the data shows a clear and growing demand for oversight. Getting this right is about more than just avoiding penalties; it’s about building a brand that people genuinely believe in.

The Public Is Calling for Guardrails

Recent surveys paint an incredibly clear picture of public sentiment here in Australia. People are genuinely excited about the possibilities of AI, but they are also deeply concerned about its potential downsides. And this isn’t a small minority expressing caution. It’s a significant majority of the population asking for stronger rules of the road.

A KPMG survey, for example, highlighted this public mood quite starkly. It found that a massive 77% of Australians agree that specific AI regulation is necessary to ensure the technology is used safely. This sentiment is amplified by the fact that only 30% feel that our current laws and safeguards are adequate to protect them from potential AI-related harm. You can learn more about these insights into Australia’s AI regulatory climate and what they mean for businesses.

These aren’t just abstract fears. The public has specific concerns, with 87% demanding laws to combat AI-generated misinformation and 80% favouring oversight from government bodies. These figures send an unmistakable message to every business using AI: your customers are watching, and they expect you to act responsibly.

Turning Compliance into a Competitive Advantage

This is where your opportunity lies. Instead of viewing AI regulation as another compliance hurdle to clear, you can reframe it as a roadmap for building trust. When you can genuinely show your customers that your AI practices are transparent, fair, and secure, you create a powerful point of difference.

Trust is the currency of the modern economy. A proactive and ethical approach to AI isn’t just a compliance task; it’s one of the most effective brand-building strategies you can adopt today.

Being transparent about how you use AI can completely transform customer relationships. Imagine two companies using AI to personalise marketing. One does it silently in the background, leaving customers feeling a bit uneasy and manipulated. The other openly explains that it uses AI to offer more relevant suggestions, gives customers control over their data, and guarantees a human is always available to help. Which company are you more likely to trust and stay loyal to? The answer is obvious.

Future-Proofing Your Business Operations

Aligning your AI strategy with community expectations is also just smart business. It’s about future-proofing your operations. The regulatory environment is still taking shape, but the direction of travel is clear. Stricter rules around data privacy, algorithmic transparency, and accountability are on the horizon.

By building a strong ethical framework now, you put your business on the front foot. You’ll be prepared for future changes in AI regulation, rather than having to scramble to catch up later. This proactive stance reduces risk and creates a much more stable foundation for long-term growth.

Consider these practical benefits:

- Stronger Brand Reputation: Becoming known as a company that uses AI ethically builds a positive reputation that attracts both customers and top talent.

- Increased Customer Loyalty: When customers trust you, they are more likely to stick with you, even if a competitor offers a slightly lower price.

- Smoother AI Adoption: Your own team is far more likely to embrace new AI tools when they trust that the technology is being implemented fairly and for their benefit, not to replace them without thought.

Ultimately, winning with AI isn’t just about having the smartest technology. It’s about being the smartest, most responsible user of that technology. If you need help turning these principles into a practical strategy, our team of AI consultants can guide you in building a framework that fosters trust and drives real results.

Your Actionable AI Governance Roadmap

So, how do we put all this theory into practice? Building an AI governance framework isn’t about memorising legal texts. It’s about establishing a clear, repeatable process that your team can actually follow. Think of it as a recipe that ensures every AI tool and agent you use is built on a solid foundation of trust and safety from the very beginning.

This is your practical guide to modernising how you work without tripping over regulatory wires. As your automation partner, our goal is to see you get those real efficiency gains while building an AI ecosystem that’s sustainable and trustworthy. A clear governance roadmap is what gets you there.

Your Four-Step AI Governance Plan

Consider this your blueprint for getting started. It’s a straightforward sequence designed to be adapted to your specific business needs, making sure you’ve covered the essentials before you start scaling your AI initiatives.

- Initial Assessment and Inventory: First things first, you need to know what you’re working with. You might be surprised to find out where AI is already being used in your business. Create a simple inventory of all AI tools, who uses them, and what they’re used for. This gives you a clear baseline to work from.

- Develop a Clear Use Policy: Now, draft a straightforward document outlining the do’s and don’ts of AI within your company. Focus on the big-ticket items: data privacy (no customer PII in public tools!), transparency in decision-making, and clear lines of accountability.

- Create a Vetting Process: For any new AI tool under consideration, you need a quick quality control checklist. This should include basic questions about the vendor’s data security practices, the potential for algorithmic bias, and their overall reliability. This isn’t about slowing things down; it’s about making smarter choices.

- Implement and Educate: Once your policy is set, it’s time to roll it out and get your team on board. The aim here is to build a shared understanding and culture of responsible AI use right across the organisation.

This roadmap isn’t about adding red tape; it’s about removing risk so you can innovate with confidence. It makes your approach to AI predictable and transparent, aligning your operations with emerging AI regulation.

Putting these steps into action is fundamental for any business serious about scaling its use of AI. The process is especially critical for tasks involving automated data processing, where the stakes for accuracy and compliance are highest. If you need a hand navigating this landscape, our team of AI consultants is here to guide you every step of the way.

Frequently Asked Questions About AI Regulation

Trying to make sense of AI regulation can feel a bit like reading a map while driving. To help clear things up, we’ve put together answers to some of the most common questions we’re hearing from Australian businesses.

Should My Business Stop Using AI Until Regulations Are Final?

Absolutely not. You wouldn’t stop driving just because new road rules might be introduced next year, would you? The Australian government’s goal is to encourage innovation, not stifle it. They’re focused on adapting existing laws rather than creating a whole new, restrictive rulebook from scratch.

The key is to act responsibly now. Focus on being transparent about how you use AI, make sure your data handling practices are iron-clad under the Privacy Act, and start developing your own internal AI policies. This kind of proactive approach shows you’re on the front foot and ready for whatever comes next.

How Does AI Regulation Apply to Tools from Overseas Companies?

This is a great question. Even if you’re using an AI tool from a company based in Europe or the US, your business is still operating on Australian soil and has to play by Australian rules. Your obligations under our privacy and consumer protection laws don’t just disappear because the tech is from overseas.

This is why vetting any third-party provider is so critical. You need to be asking tough questions about their data security, how their models are trained, and what their approach to risk management looks like. An independent partner can help you assess these tools objectively and make sure they’re a safe bet for your business.

What Is the Most Important First Step to Prepare?

The single most important step you can take right now is to build a culture of responsible AI within your team. And the best way to get that started is by creating a clear, internal AI governance policy.

This document is more than just a compliance checklist; it becomes your company’s North Star for AI. It’s about creating a shared understanding of how to use these powerful tools thoughtfully and safely.

Your policy should lay out acceptable uses for AI, establish a simple review process for new projects based on their risk level, and assign clear responsibilities for oversight. Taking this step now doesn’t just prepare you for future regulation; it builds immediate trust with both your team and your customers.

At Osher Digital, we help businesses build robust frameworks for responsible and effective AI adoption. If you need a partner to help you navigate this new terrain, our team of AI consulting experts is here to guide you.
