10 Best Data Integration Solutions in 2025

Streamline your data workflows with these top 10 data integration solutions. Explore the best platforms for ETL, ELT, and data management in 2025.

Connecting the Dots: Why Data Integration Matters

For Australian businesses seeking growth, effective data integration solutions are crucial. Disparate data sources create inefficiencies. This listicle presents ten leading data integration solutions, from Osher Digital and Informatica PowerCenter to Matillion ETL, to help your organisation unify data, improve insights, and optimise decision-making. Discover the best tool to modernise your workflows and unlock your data’s potential, whether you’re a start-up or a large enterprise.

1. Osher Digital

Osher Digital positions itself as a strategic partner for businesses wanting to transform their operations through sophisticated process automation and AI-driven data integration solutions. Their focus on medium to large enterprises highlights their capacity to handle complex integrations across various departments, from sales and marketing to finance and data warehousing. This makes them a compelling option for organisations grappling with data silos and seeking to streamline workflows for enhanced decision-making. Osher Digital’s promise lies in unlocking hidden efficiencies by connecting disparate systems and automating repetitive tasks, ultimately driving productivity gains and cost savings. This resonates strongly with businesses striving for operational agility and scalable growth in today’s competitive landscape.

One of Osher Digital’s key strengths is its vendor-agnostic approach to data integration solutions. Rather than being tied to a specific platform, they assess each client’s unique needs and recommend the optimal technologies to achieve their objectives. This flexibility ensures that businesses receive tailored solutions that integrate seamlessly with existing infrastructure, minimising disruption and maximising return on investment. Their services extend across a broad spectrum, encompassing custom AI agent development, sales automation, robotic process automation, automated data processing, and custom system integrations. This comprehensive offering caters to a variety of business needs, addressing inefficiencies that impede growth, compromise accuracy, and escalate costs.

A significant differentiator for Osher Digital is its commitment to client satisfaction, backed by a 30-day money-back guarantee. This signals confidence in their ability to deliver tangible results. The company reports a typical 30% productivity increase, 20% cost savings, and a 90% reduction in manual work for its clients, and testimonials from senior industry leaders describe manual workloads being reduced by up to 80% through automation. This positions Osher Digital not just as a technology provider, but as a strategic enabler of business transformation.

Osher Digital adopts a holistic approach to implementation, providing end-to-end support from initial process audits and tailored workflow design to rigorous quality assurance, ongoing training, and maintenance. This comprehensive support structure ensures smooth transitions and empowers clients to fully leverage the benefits of their new data integration solutions. However, potential clients should be aware that implementation timelines can vary significantly depending on the complexity of their existing processes, potentially ranging from several weeks to several months.

While Osher Digital’s comprehensive services and proven track record make it a strong contender in the data integration solutions market, the lack of publicly available pricing information is a potential drawback. Prospective clients must engage in a personalised consultation to obtain a quote, which may deter some businesses seeking transparent upfront pricing. This lack of transparency can make it difficult to compare their offerings directly with competitors. However, given their focus on tailored solutions and the potential for significant ROI, the consultation process may be worthwhile for organisations seeking bespoke data integration solutions.

Implementation Tips for Osher Digital Solutions:

- Thorough Process Audit: Collaborate closely with Osher Digital’s consultants during the initial process audit to identify key pain points and opportunities for automation.

- Clear Communication: Maintain open communication throughout the implementation process to ensure alignment between your business objectives and the proposed solutions.

- Change Management: Prepare your teams for the changes associated with new workflows and automation through adequate training and support.

- Ongoing Monitoring: Regularly monitor the performance of your integrated systems and leverage Osher Digital’s ongoing support to address any issues or optimise workflows.

Osher Digital’s website (https://osher.com.au) provides further details and case studies showcasing their successful data integration projects. For Australian businesses seeking a comprehensive and tailored approach to data integration, Osher Digital warrants serious consideration. They offer a strong combination of technical expertise, a vendor-agnostic approach, and a commitment to client success, ultimately enabling organisations to achieve significant operational improvements and drive sustainable growth.

2. Informatica PowerCenter

Informatica PowerCenter is a stalwart in the world of enterprise data integration solutions, offering a robust and comprehensive platform for Extract, Transform, and Load (ETL) processes. Its strength lies in handling complex data integration scenarios, making it a popular choice for large organisations across various industries in Australia and globally. For businesses dealing with high volumes of data from disparate sources, PowerCenter provides the tools and functionalities needed to consolidate, cleanse, and transform data into actionable insights. This positions it as a powerful solution for companies looking to modernise their data management strategies and gain a competitive edge. It’s particularly relevant for Australian businesses seeking to streamline operations, enhance decision-making, and comply with increasingly stringent data governance regulations.

PowerCenter’s core functionality revolves around its visual mapping designer, allowing developers to create complex ETL workflows without extensive coding. This drag-and-drop interface simplifies the process of connecting to various data sources, defining transformation rules, and loading data into target systems. Support for over 500 data sources and targets, including databases, cloud applications, and file systems, makes it highly versatile for integrating virtually any data type. For Australian enterprises facing the challenge of integrating legacy systems with modern cloud platforms, this broad connectivity is invaluable.

Advanced data transformation capabilities, such as data cleansing, aggregation, and validation, ensure data quality and consistency throughout the integration process. PowerCenter supports both batch and real-time processing, catering to diverse data integration needs. Real-time integration is crucial for applications requiring up-to-the-minute data, such as fraud detection and supply chain management, while batch processing is ideal for large-scale data warehousing and reporting. This flexibility makes PowerCenter a suitable data integration solution for a wide range of use cases within Australian organisations.
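PowerCenter builds transformations visually, so the following is not PowerCenter code. As a tool-neutral sketch only, the Python below shows what a cleanse, validate and aggregate step does inside an ETL flow: rows are standardised, checked against simple rules, and then either rejected or summed by state. The order rows, field names and validation rules are hypothetical.

```python
from collections import defaultdict

# Hypothetical raw order rows, as they might arrive from a source system.
RAW_ORDERS = [
    {"order_id": "A1", "state": " nsw ", "amount": "120.50"},
    {"order_id": "A2", "state": "VIC", "amount": "80"},
    {"order_id": "A3", "state": "Nsw", "amount": "not-a-number"},
    {"order_id": "A4", "state": "", "amount": "40"},
]

VALID_STATES = {"NSW", "VIC", "QLD", "WA", "SA", "TAS", "ACT", "NT"}


def cleanse(row):
    """Trim and upper-case the state; parse the amount (None if unparseable)."""
    try:
        amount = float(row["amount"])
    except ValueError:
        amount = None
    return {"order_id": row["order_id"], "state": row["state"].strip().upper(), "amount": amount}


def validate(row):
    """Return the list of rule violations for a cleansed row (empty means valid)."""
    errors = []
    if row["state"] not in VALID_STATES:
        errors.append("unknown state")
    if row["amount"] is None or row["amount"] < 0:
        errors.append("bad amount")
    return errors


def transform(raw_rows):
    """Cleanse and validate each row, then aggregate valid amounts by state."""
    totals = defaultdict(float)
    rejects = []
    for raw in raw_rows:
        row = cleanse(raw)
        errors = validate(row)
        if errors:
            rejects.append((row["order_id"], errors))
        else:
            totals[row["state"]] += row["amount"]
    return dict(totals), rejects


totals, rejects = transform(RAW_ORDERS)
print(totals)   # {'NSW': 120.5, 'VIC': 80.0}
print(rejects)  # [('A3', ['bad amount']), ('A4', ['unknown state'])]
```

Routing failing rows to a reject list, rather than dropping them silently, keeps them available for review and correction.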

Furthermore, Informatica PowerCenter provides robust data governance and security features, enabling organisations to maintain control over their data assets and comply with regulatory requirements. Metadata management capabilities provide a centralised view of data lineage and transformations, facilitating data discovery and impact analysis. These features are increasingly important for Australian businesses navigating the complexities of data privacy regulations.
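Impact analysis over lineage metadata is essentially a graph traversal: starting from a changed column, follow every derivation edge downstream. The sketch below assumes a hypothetical, hand-written lineage map; PowerCenter's metadata repository stores this information in its own format, which the example does not attempt to reproduce.

```python
from collections import deque

# Hypothetical column-level lineage: each column maps to the columns derived from it.
LINEAGE = {
    "crm.customers.email": ["staging.customers.email_clean"],
    "staging.customers.email_clean": ["dw.dim_customer.email", "dw.dim_customer.customer_key"],
    "dw.dim_customer.customer_key": ["dw.fact_sales.customer_key"],
    "erp.orders.amount": ["dw.fact_sales.amount"],
}


def downstream_impact(column, lineage):
    """Breadth-first walk returning every column derived, directly or indirectly, from `column`."""
    seen = set()
    queue = deque([column])
    while queue:
        current = queue.popleft()
        for child in lineage.get(current, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return sorted(seen)


print(downstream_impact("crm.customers.email", LINEAGE))
# ['dw.dim_customer.customer_key', 'dw.dim_customer.email',
#  'dw.fact_sales.customer_key', 'staging.customers.email_clean']
```

Answering "what breaks if this source column changes?" before making the change is the practical payoff of keeping lineage metadata up to date.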

While PowerCenter boasts numerous advantages, it’s important to acknowledge its drawbacks. The platform is known for its high licensing costs and implementation expenses, potentially making it prohibitive for smaller organisations. The complex setup and configuration process often requires specialised expertise, and the steep learning curve can be challenging for new users. Additionally, PowerCenter’s resource-intensive infrastructure requirements necessitate significant hardware investments.


In terms of pricing, Informatica typically employs a tiered licensing model based on the number of CPUs or data volume processed. Precise pricing details are usually obtained through direct contact with Informatica or its authorised partners. Technical requirements vary depending on the specific implementation, but generally involve powerful servers, ample storage capacity, and a supported operating system.

Compared to other data integration solutions, such as Talend Open Studio or Apache Kafka, Informatica PowerCenter occupies the enterprise-grade segment. While open-source alternatives offer cost advantages, PowerCenter excels in its scalability, reliability, and comprehensive feature set, making it a compelling option for large enterprises with complex data integration needs.

Implementing PowerCenter effectively requires careful planning and execution. Engaging experienced consultants can streamline the implementation process and ensure optimal configuration. Thorough testing and validation are crucial for ensuring data quality and accuracy. Furthermore, investing in training for users can maximise the platform’s potential and minimise the learning curve.
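One simple form of testing and validation is source-to-target reconciliation. The sketch below is a generic illustration, not a PowerCenter feature; the `reconcile` helper, the key column and the sample rows are hypothetical. It hashes each row so that matching row counts alone cannot hide changed values.

```python
import hashlib


def row_fingerprint(row, columns):
    """Hash the chosen columns of one row into a stable hex digest."""
    joined = "|".join(str(row[c]) for c in columns)
    return hashlib.sha256(joined.encode("utf-8")).hexdigest()


def reconcile(source_rows, target_rows, key, columns):
    """Compare source and target row sets by key and report any differences."""
    src = {r[key]: row_fingerprint(r, columns) for r in source_rows}
    tgt = {r[key]: row_fingerprint(r, columns) for r in target_rows}
    return {
        "row_count_match": len(source_rows) == len(target_rows),
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "unexpected_in_target": sorted(tgt.keys() - src.keys()),
        "mismatched": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }


# Hypothetical extracts: same row count, but the contents have drifted.
source = [{"id": 1, "amount": 10}, {"id": 2, "amount": 20}, {"id": 3, "amount": 30}]
target = [{"id": 1, "amount": 10}, {"id": 2, "amount": 25}, {"id": 4, "amount": 40}]

report = reconcile(source, target, key="id", columns=["id", "amount"])
print(report)
# {'row_count_match': True, 'missing_in_target': [3], 'unexpected_in_target': [4], 'mismatched': [2]}
```

Here the row counts agree, yet one row is missing, one is unexpected and one has a changed amount, which is why count checks are usually paired with content checks.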

In conclusion, Informatica PowerCenter remains a powerful data integration solution for Australian enterprises seeking to manage and leverage their data assets effectively. Its comprehensive features, scalability, and robust security make it suitable for organisations with complex data landscapes. However, potential users should carefully consider the high licensing costs and resource requirements before committing to this platform. By weighing the pros and cons, businesses can make informed decisions about whether Informatica PowerCenter aligns with their specific data integration needs and budget.

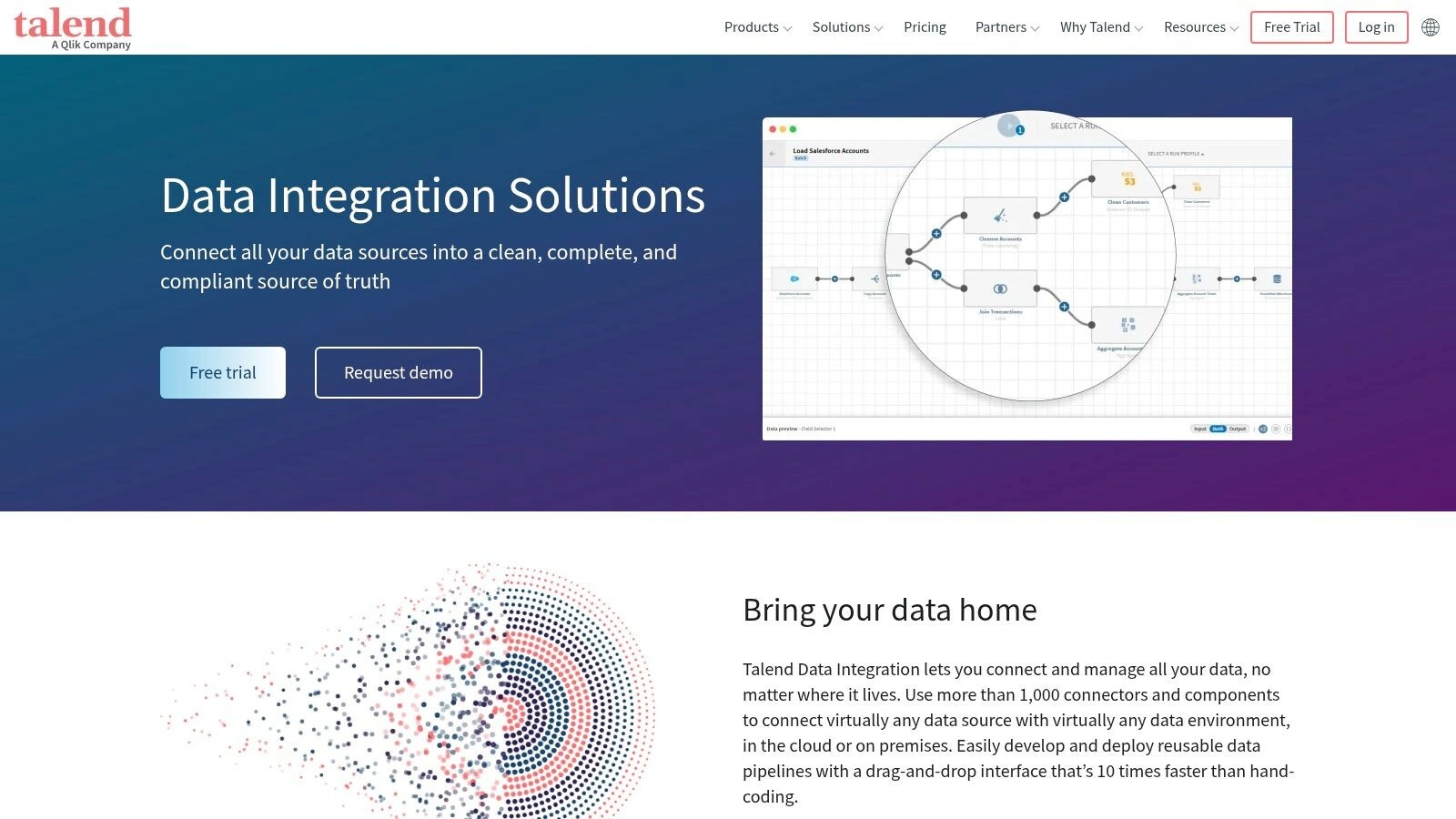

3. Talend Data Integration

Talend Data Integration stands out as a robust data integration solution, catering to a diverse range of organisations from startups to large enterprises. Its dual offering of open-source and commercial versions makes it a flexible choice for businesses in the AU region seeking to modernise their data management processes. Whether you’re looking to streamline legacy workflows, enhance business intelligence, or fuel data-driven decision-making, Talend Data Integration provides the tools to connect, transform, and manage data from various sources. This adaptability is a key reason for its inclusion in this list of top data integration solutions.

Talend Data Integration offers a comprehensive suite of features encompassing Extract, Transform, Load (ETL), Extract, Load, Transform (ELT), and broader data management capabilities. Its intuitive drag-and-drop interface empowers users to design and orchestrate complex data pipelines without extensive coding, democratising access to data integration across the organisation. This user-friendly design accelerates development and reduces the learning curve, allowing IT teams to focus on strategic initiatives rather than wrestling with complex code.

For organisations working with large datasets and leveraging big data technologies, Talend provides native support for Hadoop and Spark. This capability enables seamless integration with modern data architectures, empowering businesses to extract valuable insights from vast data lakes and data warehouses. Furthermore, Talend’s cloud-native integration capabilities facilitate the connection and management of data across various cloud platforms, supporting hybrid and multi-cloud deployments, which are becoming increasingly prevalent in the Australian business landscape. Real-time data streaming and Change Data Capture (CDC) functionalities allow businesses to respond to changing market conditions with agility and maintain a competitive edge.
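Log-based CDC reads a database's transaction log, which is hard to show in a few lines. The sketch below swaps in a simpler, related technique, query-based incremental extraction with a high-water mark, using Python's built-in `sqlite3` module as a stand-in source. The `customers` table and its `updated_at` column are assumptions; this is not how Talend implements CDC.

```python
import sqlite3


def extract_changes(conn, last_watermark):
    """Return rows modified after `last_watermark`, plus the new watermark.

    Query-based incremental extraction: depends on the source keeping an
    `updated_at` column current, and cannot see hard deletes.
    """
    rows = conn.execute(
        "SELECT id, name, updated_at FROM customers "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark


# Hypothetical source table; ISO-8601 text timestamps sort correctly as strings.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, "Acme", "2025-01-01T09:00"), (2, "Globex", "2025-01-02T10:30")],
)

first_batch, watermark = extract_changes(conn, "1970-01-01T00:00")  # initial load: both rows
conn.execute(
    "UPDATE customers SET name = 'Acme Pty Ltd', updated_at = '2025-01-03T08:15' WHERE id = 1"
)
second_batch, watermark = extract_changes(conn, watermark)  # only the updated row
print(second_batch)  # [(1, 'Acme Pty Ltd', '2025-01-03T08:15')]
```

Because the query uses a strict `>` comparison, a row written later with exactly the stored watermark timestamp could be missed, so production pipelines often add a small overlap or a tie-breaking key. Hard deletes are also invisible to this approach, which is one reason log-based CDC is preferred where it is available.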

Cost-Effectiveness and Flexibility: Talend’s availability in both open-source and commercial editions provides flexibility in terms of cost and features. The open-source version offers a cost-effective entry point for smaller organisations or those looking to pilot data integration projects. However, the open-source version has some performance limitations and lacks certain advanced features found in the commercial edition. Qlik, which acquired Talend in 2023, also retired the free Talend Open Studio in early 2024, so it is worth confirming which editions are currently offered. The enterprise version unlocks additional features, scalability, and dedicated support, catering to the needs of larger enterprises with more demanding data integration requirements.

Addressing Specific Business Needs: Talend Data Integration provides significant benefits to various roles and departments within an organisation. For IT directors and CTOs responsible for systems integration, Talend simplifies the process of connecting disparate systems, reducing complexity and improving data flow. Sales and marketing teams benefit from automated account-based strategies powered by integrated customer data, leading to more targeted and effective campaigns. Operations managers can leverage Talend to boost efficiency and productivity by automating data-driven workflows, while C-level executives gain valuable insights for strategic decision-making.

Comparison with Similar Tools: Compared to other data integration solutions like Informatica PowerCenter and MuleSoft Anypoint Platform, Talend differentiates itself with its open-source offering and focus on big data integration. Informatica is known for its robust enterprise-grade features, but often comes with a higher price tag. MuleSoft excels in API management and application integration, but may not be as specialised for complex ETL processes. Talend offers a compelling balance between cost, functionality, and ease of use.

Implementation and Setup Tips: A successful Talend implementation requires careful planning and consideration of your specific requirements. Start by clearly defining your integration goals and identifying the data sources involved. Leverage the extensive online documentation and community support available, which is a significant advantage of choosing Talend. For larger projects, consider engaging Talend’s professional services team for expert guidance. Also, thoroughly test your integration jobs before deploying them to production to ensure data quality and accuracy.

Technical Requirements and Pricing: Specific technical requirements for Talend Data Integration depend on the chosen deployment model (cloud, on-premises, or hybrid) and the scale of your data operations. Pricing for the commercial edition is typically subscription-based and varies based on the features and support level required. Contact Talend directly for detailed pricing information tailored to your specific needs.

Conclusion: Talend Data Integration offers a compelling value proposition for organisations in the AU region looking to modernise their data integration processes. Its combination of open-source and commercial options, user-friendly interface, and powerful features makes it a versatile solution capable of addressing a wide range of data management challenges. From streamlining operations to empowering data-driven decision-making, Talend helps businesses unlock the full potential of their data. For more information, visit the Talend website: https://www.talend.com/products/data-integration/

4. Microsoft Azure Data Factory

Microsoft Azure Data Factory is a robust cloud-based data integration solution ideal for organisations looking to modernise their data management strategies. As a fully managed Platform-as-a-Service (PaaS) offering, Azure Data Factory empowers businesses to create, schedule, and orchestrate complex data workflows at scale, without the burden of managing infrastructure. Its hybrid capabilities bridge the gap between on-premises and cloud data sources, offering a unified platform for all your data integration needs. This makes it a powerful contender amongst other data integration solutions, especially for enterprises already invested in the Microsoft ecosystem.
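Orchestration means running activities in dependency order and holding back downstream work when something fails. The sketch below models that idea in plain Python with `graphlib.TopologicalSorter` (Python 3.9+). The activity names are hypothetical, and the status handling is a simplification loosely inspired by Data Factory's activity dependencies, not its actual runtime.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each activity maps to the set of activities it depends on.
PIPELINE = {
    "copy_crm_to_lake": set(),
    "copy_erp_to_lake": set(),
    "transform_sales": {"copy_crm_to_lake", "copy_erp_to_lake"},
    "load_synapse": {"transform_sales"},
    "refresh_report": {"load_synapse"},
}


def run_pipeline(pipeline, run_activity):
    """Run activities in dependency order.

    `run_activity(name)` returns True on success. An activity runs only if all
    of its dependencies succeeded; otherwise it is marked "Skipped".
    """
    status = {}
    for activity in TopologicalSorter(pipeline).static_order():
        if all(status[dep] == "Succeeded" for dep in pipeline[activity]):
            status[activity] = "Succeeded" if run_activity(activity) else "Failed"
        else:
            status[activity] = "Skipped"
    return status


# Simulate a run in which the transformation step fails.
result = run_pipeline(PIPELINE, lambda name: name != "transform_sales")
print(result["transform_sales"], result["load_synapse"], result["refresh_report"])
# Failed Skipped Skipped
```

In a managed service the scheduler, retries and monitoring around this loop are what you are paying for; the dependency logic itself stays this simple.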

Azure Data Factory’s visual pipeline designer simplifies the process of building Extract, Transform, Load (ETL) and Extract, Load, Transform (ELT) pipelines. This intuitive interface allows users to drag and drop components, define data flows, and monitor pipeline execution with ease. For Australian businesses dealing with large volumes of data, this visual approach can significantly reduce development time and improve overall productivity. The platform’s serverless architecture automatically scales resources based on demand, ensuring optimal performance and cost efficiency. This pay-as-you-use pricing model allows businesses to only pay for the resources consumed, making it a cost-effective solution for organisations of all sizes.

One of Azure Data Factory’s key strengths lies in its seamless integration with the broader Azure ecosystem. It connects effortlessly with services like Azure Blob Storage, Azure Data Lake Storage, Azure SQL Database, and Azure Synapse Analytics, enabling a cohesive and streamlined data management environment. This tight integration makes it particularly attractive for organisations already leveraging Azure services. For example, a sales and marketing team can use Azure Data Factory to automate the integration of customer data from various sources into Azure Synapse Analytics for advanced analytics and reporting, enabling more targeted and effective account-based marketing strategies. Similarly, operations managers can leverage Azure Data Factory to streamline data flows between operational systems and data warehouses, boosting efficiency and enabling data-driven decision-making.

However, Azure Data Factory’s close ties to the Azure ecosystem also present a potential drawback. The service itself runs only in Azure, and although it includes connectors for other clouds (for example Amazon S3 and Google BigQuery) and can reach on-premises sources through a self-hosted integration runtime, its full potential is realised when the surrounding storage, compute, and analytics services are also on Azure. Organisations with a multi-cloud strategy centred on AWS or Google Cloud may therefore find a more platform-neutral tool a better fit. Furthermore, while the visual interface simplifies many tasks, there can be a learning curve for users unfamiliar with the Microsoft ecosystem.

For Australian businesses considering Azure Data Factory, understanding the pricing structure is crucial. Costs are driven mainly by orchestration (the number of activity runs), data movement by copy activities, and the compute used by data flows for transformation. While the pay-as-you-use model offers flexibility, costs can escalate with high data volumes or complex transformations. It’s vital to evaluate your data integration needs and projected usage carefully to estimate costs accurately.
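A back-of-the-envelope estimate can help before committing. The sketch below assumes a bill dominated by three meters: orchestration (charged per 1,000 activity runs), data movement (charged per Data Integration Unit hour) and data flow compute (charged per vCore hour). The rates passed in are placeholders, not Microsoft's prices; substitute current figures from the Azure pricing page for your region.

```python
from dataclasses import dataclass


@dataclass
class MonthlyUsage:
    activity_runs: int           # orchestration: activity runs in the month
    copy_diu_hours: float        # data movement: Data Integration Unit hours
    dataflow_vcore_hours: float  # data flow compute: vCore hours


@dataclass
class Rates:
    # Placeholder rates for illustration; use current Azure prices for your region.
    per_1000_activity_runs: float
    per_diu_hour: float
    per_vcore_hour: float


def estimate_monthly_cost(usage: MonthlyUsage, rates: Rates) -> float:
    """Sum the three assumed cost components and round to cents."""
    orchestration = usage.activity_runs / 1000 * rates.per_1000_activity_runs
    movement = usage.copy_diu_hours * rates.per_diu_hour
    transformation = usage.dataflow_vcore_hours * rates.per_vcore_hour
    return round(orchestration + movement + transformation, 2)


# Hypothetical month of usage with made-up rates.
usage = MonthlyUsage(activity_runs=9_000, copy_diu_hours=60, dataflow_vcore_hours=120)
rates = Rates(per_1000_activity_runs=1.0, per_diu_hour=0.25, per_vcore_hour=0.5)
print(estimate_monthly_cost(usage, rates))  # 84.0
```

Even with placeholder rates, laying the components out this way shows which lever (run frequency, copy volume or data flow compute) dominates a given workload.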

From a technical perspective, setting up Azure Data Factory requires an active Azure subscription. Users need appropriate permissions to create and manage resources within the Azure portal. Familiarity with core Azure concepts, like resource groups and virtual networks, is beneficial for a smoother implementation. Microsoft provides extensive documentation and tutorials to guide users through the setup and configuration process.

In comparison to other data integration solutions, such as Informatica PowerCenter or Talend Open Studio, Azure Data Factory stands out for its cloud-native architecture, serverless capabilities, and tight integration with the Azure ecosystem. While Informatica and Talend offer more platform-agnostic solutions, Azure Data Factory provides a compelling option for organisations committed to the Microsoft cloud.

For Australian enterprises seeking a scalable and cost-effective data integration solution within the Azure environment, Microsoft Azure Data Factory offers a powerful suite of tools. Its visual interface, hybrid capabilities, and seamless integration with other Azure services make it a valuable asset for modernising data workflows and unlocking the full potential of your data. Website: https://azure.microsoft.com/en-us/products/data-factory/

5. IBM InfoSphere DataStage

IBM InfoSphere DataStage is a powerful enterprise-grade ETL (Extract, Transform, Load) tool designed for complex data integration challenges. As part of the IBM Information Server suite, it offers a comprehensive platform for developing, managing, and executing data integration jobs that efficiently move and transform data across various sources and targets. Its robust architecture, combined with parallel processing capabilities, makes it particularly well-suited for high-volume data processing needs in large enterprises across Australia. This positions DataStage as a valuable data integration solution for organisations looking to modernise their data management processes and gain a competitive edge.

DataStage’s inclusion in this list is warranted due to its proven track record in handling complex data integration scenarios. It offers a visual interface for designing data integration jobs, simplifying the process and reducing the need for extensive hand-coding. This graphical job design capability allows users to drag and drop components to create complex data pipelines, making it more accessible to a wider range of users, even those without deep programming skills. The platform’s support for both real-time and batch processing provides flexibility in handling different data integration requirements. Organisations can use DataStage to process data in real time for immediate insights or in batches for large-scale data warehousing tasks. This adaptability makes DataStage a versatile solution for various data integration needs.

One of DataStage’s key strengths is its parallel processing engine, which allows for high-performance data processing by distributing workloads across multiple processors or servers. This feature is particularly beneficial for large enterprises dealing with massive datasets and demanding service level agreements. The platform’s enterprise-grade security and governance features ensure data integrity and compliance with regulatory requirements, a critical aspect for organisations operating in regulated industries. DataStage also offers advanced data transformation capabilities, including data cleansing, standardisation, and enrichment, ensuring high-quality data for downstream applications.
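The core idea behind partition parallelism can be sketched briefly: split rows into partitions by hashing a key, process each partition independently, then combine the results. In the Python below, threads from `concurrent.futures` stand in for DataStage's processing nodes, so it illustrates the data flow rather than real speed-ups; the customer and amount fields are hypothetical.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor


def hash_partition(rows, key, n_partitions):
    """Assign each row to a partition by hashing its key, so equal keys share a partition."""
    partitions = [[] for _ in range(n_partitions)]
    for row in rows:
        index = zlib.crc32(str(row[key]).encode("utf-8")) % n_partitions
        partitions[index].append(row)
    return partitions


def aggregate_partition(rows):
    """Per-partition work: total amount per customer."""
    totals = {}
    for row in rows:
        totals[row["customer"]] = totals.get(row["customer"], 0) + row["amount"]
    return totals


def parallel_totals(rows, n_partitions=4):
    """Partition by customer, aggregate partitions concurrently, then merge."""
    partitions = hash_partition(rows, "customer", n_partitions)
    with ThreadPoolExecutor(max_workers=n_partitions) as pool:
        partials = list(pool.map(aggregate_partition, partitions))
    # Each customer lives in exactly one partition, so partial results never overlap.
    merged = {}
    for partial in partials:
        merged.update(partial)
    return merged


rows = [
    {"customer": "c1", "amount": 10},
    {"customer": "c2", "amount": 5},
    {"customer": "c1", "amount": 7},
    {"customer": "c3", "amount": 1},
]
print(sorted(parallel_totals(rows).items()))  # [('c1', 17), ('c2', 5), ('c3', 1)]
```

`zlib.crc32` is used instead of Python's built-in `hash()` because string hashing is randomised per process, whereas a partitioner must send the same key to the same partition every time.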

For Australian businesses, DataStage can address a wide range of data integration use cases. For instance, it can streamline the integration of customer data from various sources, creating a unified view of the customer for improved marketing and sales efforts. It can also facilitate data migration projects, such as consolidating data from legacy systems into a modern data warehouse, enabling better reporting and analytics. Furthermore, DataStage can support operational data integration, ensuring consistency and accuracy of data across different operational systems.
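At its simplest, building a unified customer view means matching records from different systems on a shared key and merging their fields. The sketch below matches only on a normalised email address and lets earlier sources win conflicts; both rules, and the sample CRM and billing records, are assumptions. Production matching usually needs fuzzier rules than this.

```python
def normalise_email(email):
    """Lower-case and trim an email address so trivially different spellings match."""
    return email.strip().lower()


def unify_customers(*sources):
    """Merge records from (source_name, records) pairs into one record per email.

    Earlier sources take precedence; later sources only fill fields still empty.
    """
    unified = {}
    for source_name, records in sources:
        for record in records:
            key = normalise_email(record["email"])
            merged = unified.setdefault(key, {"email": key, "sources": []})
            merged["sources"].append(source_name)
            for field, value in record.items():
                if field != "email" and value and not merged.get(field):
                    merged[field] = value
    return unified


# Hypothetical records for the same person held in two systems.
crm = [{"email": " Jo@Example.com", "name": "Jo Smith", "phone": ""}]
billing = [{"email": "jo@example.com", "name": "J. Smith", "phone": "0400 000 000", "plan": "Pro"}]

print(unify_customers(("crm", crm), ("billing", billing)))
# {'jo@example.com': {'email': 'jo@example.com', 'sources': ['crm', 'billing'],
#   'name': 'Jo Smith', 'phone': '0400 000 000', 'plan': 'Pro'}}
```

Keeping the list of contributing sources on each merged record makes it easier to trace where a value came from when the unified view is questioned.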

While DataStage offers significant benefits, it’s important to acknowledge its drawbacks. Licensing and maintenance costs can be substantial, making it a significant investment, particularly for smaller organisations. The platform’s complexity can also lead to increased administration and setup overhead, requiring specialised skills and training. While DataStage has made strides in cloud integration, its cloud-native capabilities are still somewhat limited compared to some newer cloud-based data integration tools.

Implementation of DataStage requires careful planning and execution. Organisations should assess their data integration requirements thoroughly and design their DataStage jobs accordingly. Leveraging IBM’s extensive documentation and training resources is crucial for successful implementation. Engaging with IBM’s professional services or experienced DataStage consultants can also help streamline the implementation process and ensure optimal configuration.

Pricing for IBM InfoSphere DataStage is typically based on a combination of factors including processor value units (PVUs), the number of cores used, and the specific features required. Contacting IBM directly is recommended to obtain accurate pricing information based on your specific needs. Technical requirements vary depending on the chosen deployment model (on-premises, cloud, or hybrid) and the scale of data processing. These requirements typically include specific operating systems, database platforms, and hardware configurations. IBM provides detailed documentation outlining the technical specifications for each deployment option.

Compared to other ETL tools like Informatica PowerCenter or Talend Open Studio, DataStage stands out with its robust parallel processing engine and enterprise-grade features. While Informatica PowerCenter offers similar capabilities, it often comes at a higher price point. Talend Open Studio, while providing a cost-effective open-source option, may lack the enterprise-grade scalability and security features offered by DataStage. Choosing the right tool depends on your organisation’s specific needs and budget. For large enterprises in Australia with complex data integration needs and a focus on performance and security, IBM InfoSphere DataStage offers a compelling data integration solution. Website: https://www.ibm.com/products/infosphere-datastage

6. Fivetran

Fivetran is a compelling data integration solution for Australian businesses seeking a largely automated way to consolidate data from various sources. This cloud-native platform follows an Extract, Load, Transform (ELT) methodology: it moves data into a central data warehouse first, and transformations are applied there afterwards. This approach offers several advantages, especially for organisations dealing with high volumes of data. Because transformations run inside the warehouse and can draw on its scalable compute, they can often be more complex and efficient than in a traditional ETL (Extract, Transform, Load) pipeline, where data is transformed on a separate server before loading. This efficiency makes Fivetran a strong contender in the crowded landscape of data integration solutions, particularly for medium to large enterprises aiming to modernise their data management processes.
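The difference between ELT and ETL is mostly about where the transformation runs. The sketch below uses an in-memory SQLite database as a stand-in warehouse: raw rows are loaded unchanged as text, and the cleaning and aggregation happen afterwards as SQL inside the database. Table names and sample values are hypothetical; in Fivetran's model, the extract-and-load steps are what its connectors automate.

```python
import sqlite3

# Stand-in for a cloud data warehouse.
warehouse = sqlite3.connect(":memory:")

# Extract + Load: copy source rows as-is into a raw table (everything kept as text).
warehouse.execute("CREATE TABLE raw_orders (id TEXT, customer TEXT, amount TEXT, status TEXT)")
warehouse.executemany(
    "INSERT INTO raw_orders VALUES (?, ?, ?, ?)",
    [
        ("1", "acme", "120.50", "PAID"),
        ("2", "globex", "80.00", "refunded"),
        ("3", "acme", "40", "paid"),
    ],
)

# Transform: runs later, inside the warehouse, as SQL over the raw table.
warehouse.execute(
    """
    CREATE TABLE customer_revenue AS
    SELECT customer, SUM(CAST(amount AS REAL)) AS revenue
    FROM raw_orders
    WHERE LOWER(status) = 'paid'
    GROUP BY customer
    """
)
revenue_rows = warehouse.execute(
    "SELECT customer, revenue FROM customer_revenue ORDER BY customer"
).fetchall()
print(revenue_rows)  # [('acme', 160.5)]
```

Because the raw table is kept, the transformation can be rewritten and re-run later without going back to the source systems.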

Fivetran’s strength lies in its extensive library of pre-built connectors. These cover a wide range of popular SaaS applications, databases, and data warehouses used by Australian businesses, including sources such as Salesforce, Marketo, NetSuite, MySQL, and PostgreSQL, and destinations such as Snowflake and Google BigQuery. Because the connectors are pre-built, the time and effort required for initial setup and ongoing maintenance of data pipelines drops dramatically. Instead of writing complex custom integration scripts, IT teams can use these connectors to establish reliable data flows quickly. This is particularly valuable for fast-growing businesses needing to scale their data infrastructure rapidly.

Automated schema change handling is another key differentiator for Fivetran. As source systems evolve and data structures change, Fivetran automatically adapts the data pipelines, ensuring continuous data synchronisation without manual intervention. This feature significantly reduces the risk of data errors and pipeline breakages, freeing up IT resources for more strategic initiatives. For companies undergoing digital transformation or experiencing rapid growth, this automatic adaptation is invaluable for maintaining data integrity and operational efficiency.
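One common pattern for handling schema changes is additive schema evolution: when a source record arrives with a field the destination table lacks, add the column and carry on. The sketch below implements that pattern with `sqlite3`; it is not Fivetran's code, it handles only new columns (not type changes or renames), and the table and field names are hypothetical. Identifiers are interpolated into SQL, so it assumes trusted, simple names.

```python
import sqlite3


def existing_columns(conn, table):
    """Return the set of column names currently defined on `table`."""
    return {row[1] for row in conn.execute(f"PRAGMA table_info({table})")}


def load_with_schema_evolution(conn, table, records):
    """Insert records, first adding any columns the destination table lacks.

    Assumes trusted, simple identifiers (they are interpolated into SQL).
    New columns are added as TEXT; earlier rows keep NULL in them.
    """
    for record in records:
        for column in sorted(record.keys() - existing_columns(conn, table)):
            conn.execute(f'ALTER TABLE {table} ADD COLUMN "{column}" TEXT')
        columns = list(record)
        column_list = ", ".join(f'"{c}"' for c in columns)
        placeholders = ", ".join("?" for _ in columns)
        conn.execute(
            f"INSERT INTO {table} ({column_list}) VALUES ({placeholders})",
            [record[c] for c in columns],
        )


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE contacts (id INTEGER, email TEXT)")
load_with_schema_evolution(conn, "contacts", [{"id": 1, "email": "a@example.com"}])
# The source later starts sending a `phone` field.
load_with_schema_evolution(conn, "contacts", [{"id": 2, "email": "b@example.com", "phone": "0400 111 222"}])
print(sorted(existing_columns(conn, "contacts")))  # ['email', 'id', 'phone']
```

Fields that disappear from the source need no special handling here: new rows simply leave the old column NULL.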

While Fivetran shines in its automated capabilities, it’s essential to consider its limitations. The platform is primarily geared towards loading and replicating data, with less emphasis on in-pipeline transformation. While transformations can be performed within the destination data warehouse, this might require additional tooling or expertise. Furthermore, the reliance on pre-built connectors, while advantageous for ease of use, can be a constraint for organisations requiring highly customised integrations with niche systems.

Fivetran’s pricing is consumption-based: it is measured in monthly active rows (MAR), so costs scale with the number of distinct rows added, updated, or deleted each month. While this model offers flexibility, it can become expensive for businesses with extremely large or frequently changing datasets. Evaluating projected data volumes and associated costs is crucial during the vendor selection process. Alternatives like Matillion and Informatica PowerCenter offer more comprehensive transformation capabilities and potentially more cost-effective solutions for specific use cases, albeit often with greater setup and maintenance overhead.
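To get a rough feel for how monthly active rows accumulate, the sketch below counts distinct (table, primary key) pairs changed in each calendar month from a hypothetical change log. It is a simplified approximation; Fivetran's own documentation defines exactly which rows count and how they are billed.

```python
from collections import defaultdict
from datetime import date


def monthly_active_rows(change_log):
    """Count distinct (table, primary key) pairs changed in each calendar month.

    `change_log` holds (change_date, table, primary_key) tuples. A row changed
    several times within one month is counted once for that month.
    """
    active = defaultdict(set)
    for change_date, table, primary_key in change_log:
        active[(change_date.year, change_date.month)].add((table, primary_key))
    return {month: len(keys) for month, keys in sorted(active.items())}


# Hypothetical change log.
changes = [
    (date(2025, 3, 1), "orders", 101),
    (date(2025, 3, 2), "orders", 101),     # same row again in March: counted once
    (date(2025, 3, 5), "orders", 102),
    (date(2025, 3, 9), "customers", 101),  # same key, different table: counted separately
    (date(2025, 4, 1), "orders", 101),     # new month: counted again
]
print(monthly_active_rows(changes))  # {(2025, 3): 3, (2025, 4): 1}
```

Under this kind of model, how many distinct rows change matters more than how many times they change, which is worth modelling for high-churn tables before signing up.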

For Australian companies considering Fivetran, some implementation tips can ensure a smoother integration process. Firstly, clearly define your data integration requirements and map your existing data sources. This helps determine which Fivetran connectors are suitable and identify any potential gaps. Secondly, leverage Fivetran’s extensive documentation and support resources during the setup phase. Finally, thoroughly test the data pipelines to ensure data accuracy and integrity before deploying to production.

Fivetran’s zero-maintenance philosophy and automated capabilities are a boon for Australian organisations struggling with the complexities of traditional data integration. While the platform’s focus on ELT and reliance on pre-built connectors may not suit every use case, its ease of use, reliability, and ability to handle schema changes make it a compelling solution for businesses prioritising speed and efficiency in their data management strategies. This is particularly relevant for medium and large enterprises, IT directors, CTOs, sales and marketing teams, operations managers, and C-level executives seeking to unlock the full potential of their data. Even company owners and founders looking to scale their operations can benefit from Fivetran’s streamlined approach to data integration. However, considering factors like data volume, transformation requirements, and budget is crucial to ensuring a successful implementation. By understanding Fivetran’s strengths and limitations, Australian businesses can make an informed decision on whether it is the right data integration solution to meet their unique needs.

7. Stitch Data

Stitch Data, now part of Talend (which was itself acquired by Qlik in 2023), presents a compelling data integration solution, particularly for organisations seeking a cloud-based ETL (Extract, Transform, Load) service that prioritises simplicity and developer friendliness. It shines in its ability to streamline the process of moving data from diverse sources into data warehouses, making it a valuable tool for businesses in the AU region looking to modernise their data infrastructure and unlock valuable insights. Its focus on ease of use and transparent, row-based pricing makes it a strong contender in the crowded field of data integration solutions.

Stitch Data distinguishes itself with a simplified setup and configuration process. This makes it ideal for organisations that need to quickly establish reliable data pipelines without the overhead of complex configuration. Its developer-friendly APIs and tools further enhance its appeal, empowering technical teams to integrate Stitch seamlessly into existing workflows. The transparent, row-based pricing model provides predictable cost management, a critical factor for budget-conscious businesses. For Australian businesses, this predictability simplifies budgeting, although if the service is billed in a foreign currency, the AUD cost will still move with exchange rates. Stitch Data’s comprehensive range of data source connectors allows it to ingest data from a multitude of platforms, including databases, SaaS applications, and marketing tools, ensuring compatibility with diverse data ecosystems. Automated data replication and monitoring features ensure data integrity and pipeline stability, freeing up valuable IT resources.

For medium to large enterprises in Australia seeking to modernise legacy workflows, Stitch Data provides a straightforward path to consolidating data into a central warehouse. IT directors and CTOs appreciate how easily it can be implemented and how quickly it establishes robust pipelines. Its developer-friendly design also lets teams build custom integrations and automate data flows. For sales and marketing teams automating account-based strategies, Stitch can bring together customer data from various sources. That gives a more holistic view of each account and supports personalised campaigns and better customer engagement.

Operations managers focused on efficiency and productivity will value Stitch’s ability to streamline data processes, which frees staff for higher-value tasks. Its transparent pricing also supports accurate cost forecasting. C-level executives prioritising scalable growth and cost optimisation will be drawn to the predictable pricing model and to its capacity to handle growing data volumes. Its ease of use reduces the need for specialist expertise, cutting implementation costs and time to value. Company owners and founders looking to scale can use Stitch to build a robust data foundation that supports informed decision-making and business growth.

While Stitch Data excels in simplicity and ease of use, it has limitations. Its transformation capabilities are less extensive than those of enterprise-grade ETL tools, so users who need complex transformations may have to supplement Stitch with other tools. It also offers fewer advanced features than more comprehensive platforms, which may limit its suitability for highly complex integration scenarios. Row-based pricing is predictable, but costs can accumulate quickly at high data volumes. Organisations with massive datasets should weigh the likely costs against the benefits.
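A quick projection of monthly replicated rows against row-based tiers helps show how the cost caveat above plays out. The tier limits and prices below are placeholders invented for this example, not Stitch’s published pricing. Substitute the current figures from the vendor before relying on the output. The sketch also assumes that every inserted or updated row counts once towards the monthly total.

```python
from typing import List, NamedTuple, Optional


class Tier(NamedTuple):
    name: str
    max_rows_per_month: int
    monthly_price: float  # placeholder figure, not a real quote


# HYPOTHETICAL tiers for illustration only; replace with the vendor's current price list.
HYPOTHETICAL_TIERS: List[Tier] = [
    Tier("tier-a", 5_000_000, 100.0),
    Tier("tier-b", 25_000_000, 500.0),
    Tier("tier-c", 100_000_000, 1_250.0),
]


def monthly_rows(new_rows_per_day: int, updated_rows_per_day: int, days: int = 30) -> int:
    """Estimate replicated rows per month, assuming every inserted or updated row counts once."""
    return (new_rows_per_day + updated_rows_per_day) * days


def cheapest_tier(rows: int, tiers: List[Tier] = HYPOTHETICAL_TIERS) -> Optional[Tier]:
    """Return the smallest tier that covers the volume, or None if none does."""
    for tier in sorted(tiers, key=lambda t: t.max_rows_per_month):
        if rows <= tier.max_rows_per_month:
            return tier
    return None  # volume exceeds every listed tier: likely needs a custom quote


if __name__ == "__main__":
    for daily_new, daily_updated in [(100_000, 50_000), (400_000, 200_000), (3_000_000, 1_000_000)]:
        rows = monthly_rows(daily_new, daily_updated)
        tier = cheapest_tier(rows)
        label = f"{tier.name} at {tier.monthly_price:,.2f}/month" if tier else "custom quote"
        print(f"{rows:>12,} rows/month -> {label}")
```

The third scenario, 120 million rows a month, outgrows every listed tier. That is the high-volume risk to check before committing.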

Compared with other data integration solutions such as Fivetran and Matillion, Stitch Data positions itself as a more accessible, developer-centric option. Fivetran offers a wider range of pre-built connectors and more sophisticated transformation capabilities. Stitch, however, often provides a more affordable entry point, particularly for businesses with moderate data volumes. Matillion provides a more robust ETL platform with extensive transformation features, but it typically comes with a steeper learning curve and a higher price tag.

Implementing Stitch Data is typically straightforward. The web-based interface guides users through connecting each data source. Users select the sources they need, configure the integration settings and schedule replication. Detailed documentation and readily available support further simplify setup.

In conclusion, Stitch Data is a compelling option for Australian organisations seeking a balance of simplicity, affordability and reliability. Its ease of use, developer-friendly tools and transparent pricing make it a valuable asset for businesses looking to modernise their data workflows and unlock the potential of their data. You can explore more about Stitch Data on their website: https://www.stitchdata.com/

8. Apache NiFi

Apache NiFi is a powerful open-source data integration solution known for its visual, flow-based programming model and robust real-time data handling. It lets organisations connect disparate systems, transform data and automate complex workflows, which makes it a valuable part of modern data architectures. NiFi originated at the NSA, which helps explain its emphasis on security and robust performance. Its later open-sourcing has fostered an active community and continuous development. This makes NiFi a compelling option for Australian businesses seeking a flexible and scalable data integration platform.

NiFi truly shines in complex data routing and transformation scenarios. Imagine a retailer that needs to consolidate sales data from its online and physical stores, cleanse it, and route it to different analytics platforms, marketing automation tools and inventory management systems. NiFi can orchestrate this entire process while handling high data volumes in real time. Its web-based visual flow designer lets users drag and drop pre-built processors, configure them and connect them into sophisticated pipelines, largely without writing code. This visual approach simplifies development and maintenance and makes it easier for teams to collaborate on complex data flows.
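In NiFi, a flow like this is built from configured processors rather than code. RouteOnAttribute, for example, directs data according to attribute values. The plain-Python sketch below makes the routing logic of the retailer example concrete by sending each cleansed sales record to one or more destinations. It is not NiFi’s API, and the field names, rules and destination names are hypothetical.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List

Record = Dict[str, Any]
Rule = Callable[[Record], bool]

# Hypothetical routing rules: destination name -> predicate on a cleansed record.
ROUTES: Dict[str, Rule] = {
    "analytics": lambda r: r.get("amount") is not None,
    "marketing_automation": lambda r: r["channel"] == "online" and bool(r.get("email")),
    "inventory": lambda r: r["quantity"] > 0,
}


def cleanse(record: Record) -> Record:
    """Normalise fields before routing: trim and upper-case store codes, lower-case channel."""
    cleaned = dict(record)
    cleaned["store"] = str(cleaned["store"]).strip().upper()
    cleaned["channel"] = str(cleaned["channel"]).strip().lower()
    return cleaned


def route(records: List[Record], routes: Dict[str, Rule] = ROUTES) -> Dict[str, List[Record]]:
    """Send each record to every destination whose rule matches; the rest go to 'unmatched'."""
    out: Dict[str, List[Record]] = defaultdict(list)
    for raw in records:
        rec = cleanse(raw)
        matched = [dest for dest, rule in routes.items() if rule(rec)]
        for dest in matched or ["unmatched"]:
            out[dest].append(rec)
    return dict(out)


if __name__ == "__main__":
    sales = [
        {"store": " syd01 ", "channel": "Online", "email": "a@example.com", "quantity": 2, "amount": 59.9},
        {"store": "mel02", "channel": "In-Store", "quantity": 1, "amount": 20.0},
        {"store": "bne03", "channel": "online", "quantity": 0, "amount": None},
    ]
    for destination, recs in route(sales).items():
        print(destination, [r["store"] for r in recs])
```

A single record can reach several destinations, much as one NiFi relationship can feed several downstream processors. The `unmatched` bucket plays a role similar to RouteOnAttribute’s unmatched relationship, so nothing is silently dropped.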

The platform’s extensible processor architecture is another key strength. NiFi’s built-in transformation capabilities are more limited than those of some commercial tools. However, developers can create custom processors for specific needs, enabling integration with virtually any data source or destination. NiFi’s data provenance tracking also gives detailed visibility into the journey of data through the system. This is crucial for compliance, debugging and data governance, because users can trace the origin, transformations and destinations of each FlowFile (NiFi’s unit of data).
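NiFi records provenance events in its provenance repository, for example when a FlowFile is received, modified, routed or sent. Its UI lets users query that history. The Python sketch below imitates the idea on a small scale. Every step appends an event with a timestamp and a content hash, so a record’s lineage can be replayed later. The classes and event names are simplified assumptions loosely modelled on NiFi’s concepts, not its data model.

```python
import hashlib
import json
import uuid
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, List


@dataclass
class ProvenanceEvent:
    event_type: str       # e.g. RECEIVE, CONTENT_MODIFIED, SEND
    component: str        # the step that acted on the data
    content_sha256: str   # hash of the content after this step
    timestamp: str        # UTC time in ISO 8601 format


@dataclass
class TrackedRecord:
    """A unit of data together with the history of everything done to it."""
    content: dict
    record_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    lineage: List[ProvenanceEvent] = field(default_factory=list)

    def log(self, event_type: str, component: str) -> None:
        digest = hashlib.sha256(json.dumps(self.content, sort_keys=True).encode("utf-8")).hexdigest()
        now = datetime.now(timezone.utc).isoformat()
        self.lineage.append(ProvenanceEvent(event_type, component, digest, now))


def receive(content: dict, source: str) -> TrackedRecord:
    rec = TrackedRecord(content=dict(content))
    rec.log("RECEIVE", source)
    return rec


def transform(rec: TrackedRecord, component: str, fn: Callable[[dict], dict]) -> TrackedRecord:
    rec.content = fn(rec.content)
    rec.log("CONTENT_MODIFIED", component)
    return rec


def send(rec: TrackedRecord, destination: str) -> TrackedRecord:
    rec.log("SEND", destination)
    return rec


if __name__ == "__main__":
    rec = receive({"sku": "A1", "qty": "3"}, "pos-store-feed")
    transform(rec, "cast-qty-to-int", lambda c: {**c, "qty": int(c["qty"])})
    send(rec, "analytics-warehouse")
    for event in rec.lineage:
        print(event.event_type, event.component, event.content_sha256[:12])
```

A change in the content hash between two events shows exactly which step altered the data. That is the kind of question provenance answers during debugging or an audit.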

For Australian enterprises, NiFi’s open-source licence can translate into significant cost savings. It is free to use, modify and distribute, so there are none of the licensing fees that proprietary data integration solutions carry. This makes it particularly attractive for startups and SMEs that want robust data integration without a hefty upfront investment. The software itself is free, but infrastructure, deployment and maintenance still cost money. Organisations will need to invest in servers, storage and possibly skilled personnel to manage the NiFi environment effectively.

Compared with other open-source tools such as Apache Kafka or Apache Camel, NiFi offers a more user-friendly visual interface. It also focuses on data flow management rather than solely on message streaming or routing. Kafka excels at high-throughput event streaming, whereas NiFi provides a broader set of functions for data transformation and processing. Camel is a code-centric integration framework that demands greater developer expertise. NiFi’s visual approach simplifies complex integration tasks and makes them accessible to a wider range of users.

Implementing NiFi successfully requires careful planning. Organisations should assess their data volume, velocity and variety to determine the infrastructure and resources needed. The web-based interface is intuitive, but installation, configuration and ongoing maintenance still require a certain level of technical expertise. The flow-based programming model can be challenging at first but becomes manageable with practice and community support.
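Assessing volume and velocity usually starts with back-of-the-envelope arithmetic. Daily ingest is roughly events per second × average event size × 86,400 seconds. The storage needed for buffering and retention, for example in NiFi’s content and provenance repositories, is roughly that daily figure × retention days × a safety margin. The event rate, event size, three-day retention and 1.5× headroom below are assumptions to replace with your own measurements. NiFi’s actual repository usage depends on how it is configured.

```python
SECONDS_PER_DAY = 86_400


def daily_ingest_gb(events_per_second: float, avg_event_bytes: float) -> float:
    """Data arriving per day in gigabytes (1 GB = 10**9 bytes)."""
    return events_per_second * avg_event_bytes * SECONDS_PER_DAY / 1e9


def storage_needed_gb(daily_gb: float, retention_days: float, headroom: float = 1.5) -> float:
    """Disk needed to keep `retention_days` of data, padded by a safety factor."""
    return daily_gb * retention_days * headroom


if __name__ == "__main__":
    # Assumed workload: 2,000 events per second averaging 1 KB, kept for 3 days.
    daily = daily_ingest_gb(events_per_second=2_000, avg_event_bytes=1_000)
    print(f"Daily ingest: {daily:.1f} GB")                              # 172.8 GB
    print(f"Retention storage: {storage_needed_gb(daily, 3):.1f} GB")  # 777.6 GB
```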

Key Features:

- Web-based visual flow designer

- Real-time data flow monitoring

- Extensible processor architecture

- Data provenance tracking

- Cluster support for high availability

Pros:

- Completely free and open-source

- Intuitive visual interface

- Strong data lineage and provenance features

- Active community and regular updates

Cons:

- Requires technical expertise to deploy and maintain

- Limited built-in transformation capabilities (mitigated by extensibility)

- Can be resource-intensive

- Learning curve for flow-based programming model

Website: https://nifi.apache.org/

In conclusion, Apache NiFi is a powerful and flexible data integration solution. It is particularly suited to organisations with complex data flows and real-time processing requirements. Its open-source licence, visual programming model and robust features make it a valuable tool for Australian businesses seeking to modernise their data architectures and unlock the full potential of their data. Successful implementation requires technical expertise, but the cost savings, flexibility and community support make NiFi a worthy contender in the data integration landscape.

9. Pentaho Data Integration (Kettle)

Pentaho Data Integration (Kettle) is a powerful open-source ETL (Extract, Transform, Load) tool that serves businesses of all sizes. It particularly appeals to small and medium-sized organisations in Australia. It earns its place on this list through its flexibility, its robust transformation capabilities and its free community edition, which together make it an accessible entry point for organisations exploring data integration. Kettle provides a visual, code-free environment for building complex data pipelines. Users can connect to various data sources, transform data and load it into target systems. This makes it a strong contender for organisations seeking cost-effective options without sacrificing functionality.

One of Kettle’s standout features is its visual transformation designer, Spoon. Spoon provides a drag-and-drop interface for creating and managing complex data transformations without writing code. This approach significantly reduces the learning curve and allows even less technical users to build sophisticated pipelines. An extensive library of built-in transformation steps covers a wide range of tasks, from simple cleansing and validation to advanced aggregation and calculations. Organisations can therefore tailor their integration processes to specific business needs.
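In Spoon, a transformation is a chain of steps joined by hops, and rows stream from one step to the next. The Python generator pipeline below mirrors that shape for cleansing, validation and aggregation. It is a conceptual analogue written for illustration, not Kettle’s engine or its file format. The field names, the validation rule and the rejected-rows list are hypothetical.

```python
from collections import defaultdict
from typing import Any, Dict, Iterable, Iterator, List

Row = Dict[str, Any]


def cleanse(rows: Iterable[Row]) -> Iterator[Row]:
    """Trim whitespace and upper-case the state code (comparable to a string-operations step)."""
    for row in rows:
        yield {**row, "customer": row["customer"].strip(), "state": row["state"].strip().upper()}


def validate(rows: Iterable[Row], rejects: List[Row]) -> Iterator[Row]:
    """Pass rows with a positive numeric amount; divert the rest to an error list."""
    for row in rows:
        try:
            amount = float(row["amount"])
        except (TypeError, ValueError):
            rejects.append(row)
            continue
        if amount <= 0:
            rejects.append(row)
            continue
        yield {**row, "amount": amount}


def aggregate_by_state(rows: Iterable[Row]) -> Dict[str, float]:
    """Sum amounts per state (comparable to a group-by step)."""
    totals: Dict[str, float] = defaultdict(float)
    for row in rows:
        totals[row["state"]] += row["amount"]
    return dict(totals)


if __name__ == "__main__":
    source = [
        {"customer": " Acme ", "state": "nsw", "amount": "100.50"},
        {"customer": "Beta", "state": " vic", "amount": "abc"},
        {"customer": "Gamma", "state": "NSW", "amount": "49.50"},
        {"customer": "Delta", "state": "qld", "amount": "-5"},
    ]
    rejected: List[Row] = []
    # Steps are chained like hops: cleanse -> validate -> aggregate.
    totals = aggregate_by_state(validate(cleanse(source), rejected))
    print(totals)                             # {'NSW': 150.0}
    print([r["customer"] for r in rejected])  # ['Beta', 'Delta']
```

Generators keep rows flowing one at a time instead of loading the whole dataset at once, which loosely resembles how rows stream between steps.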

For Australian businesses seeking to modernise their legacy workflows, Kettle offers a viable pathway. Consider migrating data from outdated systems to a modern data warehouse or cloud platform. Kettle can handle such migrations because it supports databases, flat files, web services and big data platforms. For IT directors and CTOs responsible for systems integration, its cross-platform compatibility and robust transformation engine make it a valuable asset.

Beyond its technical capabilities, Kettle’s dual licensing model offers flexibility for organisations at different stages of growth. The community edition provides a cost-effective way to experiment with the tool and build proof-of-concept projects. As data volumes and integration needs grow, businesses can move to the enterprise edition for additional features such as vendor support, clustering capabilities and a wider range of connectors.

Enterprise edition pricing is not published and generally requires contacting Hitachi Vantara directly. The free community edition, however, removes the financial barrier to entry. That makes it attractive for smaller organisations, or for anyone wanting to test its capabilities before committing to the enterprise version. Technical requirements are modest: Kettle runs on standard operating systems with a Java Runtime Environment.

Compared with commercial tools such as Informatica PowerCenter or Talend, Kettle’s community edition may fall short on enterprise-grade features, scalability for extremely large datasets and frequency of updates. For medium-scale projects and organisations with constrained budgets, however, Kettle offers an excellent balance of functionality, ease of use and affordability. It can be particularly useful for automating business processes and supporting growth, both crucial for any Australian business looking to thrive in a competitive landscape.

For sales and marketing teams automating account-based strategies, Kettle can play a pivotal role in consolidating data from CRM systems, marketing automation platforms and social media. A unified view of customer data improves targeting and personalisation and ultimately drives better campaign performance. Operations managers focused on efficiency and productivity can use Kettle to automate data-driven workflows, freeing up time and resources.

C-level executives prioritising scalable growth and cost optimisation will find Kettle’s open-source licence and scalability appealing. Company owners and founders looking to scale can use Kettle’s data integration capabilities to gain insight into business performance, identify growth opportunities and make data-driven decisions.

Implementing Kettle effectively requires careful planning. Begin by defining a clear project scope and objectives. Identify the relevant data sources and target systems, and map out the necessary transformation steps. Use Spoon’s visual interface to design and test your data pipelines. For complex projects, consider engaging with the active Kettle community or seeking professional support from experienced consultants. Make sure your hardware meets the requirements of your expected data volumes, and monitor performance regularly to identify and address bottlenecks.

Kettle can struggle with truly massive datasets and lacks some of the cloud-native features of newer tools. Even so, its maturity, open-source licence and strong community support make it a reliable, cost-effective data integration solution for many Australian businesses. Users can design complex transformations visually without extensive coding. That makes it particularly attractive for organisations seeking to democratise access to data and empower business users. With its user-friendly interface and strong transformation capabilities, Pentaho Data Integration (Kettle) deserves its place among the top data integration solutions available today.

10. Matillion ETL

Matillion ETL earns its spot on this list through its cloud-native architecture and its focus on high-performance ETL within popular cloud data warehouses. It was designed specifically for cloud environments and streamlines building and orchestrating data pipelines. That makes it a compelling option for Australian businesses looking to modernise their data infrastructure and get full value from their cloud investments. It lets organisations connect, transform and load data efficiently, which supports data-driven decision-making and better business outcomes. The platform is particularly well suited to medium and large enterprises already committed to a cloud-first strategy and seeking to maximise the value of their cloud data warehouses.

Matillion ETL integrates tightly with leading cloud data warehouses such as Amazon Redshift, Google BigQuery and Snowflake. It runs transformations inside the warehouse, so they benefit from the warehouse’s scalability and processing power. Its visual interface simplifies ETL pipeline development, letting users build and manage workflows by drag and drop. This reduces the need for extensive hand-coding and lets less technical users contribute to data-driven initiatives. Pre-built connectors for a variety of cloud and SaaS applications let organisations quickly bring in data from diverse sources.

For Australian businesses navigating a rapidly evolving digital landscape, Matillion ETL offers a robust data integration option. Consider sales and marketing teams automating account-based strategies. By integrating data from CRM systems, marketing automation platforms and website analytics tools, Matillion ETL can create a unified view of the customer, enabling targeted campaigns and personalised messaging. Similarly, operations managers focused on efficiency and productivity can use Matillion to integrate data from operational systems. This provides timely insight into key performance indicators and supports data-driven optimisation.
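Matillion typically performs this kind of join inside the cloud warehouse, as SQL generated from its visual components. The Python sketch below illustrates the unified-customer-view idea conceptually. It merges CRM, marketing-automation and web-analytics records on a shared account ID. The source layouts and the `account_id` key are hypothetical. In practice this join would usually run as SQL in Redshift, BigQuery or Snowflake.

```python
from typing import Any, Dict, List

Account = Dict[str, Any]


def unify_accounts(crm: List[Account], marketing: List[Account], web: List[Account]) -> Dict[str, Account]:
    """Left-join marketing and web activity onto CRM accounts by account_id."""
    # The CRM is treated as the system of record: one entry per known account.
    unified = {a["account_id"]: {**a, "email_opens": 0, "web_sessions": 0} for a in crm}

    # Sum email opens per account; activity for accounts not in the CRM is ignored.
    for event in marketing:
        account = unified.get(event["account_id"])
        if account is not None:
            account["email_opens"] += event.get("opens", 0)

    # Count web sessions per account.
    for session in web:
        account = unified.get(session["account_id"])
        if account is not None:
            account["web_sessions"] += 1
    return unified


if __name__ == "__main__":
    crm = [{"account_id": "A1", "name": "Acme"}, {"account_id": "B2", "name": "Beta"}]
    marketing = [{"account_id": "A1", "opens": 3}, {"account_id": "A1", "opens": 2},
                 {"account_id": "Z9", "opens": 7}]
    web = [{"account_id": "A1"}, {"account_id": "B2"}, {"account_id": "B2"}]
    for account_id, view in unify_accounts(crm, marketing, web).items():
        print(account_id, view["name"], view["email_opens"], view["web_sessions"])
```

Ignoring activity for unknown accounts, such as `Z9` here, is a design choice. Some teams would instead route those rows to a review table so that missing CRM records are noticed.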

Technically, Matillion ETL is a pure cloud play. This brings significant advantages in scalability and flexibility, but it also brings limitations. On-premises deployments are not supported, which makes it less suitable for organisations not fully committed to the cloud. Its pricing, based on instance hours used, can be competitive for cloud-first organisations. Costs rise with usage, however, and need careful consideration and monitoring. Matillion ETL offers a rich feature set, including Git integration for version control and auto-scaling for performance. Some advanced features of more established enterprise platforms may still be missing.
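Cost scales with instance hours, so a simple projection supports the monitoring mentioned above. The hourly rate below is a made-up placeholder, not Matillion’s price. The sketch compares an always-on instance with one that runs only during a nightly batch window. It assumes the instance can be stopped outside that window and that billing stops with it. Underlying cloud infrastructure charges may be billed separately and are not modelled.

```python
HYPOTHETICAL_RATE_PER_HOUR = 3.00  # placeholder only, not a real Matillion price


def monthly_cost(rate_per_hour: float, hours_per_day: float, days: int = 30) -> float:
    """Cost of running one instance for `hours_per_day` hours each day."""
    if not 0 <= hours_per_day <= 24:
        raise ValueError("hours_per_day must be between 0 and 24")
    return rate_per_hour * hours_per_day * days


def scheduling_saving(rate_per_hour: float, batch_hours_per_day: float, days: int = 30) -> float:
    """Saving from stopping the instance outside the batch window versus running 24/7."""
    always_on = monthly_cost(rate_per_hour, 24, days)
    scheduled = monthly_cost(rate_per_hour, batch_hours_per_day, days)
    return always_on - scheduled


if __name__ == "__main__":
    rate = HYPOTHETICAL_RATE_PER_HOUR
    print(f"Always on: {monthly_cost(rate, 24):,.2f}")                  # 2,160.00
    print(f"4-hour batch window: {monthly_cost(rate, 4):,.2f}")         # 360.00
    print(f"Potential saving: {scheduling_saving(rate, 4):,.2f}")       # 1,800.00
```

Whatever the real rate, the ratio holds. A 4-hour daily window costs one sixth as much in instance hours as running around the clock. Scheduling is therefore worth checking before you scale up instance size.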

Implementing Matillion ETL typically begins with choosing a cloud instance size that matches anticipated data volumes and processing requirements. Pre-built connectors simplify connecting to data sources, and the visual drag-and-drop interface makes it easier to define transformations and build data pipelines. Thorough testing and validation are crucial in any implementation to ensure data accuracy and integrity.

Comparing Matillion ETL with similar solutions such as Informatica PowerCenter or Talend reveals key differences. Informatica and Talend offer broader platform support, including on-premises options. Matillion ETL instead concentrates on cloud-native architecture and cloud data warehouses. This specialisation can deliver strong performance and optimisation within cloud environments, which makes it a compelling choice for organisations with a cloud-first approach.

For C-level executives prioritising scalable growth and cost optimisation, Matillion ETL offers a compelling value proposition. Its cloud-native architecture aligns well with modern data strategies, letting businesses take advantage of the scalability and elasticity of the cloud. By streamlining data integration and supporting data-driven insight, Matillion ETL can improve operational efficiency, decision-making and business outcomes. For company owners and founders looking to scale, it provides a robust foundation for data integration as the business grows. More information on Matillion ETL can be found on their website: https://www.matillion.com/

Data Integration Solutions Feature Comparison

| Solution | Core Features | User Experience / Quality Metrics | Value Proposition | Target Audience | Price Points / Notes |
|---|---|---|---|---|---|
| Osher Digital | Custom AI agents, sales & process automation, system integration | 30% productivity ↑, 90% manual work ↓, 30-day satisfaction guarantee | Tailored vendor-agnostic solutions, end-to-end support | Medium to large enterprises | Custom pricing, consultation required |
| Informatica PowerCenter | Visual ETL, 500+ data sources, batch & real-time | Scalable, robust, strong governance | Enterprise-grade data integration platform | Large enterprises | High licensing and implementation costs |
| Talend Data Integration | Open-source/commercial, drag-and-drop, big data support | User-friendly, strong community support | Cost-effective with cloud and big data integration | Small to large businesses | Open-source free; enterprise paid |
| Microsoft Azure Data Factory | Cloud-native ETL/ELT, hybrid integration, auto-scaling | Pay-as-you-use, seamless Azure integration | Managed PaaS with strong security, hybrid-ready | Cloud-focused organisations | Usage-based pricing, costs can scale |
| IBM InfoSphere DataStage | Parallel processing, graphical design, security | High performance, mature platform | Enterprise-class ETL with IBM ecosystem integration | Large enterprises | High licence and maintenance fees |
| Fivetran | Automated ELT, pre-built connectors, schema handling | Minimal setup, high reliability | Fully managed zero-maintenance data pipelines | SaaS-heavy cloud users | Higher costs for large data volumes |
| Stitch Data | Simple setup, APIs, transparent pricing | Easy to use, reliable data replication | Developer-friendly ETL, predictable costs | Small to mid-sized teams | Row-based pricing; costs grow with volume |
| Apache NiFi | Visual flow designer, data provenance, cluster support | Open-source, strong data lineage | Free with extensible architecture | Tech-savvy organisations | Free, requires technical resources |
| Pentaho Data Integration | Visual transformation, big data support | User-friendly, strong transformations | Open-source with enterprise options | Small to medium businesses | Free community; enterprise paid |
| Matillion ETL | Cloud-native, drag-and-drop, Git integration | Easy interface, optimised for cloud DW | Focused on cloud data warehouses | Cloud-first companies | Pricing based on instance hours |

Choosing the Right Fit: Your Data Integration Journey

Selecting the right data integration solution from the many options available can feel overwhelming. This listicle has explored ten popular platforms. They range from robust enterprise-grade solutions such as Informatica PowerCenter and IBM InfoSphere DataStage to more agile options such as Fivetran and Stitch Data. We’ve also looked at cloud-native offerings such as Microsoft Azure Data Factory and at versatile open-source tools such as Apache NiFi and Pentaho Data Integration (Kettle). Each tool brings unique strengths and suits specific needs, so careful evaluation is crucial.

Key takeaways include planning for scalability, matching your choice to the technical expertise already in your organisation, and budgeting properly for implementation and ongoing maintenance. Consider your data volume, the complexity of your integration needs and the level of automation required. For medium to large enterprises in Australia looking to modernise legacy workflows, platforms such as Matillion ETL and Talend Data Integration can offer significant advantages. If your focus is streamlining customer data for better customer experiences, ECORN’s guide Top Customer Data Integration Solutions for Better Insights offers a comprehensive overview of streamlining data management to improve customer experience.
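A weighted scoring matrix is one way to turn these criteria into a shortlist. The Python sketch below scores candidates on weighted criteria. The criteria, weights and 1-to-5 scores are placeholders for your own assessment. The options are deliberately anonymised and are not ratings of the tools in this list.

```python
from typing import Dict, List, Mapping, Tuple

# Hypothetical criteria weights reflecting one organisation's priorities (sum to 1.0).
WEIGHTS: Dict[str, float] = {
    "scalability": 0.30,
    "team_skills_fit": 0.25,
    "total_cost": 0.25,
    "automation": 0.20,
}
assert abs(sum(WEIGHTS.values()) - 1.0) < 1e-9, "weights should sum to 1"


def weighted_score(scores: Mapping[str, float], weights: Mapping[str, float] = WEIGHTS) -> float:
    """Combine 1-5 criterion scores into one weighted score."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weights[c] * scores[c] for c in weights)


def rank(candidates: Mapping[str, Mapping[str, float]],
         weights: Mapping[str, float] = WEIGHTS) -> List[Tuple[str, float]]:
    """Return (candidate, score) pairs, highest score first."""
    results = [(name, round(weighted_score(s, weights), 2)) for name, s in candidates.items()]
    return sorted(results, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Placeholder scores for anonymised shortlist options, not ratings of real products.
    shortlist = {
        "Option A": {"scalability": 5, "team_skills_fit": 2, "total_cost": 2, "automation": 4},
        "Option B": {"scalability": 3, "team_skills_fit": 5, "total_cost": 4, "automation": 3},
        "Option C": {"scalability": 4, "team_skills_fit": 3, "total_cost": 5, "automation": 2},
    }
    for name, score in rank(shortlist):
        print(f"{name}: {score}")
```

Scoring forces the trade-offs behind these takeaways into the open. Changing the weights can reorder the shortlist, for example by raising `total_cost` for a budget-constrained team. That makes the weights worth agreeing with stakeholders before any scores are filled in.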

For organisations prioritising scalable growth and cost optimisation, choosing a data integration solution is a strategic investment as much as a technical decision. By thoroughly assessing your requirements and researching potential solutions, you can give your business the data-driven insights it needs to thrive in today’s competitive landscape.

Successfully navigating your data integration journey requires clear objectives and a well-defined roadmap. To streamline your data integration strategy and see how a tailored solution can transform your business operations, explore the capabilities of Osher Digital. Osher Digital provides expert guidance and implementation services for data integration solutions, helping you unlock the full potential of your data.

