8 Essential Data Validation Techniques for 2025

Discover 8 essential data validation techniques to improve data quality. Learn practical tips for implementation in enterprise workflows across Australia.

The quality of your information assets directly dictates the success of your operations, from AI-powered insights to daily business process automation. Poor data quality creates a ripple effect of flawed decision-making, operational inefficiencies, and ultimately, a compromised bottom line. It is not just an IT problem; it is a core business challenge that impacts every department. The solution lies in robust data validation, a systematic approach to ensuring data is accurate, consistent, and fit for purpose before it enters your systems.

Data integrity also has a parallel concern: privacy by design principles, which ensure that validated data is handled responsibly as well. This article explores eight data validation techniques and provides a practical blueprint for enterprise workflows. We cover how each method works, where it applies, and actionable tips for implementation, so your organisation can build a foundation of trust in its data. From simple checks to sophisticated statistical analysis, these techniques are essential for any business aiming to scale effectively and maintain a competitive edge.

1. Range Validation

Range validation is a fundamental data validation technique that confirms whether numerical, date, or time-based data fits within a predefined, acceptable spectrum. It operates by setting minimum and maximum constraints, acting as a crucial first line of defence against erroneous data entry. This method is essential for maintaining the integrity and quality of datasets where values must adhere to logical or physical limits.

By enforcing these boundaries at the point of input, organisations can prevent illogical data from contaminating their systems. For instance, a system that accepts an age of 200 or a negative salary figure is fundamentally flawed. Range validation is one of the most effective and straightforward data validation techniques to implement, delivering immediate improvements in data reliability.

Practical Implementation and Use Cases

This technique is widely applicable across various enterprise workflows. In financial applications, it ensures stock prices or transaction amounts fall within plausible market limits, preventing costly errors. For HR management systems, defining salary bands for specific job roles guarantees that offers are consistent and within budget.

Another powerful example is in IoT and industrial settings. A sensor monitoring manufacturing equipment might have an operational temperature range. Any reading outside this range could signify a critical failure, triggering an immediate alert.

Key Insight: Range validation isn’t just about rejecting bad data; it’s about enforcing business rules and operational logic directly within the data layer, creating a more intelligent and self-regulating system.

Actionable Tips for Implementation

- Establish Realistic Boundaries: Set pragmatic minimum and maximum values. While an age range of 0 to 150 is broad, a payroll system might require a more specific range of 18 to 70 to align with workforce regulations and retirement policies.

- Provide Clear Error Messages: When validation fails, the user feedback should be precise. Instead of a generic “Invalid Input” message, state, “Error: The salary must be between $55,000 and $75,000.”

- Implement Warning Levels: For values approaching the boundary, consider implementing a soft warning. This can alert users to potential issues without outright rejecting the data, which is useful for monitoring trends or anomalies.

- Regularly Review Parameters: Business needs and environmental conditions change. The acceptable price range for a product or the operational tolerance for a machine should be reviewed and updated periodically to remain relevant.
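As a minimal sketch of the tips above, the following Python function combines a hard range check with an optional soft warning near the boundaries. The names `RangeResult`, `check_range`, and `warn_margin` are hypothetical, and the salary band simply reuses the figures from the error-message example; real limits would come from your own business rules.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RangeResult:
    ok: bool                       # False means the value is rejected
    warning: Optional[str] = None  # soft warning for near-boundary values
    error: Optional[str] = None    # precise message for rejected values


def check_range(name: str, value: float, minimum: float, maximum: float,
                warn_margin: float = 0.0) -> RangeResult:
    """Reject values outside [minimum, maximum]; warn when close to an edge."""
    if value < minimum or value > maximum:
        return RangeResult(
            ok=False,
            error=f"Error: The {name} must be between {minimum:,} and {maximum:,}.",
        )
    if value - minimum < warn_margin or maximum - value < warn_margin:
        return RangeResult(ok=True, warning=f"The {name} is close to its allowed limit.")
    return RangeResult(ok=True)


# Illustrative salary band (assumed values, not a recommendation).
print(check_range("salary", 80_000, 55_000, 75_000).error)
print(check_range("salary", 74_500, 55_000, 75_000, warn_margin=1_000).warning)
```

Keeping the limits as parameters rather than constants makes the periodic review of boundaries a configuration change instead of a code change.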

2. Format Validation (Pattern Matching)

Format validation, often implemented through pattern matching, is a critical data validation technique that verifies whether data adheres to a specific structural rule. This method primarily uses regular expressions (regex) to define a required pattern, making it indispensable for validating structured text data like email addresses, phone numbers, postcodes, and national identifiers. It ensures that data is not just present, but correctly structured for processing, storage, and communication.

This technique is essential for maintaining data consistency and usability, particularly in systems that rely on standardised data formats for automation and integration. By rejecting incorrectly formatted data at the point of entry, organisations can prevent downstream application errors, improve user experience, and ensure that collected information is reliable and functional. For example, a CRM system filled with invalid email formats is useless for marketing automation.

Practical Implementation and Use Cases

Format validation is ubiquitous in digital systems. E-commerce platforms use it to check that credit card numbers match the expected format for Visa, Mastercard, or AMEX before sending them for payment processing. User registration forms rely on it to confirm that an email address contains an “@” symbol and a domain, a necessary first check before any message can be delivered.

In government and healthcare systems, this technique is vital for validating national identifiers like Social Security Numbers or Medicare numbers, which have strict formatting rules. Similarly, logistics and CRM platforms use it to standardise phone numbers and postcodes, ensuring that addresses are correct for shipping and that international contact numbers can be dialled correctly by automated systems.

Key Insight: Format validation acts as a structural gatekeeper. It doesn’t verify the existence of an email address, only its structural correctness, which is a crucial distinction. It’s the first step in a multi-layered validation process that ensures both form and function.

Actionable Tips for Implementation

- Use Well-Tested Libraries: Instead of writing complex regex from scratch, leverage established libraries like Google’s libphonenumber for phone numbers or Apache Commons Validator. These libraries are rigorously tested and account for numerous edge cases and international variations.

- Account for International Variations: A postcode format for Australia is different from one for the United States or the United Kingdom. Ensure your validation logic is localised or flexible enough to handle the specific formats relevant to your user base.

- Provide Clear Formatting Guides: In user interfaces, display an example of the expected format next to the input field (e.g., “Date of Birth (DD/MM/YYYY)”). This proactive guidance significantly reduces user entry errors and frustration.

- Test Patterns Against Edge Cases: Thoroughly test your regex patterns with both valid and invalid data, including common mistakes and unusual but plausible inputs. This ensures your validation is robust and does not accidentally reject legitimate data.
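To make pattern matching concrete, here is a small sketch using Python's built-in `re` module. The three patterns are deliberately simple illustrations (a loose email shape, a four-digit Australian postcode, and a DD/MM/YYYY date), and the names `PATTERNS` and `matches_format` are hypothetical. As the tips suggest, a well-tested library is usually the better choice in production.

```python
import re

# Simplified, illustrative patterns. They check structure only.
PATTERNS = {
    "email": re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+"),
    "au_postcode": re.compile(r"[0-9]{4}"),
    "date_dmy": re.compile(r"[0-9]{2}/[0-9]{2}/[0-9]{4}"),
}


def matches_format(kind: str, value: str) -> bool:
    """Return True when the whole value matches the named pattern."""
    return PATTERNS[kind].fullmatch(value.strip()) is not None


# Edge cases worth testing: valid, invalid, and structurally valid but wrong.
for kind, sample in [
    ("email", "jane@example.com"),
    ("email", "jane.example.com"),
    ("au_postcode", "2000"),
    ("au_postcode", "200"),
    ("date_dmy", "99/99/9999"),  # passes: the pattern checks shape, not meaning
]:
    print(kind, repr(sample), matches_format(kind, sample))
```

The last sample shows why format validation is only the first layer: a date of 99/99/9999 has the right shape but would still need a type or range check to be rejected.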

3. Type Validation

Type validation is one of the most fundamental data validation techniques, ensuring that a piece of data conforms to its expected data type. It verifies that a value intended to be a number is indeed a number (integer or float), a string is text, and a date is a valid date. This check is crucial for preventing data corruption and runtime errors that occur when an application tries to process a value of an unexpected type.

Without type validation, a system might attempt to perform a mathematical calculation on a string of text, leading to application crashes or unpredictable behaviour. By rigorously enforcing data types at the point of entry or processing, organisations ensure that their data is consistent, reliable, and can be used correctly by downstream systems and analytics platforms. It forms the bedrock of data integrity in any well-designed system.

Practical Implementation and Use Cases

This technique is foundational in software development and data management. In database design, schema enforcement in systems like PostgreSQL or SQL Server strictly defines columns as INTEGER, VARCHAR, BOOLEAN, or TIMESTAMP. Any attempt to insert data of the wrong type is rejected, protecting the database’s structural integrity from the ground up.

In modern web development, type validation is essential for API security and reliability. A REST API expecting a JSON payload with a numeric userID can use a schema to validate incoming requests, immediately rejecting any that provide the userID as a string. Similarly, data pipelines built for ETL (Extract, Transform, Load) processes rely on type checking, often using tools like Apache Avro or JSON Schema, to ensure data flowing from different sources is correctly structured before it lands in a data warehouse.

Key Insight: Type validation acts as a contract between different parts of a system. It guarantees that data passed from one component to another is in a usable format, which dramatically reduces integration errors and simplifies debugging.

Actionable Tips for Implementation

- Define Types Early: Establish and document the expected data types for all fields during the initial design and development phase. This proactive approach prevents ambiguity and rework later on.

- Use Schema Validation Libraries: Leverage established libraries and frameworks like JSON Schema for APIs, Zod for TypeScript applications, or Pydantic for Python. These tools provide a robust, declarative way to enforce complex type rules.

- Implement Graceful Type Conversion: Where appropriate, allow for sensible type conversion. For example, automatically converting a user-input string “500” to the number 500 can improve user experience, but it should be done deliberately and with clear rules.

- Consider Nullable vs. Non-nullable: Clearly distinguish between fields that can be empty (nullable) and those that are mandatory (non-nullable). This is a critical aspect of type validation that prevents errors related to missing data.
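The conversion and nullability tips can be sketched in plain Python as follows. The helper name `to_int` and its `nullable` flag are hypothetical. The rule chosen here (convert only clean whole-number strings, reject everything else) is one possible policy, not the only reasonable one.

```python
from typing import Any, Optional


def to_int(value: Any, *, nullable: bool = False) -> Optional[int]:
    """Return value as an int, converting only clean whole-number strings."""
    if value is None:
        if nullable:
            return None
        raise TypeError("value is required and cannot be null")
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(value, bool):
        raise TypeError("expected an integer, got a boolean")
    if isinstance(value, int):
        return value
    if isinstance(value, str):
        text = value.strip()
        digits = text[1:] if text[:1] in "+-" else text
        if digits.isascii() and digits.isdigit():
            return int(text)  # deliberate conversion, e.g. "500" -> 500
    raise TypeError(f"expected an integer, got {value!r}")


print(to_int("500"))                # string input converted deliberately
print(to_int(None, nullable=True))  # allowed only for nullable fields
```

Libraries such as Pydantic or Zod express the same contract declaratively; the sketch only shows the decisions such a schema has to encode.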

4. Constraint Validation

Constraint validation is a sophisticated data validation technique that enforces complex business rules and data integrity requirements beyond simple formats or ranges. It operates by applying predefined constraints such as uniqueness, referential integrity (foreign keys), and custom business logic. This method is fundamental for ensuring that data not only has the correct form but also aligns with the organisation’s operational policies and maintains logical consistency across different data sets.

By implementing these rules directly at the data layer, businesses can maintain the integrity and reliability of their information systems. For example, a system that allows a duplicate customer email address or permits an order to be assigned to a non-existent customer is inherently flawed. Constraint validation acts as the central nervous system for data governance, ensuring that all data interactions adhere to established business protocols.

Practical Implementation and Use Cases

This technique is crucial in systems where data relationships and business rules are paramount. In user management systems, enforcing a unique constraint on email addresses or usernames is a standard practice to prevent duplicate accounts and security issues. In relational databases, foreign key constraints are vital for maintaining referential integrity, ensuring that a record in one table accurately links to a valid record in another, for instance, connecting an order to a valid customer profile.

E-commerce platforms rely heavily on constraint validation. Custom rules can prevent inventory levels from falling below zero or stop a sale if the total exceeds a customer’s predefined budget limit. These are not simple range checks; they are dynamic rules that often involve multiple data points, making constraint validation one of the most powerful data validation techniques for complex enterprise environments.

Key Insight: Constraint validation transforms a database from a passive data repository into an active participant in enforcing business logic, ensuring that data relationships and rules are maintained automatically and consistently.

Actionable Tips for Implementation

- Document All Business Rules: Before implementation, clearly and comprehensively document every business rule. This repository should serve as the single source of truth for developers and data architects, ensuring everyone understands the logic behind each constraint.

- Implement at Multiple Levels: For robust validation, apply constraints at various layers of your application architecture. While the database is the ultimate gatekeeper, implementing checks in the user interface (UI) and API provides faster feedback and a better user experience.

- Use Descriptive Error Messages: When a constraint is violated, provide a clear, context-specific error message. Instead of a generic “Constraint Violation”, a message like “Error: This email address is already registered. Please use a different email or log in.” is far more helpful.

- Plan for Constraint Modifications: Business rules evolve. Establish a clear, controlled procedure for updating or removing constraints. This process should include impact analysis, thorough testing, and communication to all stakeholders to prevent unintended consequences on system operations.
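The sketch below shows uniqueness, referential integrity, and a custom rule enforced at the database layer, using Python's built-in `sqlite3` module with an in-memory database. The table and column names are invented for illustration, and the helper `try_insert` is hypothetical. Note that SQLite only enforces foreign keys after `PRAGMA foreign_keys = ON` is issued on the connection.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite leaves FK enforcement off by default
conn.executescript("""
    CREATE TABLE customers (
        id    INTEGER PRIMARY KEY,
        email TEXT NOT NULL UNIQUE                      -- no duplicate accounts
    );
    CREATE TABLE orders (
        id          INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(id)  -- must exist
    );
    CREATE TABLE products (
        sku   TEXT PRIMARY KEY,
        stock INTEGER NOT NULL CHECK (stock >= 0)       -- never below zero
    );
""")


def try_insert(sql: str, params: tuple) -> str:
    """Run one insert; report a constraint violation instead of raising."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(sql, params)
        return "ok"
    except sqlite3.IntegrityError as exc:
        return f"rejected: {exc}"


print(try_insert("INSERT INTO customers (id, email) VALUES (?, ?)", (1, "jane@example.com")))
print(try_insert("INSERT INTO customers (id, email) VALUES (?, ?)", (2, "jane@example.com")))
print(try_insert("INSERT INTO orders (customer_id) VALUES (?)", (99,)))
print(try_insert("INSERT INTO products (sku, stock) VALUES (?, ?)", ("W-1", -5)))
```

In an application, the raw database message would normally be translated into a descriptive, user-facing error such as the "already registered" example above.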

5. Cross-Field Validation

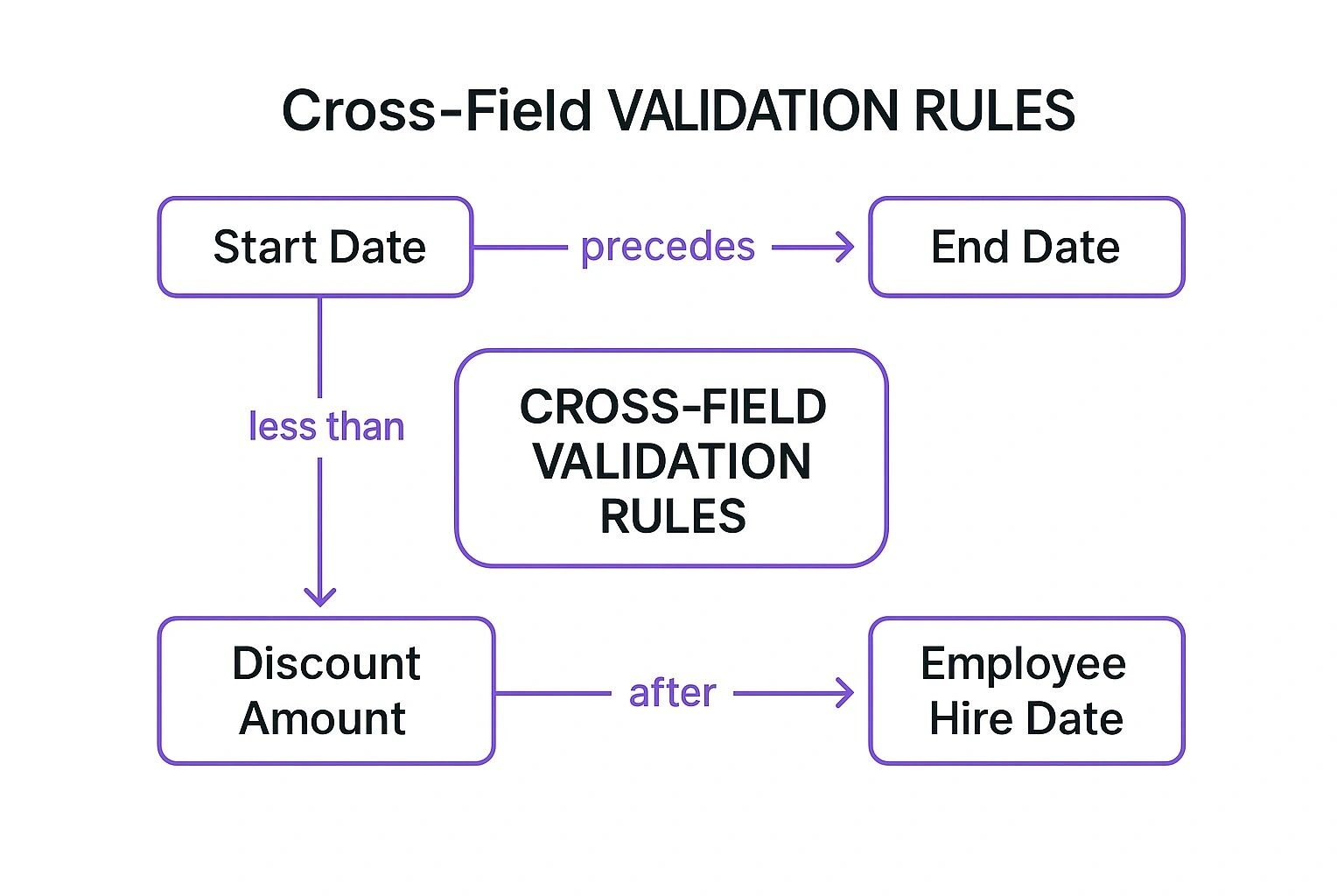

Cross-field validation elevates data integrity by verifying relationships and dependencies between multiple data fields, ensuring logical consistency across an entire record or form. Unlike single-field techniques, it evaluates complex business rules that span interconnected data points. This approach is critical for preventing logical paradoxes in data, such as a project’s end date preceding its start date.

By confirming that related data elements make sense together, organisations can enforce sophisticated business logic directly at the point of entry. For example, in an e-commerce system, a rule preventing a discount amount from exceeding the total order price is a classic cross-field check. Implementing these relational data validation techniques is essential for building robust, error-resistant applications and maintaining a high-quality, reliable dataset.

The concept map below illustrates common cross-field validation rules, showing how different data fields are logically linked.

This visualisation highlights how the validity of one field, like ‘Start Date’, is directly dependent on the value of another, like ‘End Date’, to maintain logical business sense.

Practical Implementation and Use Cases

This technique is indispensable in any system where data inputs are contextually linked. In HR systems, it guarantees an employee’s hire date is logically after their birth date, preventing impossible records. For logistics and shipping, it can validate that a selected shipping method is compatible with the destination country or that a postcode aligns with the entered state.

In financial applications, cross-field validation can ensure that a requested loan amount does not exceed a pre-calculated borrowing limit based on the applicant’s income and credit score. These checks are crucial for automating decisions and minimising risk by enforcing company policies at a granular level.

Key Insight: Cross-field validation transforms a simple data entry form into an intelligent interface that understands and enforces the underlying business processes, significantly reducing downstream data correction efforts.

Actionable Tips for Implementation

- Map Field Dependencies: Before writing any code, visually map the relationships between fields. Understanding these dependencies is a core part of business process mapping and ensures all logical rules are identified. You can learn more about business process mapping techniques on osher.com.au.

- Provide Contextual Error Messages: Instead of a vague “Invalid relationship” error, be specific. Use messages like, “Error: The end date must occur after the start date.” This guides the user to the exact source of the problem.

- Define a Clear Validation Order: When multiple fields are interdependent, the order of validation matters. Validate fields in a logical sequence to avoid conflicting error messages and confusing user experiences.

- Consider User Workflow: Trigger cross-field validation at an appropriate time, such as when the user moves to the next field or upon form submission, to avoid interrupting their flow unnecessarily.
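A minimal sketch of two of the cross-field rules mentioned in this section (end date after start date, discount not exceeding the order total) might look like this in Python. The function name `validate_record` and the field names are assumptions made for the example. Rules run in a fixed order so the messages come back in a predictable sequence.

```python
from datetime import date
from typing import Any, Dict, List


def validate_record(record: Dict[str, Any]) -> List[str]:
    """Check relationships between fields and return contextual messages."""
    errors: List[str] = []
    # Rule 1: dates must be in a logical order.
    if record["end_date"] <= record["start_date"]:
        errors.append("Error: The end date must occur after the start date.")
    # Rule 2: a discount can never be larger than the order it applies to.
    if record["discount"] > record["order_total"]:
        errors.append("Error: The discount cannot exceed the order total.")
    return errors


record = {
    "start_date": date(2025, 3, 10),
    "end_date": date(2025, 3, 1),
    "order_total": 120.0,
    "discount": 150.0,
}
for message in validate_record(record):
    print(message)
```

Returning a list rather than raising on the first failure lets a form show every problem at submission time, which fits the workflow tip above.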

6. Completeness Validation

Completeness validation is a critical data validation technique that checks whether all required data fields are present and populated. It targets missing values, null entries, and incomplete records, acting as a gatekeeper to ensure that datasets are whole and usable. This method is fundamental for maintaining data quality and enabling dependent business processes to function without interruption.

An incomplete record, such as a customer sign-up missing a contact email or a product listing without a price, can halt workflows and compromise analytics. By enforcing that all mandatory fields contain data at the point of entry, organisations can guarantee the basic utility of their information. This makes completeness validation an indispensable tool for building reliable and actionable datasets.

Practical Implementation and Use Cases

This technique is essential across countless enterprise functions. In customer relationship management (CRM) systems, it ensures every new lead record includes a name, company, and contact details, making sales follow-ups possible. For financial compliance, it guarantees that loan applications contain all necessary documentation and financial statements before being submitted for review.

Another key example is in e-commerce. A product cannot be listed for sale without essential details like a name, SKU, price, and inventory count. Completeness validation ensures the product catalogue is functional and provides a satisfactory customer experience. Similarly, healthcare systems rely on it to ensure patient records have all vital information before treatment or billing can proceed.

Key Insight: Completeness validation is more than a simple check for empty fields; it is the process of defining the minimum viable record for a specific business purpose, ensuring that every data entry is immediately valuable and operational.

Actionable Tips for Implementation

- Differentiate Required vs. Optional: Clearly distinguish between fields that are absolutely mandatory and those that are “nice to have”. Overloading forms with required fields can lead to user frustration and poor-quality data entry as people rush to fill them.

- Provide Clear Visual Cues: Use visual indicators, such as asterisks (*) and descriptive text, to clearly mark which fields are required. This manages user expectations and reduces the likelihood of submission errors.

- Implement Progressive Collection: For complex processes like user onboarding, consider collecting information in stages. Ask for the most critical data first to create the record, then prompt for additional details later. This approach improves completion rates.

- Allow Partial Saves with Tracking: In scenarios involving long forms, such as applications or detailed reports, allow users to save a partial record. Combine this with a completion tracker or dashboard that clearly shows what information is still missing.
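The required-field check and the completion tracker described above can be sketched as follows. The required fields reuse the product-listing example (name, SKU, price, inventory count); the names `REQUIRED_FIELDS`, `missing_fields`, and `completion_ratio` are hypothetical.

```python
from typing import Any, Dict, List, Sequence

# Assumed "minimum viable record" for a product listing.
REQUIRED_FIELDS = ("name", "sku", "price", "inventory_count")


def missing_fields(record: Dict[str, Any],
                   required: Sequence[str] = REQUIRED_FIELDS) -> List[str]:
    """List required fields that are absent, null, or blank strings."""
    missing = []
    for field in required:
        value = record.get(field)
        if value is None or (isinstance(value, str) and not value.strip()):
            missing.append(field)
    return missing


def completion_ratio(record: Dict[str, Any],
                     required: Sequence[str] = REQUIRED_FIELDS) -> float:
    """Share of required fields that are populated, for a progress tracker."""
    return 1 - len(missing_fields(record, required)) / len(required)


draft = {"name": "Widget", "sku": "  ", "price": 0}
print(missing_fields(draft))
print(completion_ratio(draft))
```

A price of 0 counts as present here, because zero is a real value rather than a missing one; only `None` and blank strings are treated as incomplete.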

7. Statistical Validation

Statistical validation is a sophisticated data validation technique that employs statistical methods to identify outliers, anomalies, and patterns that deviate from expected norms. Rather than relying on fixed rules, it leverages descriptive statistics, probability distributions, and anomaly detection algorithms to assess data quality on a more dynamic and contextual basis. It is a powerful method for flagging subtle errors that other techniques might miss.

Pioneered by figures like W. Edwards Deming and John Tukey, this approach moves beyond simple checks to understand the underlying behaviour of a dataset. By establishing a statistical baseline, organisations can automatically detect data points that are improbable, even if they fall within a technically valid range. This makes it an indispensable tool for maintaining high standards of data integrity in complex systems.

Practical Implementation and Use Cases

This technique is crucial in scenarios where data quality is defined by consistency and predictable patterns. In finance, statistical validation is at the core of fraud detection systems, where z-scores or other models can identify transactions that are highly unusual for a specific account, signalling potential unauthorised activity.

In manufacturing, statistical process control (SPC) charts are used to monitor production lines. Any measurement that falls outside statistically defined control limits, even if within engineering tolerances, can indicate a process problem that needs immediate attention. Similarly, marketing teams use it to detect anomalies in website traffic or campaign performance, allowing them to distinguish genuine trends from bot activity or tracking errors. Learn more about how this works in automated data processing.

Key Insight: Statistical validation excels at finding the “unknown unknowns” in your data. It doesn’t just check if data is correct; it checks if data is believable based on historical patterns and expected distributions.

Actionable Tips for Implementation

- Choose Appropriate Methods: Select statistical techniques that suit your data. Use standard deviation and z-scores for normally distributed data, but consider methods like the Interquartile Range (IQR) for skewed distributions.

- Establish a Reliable Baseline: Before deploying anomaly detection, collect enough high-quality historical data to establish a stable and reliable baseline pattern. Your model is only as good as the data it is trained on.

- Combine Multiple Techniques: For robust validation, layer several statistical tests. Combining a moving average with standard deviation analysis can help differentiate between a gradual trend and a sudden, anomalous spike.

- Regularly Update Models: Data patterns evolve over time. Periodically retrain your statistical models with new data so they remain accurate and relevant, which limits model drift and reduces false positives.
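As a rough illustration of the first two tips, the sketch below scores a new value against a historical baseline with a z-score and flags outliers in a batch with the IQR rule, using only Python's `statistics` module. The function names, the sample figures, and the conventional cut-offs (3 standard deviations, 1.5 times the IQR) are assumptions for the example and should be tuned to your own data.

```python
import statistics
from typing import List, Sequence


def z_score(value: float, baseline: Sequence[float]) -> float:
    """Distance of value from the baseline mean, in sample standard deviations."""
    mean = statistics.fmean(baseline)
    spread = statistics.stdev(baseline)
    if spread == 0:
        raise ValueError("baseline has no variation; z-score is undefined")
    return (value - mean) / spread


def iqr_outliers(values: Sequence[float], k: float = 1.5) -> List[float]:
    """Values outside [Q1 - k*IQR, Q3 + k*IQR]; less sensitive to skew."""
    q1, _median, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


# Hypothetical transaction amounts for one account.
baseline = [42, 45, 39, 41, 44, 40, 43, 46, 38]
print(abs(z_score(950, baseline)) > 3)  # unusual for this account
print(abs(z_score(44, baseline)) > 3)   # within normal variation
print(iqr_outliers(baseline + [950]))
```

Scoring new values against a separate baseline, rather than against a batch that already contains the anomaly, avoids the outlier inflating the mean and standard deviation and hiding itself.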

8. Data Profiling and Quality Assessment

Data profiling is a comprehensive validation technique that involves systematically examining a dataset to understand its structure, content, and overall quality. Instead of validating individual entries against a single rule, it creates detailed metadata about the data’s characteristics, identifying quality issues like inconsistencies, outliers, and null values across the entire dataset. This analytical approach is foundational for any serious data governance or migration project.

By creating a statistical summary and informational overview of the data, organisations gain a deep understanding of its health and reliability. This insight is crucial before undertaking major initiatives like a system migration or implementing a new analytics platform. To truly ensure your data is flawless and fit for purpose, comprehensive approaches like financial data quality management are essential. This method provides the diagnostic intelligence needed to prioritise and execute data cleansing efforts effectively.

Practical Implementation and Use Cases

This technique is indispensable for large-scale enterprise projects. When preparing for a CRM migration, profiling the existing customer database can reveal hidden issues like duplicate records or incomplete addresses that would otherwise derail the project. In the financial sector, it’s used for regulatory compliance to assess the quality of transactional data and ensure it meets stringent reporting standards.

Another key use case is in preparing datasets for research or machine learning. Profiling healthcare data can identify biases or gaps that could skew analytical outcomes, ensuring the integrity of the research. Similarly, an e-commerce business might profile its product catalogue to standardise attributes and improve searchability, directly impacting customer experience and sales. The insights gained are also vital for refining data integration best practices.

Key Insight: Data profiling moves beyond simple validation; it’s a diagnostic process that reveals the ‘why’ behind data quality issues, enabling targeted, strategic remediation rather than superficial fixes.

Actionable Tips for Implementation

- Prioritise Critical Datasets: Begin by profiling your most valuable data assets, such as customer, product, or financial records. Focus on the critical data fields within these sets that have the highest impact on business operations.

- Establish Quality Metrics: Before you start, define what “good” data looks like. Set clear metrics and thresholds for completeness, uniqueness, consistency, and timeliness to measure against.

- Automate for Regular Monitoring: Manual profiling is not scalable. Use specialised tools to automate the process and schedule regular runs to monitor data quality over time and catch new issues as they arise.

- Visualise and Share Results: Create data quality dashboards to present profiling findings to business stakeholders. Visualisations make complex quality issues easier to understand and drive cross-departmental action.
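Dedicated profiling tools do far more than this, but a minimal sketch of the idea, assuming records are held as a list of dictionaries, could compute completeness, distinct counts, and duplicate counts per column. The function name `profile` and the sample customer rows are invented for illustration.

```python
from collections import Counter
from typing import Any, Dict, List, Sequence


def profile(rows: List[Dict[str, Any]], columns: Sequence[str]) -> Dict[str, Dict[str, Any]]:
    """Summarise each column: share populated, distinct values, repeated values."""
    total = len(rows)
    report: Dict[str, Dict[str, Any]] = {}
    for column in columns:
        values = [row.get(column) for row in rows]
        present = [v for v in values if v not in (None, "")]
        counts = Counter(present)
        report[column] = {
            "completeness": len(present) / total if total else 0.0,
            "distinct": len(counts),
            # Extra occurrences beyond the first of each repeated value.
            "duplicates": sum(c - 1 for c in counts.values() if c > 1),
        }
    return report


customers = [
    {"email": "a@example.com", "postcode": "2000"},
    {"email": "a@example.com", "postcode": ""},
    {"email": None, "postcode": "3000"},
    {"email": "b@example.com", "postcode": "2000"},
]
for column, stats in profile(customers, ["email", "postcode"]).items():
    print(column, stats)
```

Metrics like these can be compared against the thresholds you defined up front and fed into a scheduled job or dashboard.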

Data Validation Techniques Comparison

| Validation Type | Implementation Complexity | Resource Requirements | Expected Outcomes | Ideal Use Cases | Key Advantages |
|---|---|---|---|---|---|
| Range Validation | Low | Low | Prevents out-of-bound values | Numerical, date, or time range checks | Simple, fast, real-time feedback |
| Format Validation (Pattern Matching) | Medium | Medium | Ensures data matches structural patterns | Emails, phone numbers, IDs, formatted text | Accurate for structured data, flexible pattern support |
| Type Validation | Low to Medium | Low | Validates data type correctness | Strongly-typed systems, API and database inputs | Prevents type errors, foundational for validation |
| Constraint Validation | Medium to High | Medium to High | Enforces business rules and data integrity | Business rules, relational databases | Automatic rule enforcement, complex scenario support |
| Cross-Field Validation | High | Medium to High | Validates logical consistency across multiple fields | Complex business logic spanning fields | Ensures multi-field consistency, advanced validation |
| Completeness Validation | Low | Low | Detects missing or incomplete data fields | Required field enforcement | Improves data reliability, simple implementation |
| Statistical Validation | High | High | Identifies anomalies and data quality issues | Outlier detection, trend analysis | Detects subtle issues, scalable for large data |
| Data Profiling and Quality Assessment | High | High | Provides holistic data quality insights | Data governance, quality monitoring | Comprehensive, enables proactive management |

Automating Your Way to Superior Data Integrity

Mastering data integrity is not a destination but a continuous journey. As we’ve explored, a robust framework built on diverse data validation techniques is the cornerstone of any successful data-driven organisation. From the fundamental checks of range, format, and type validation to the more nuanced approaches of cross-field, constraint, and completeness validation, each technique plays a specific, vital role. Moving towards more sophisticated methods like statistical validation and comprehensive data profiling elevates your strategy from simple error prevention to proactive data governance.

The true power of these methods is realised when they are woven into the fabric of your daily operations. The ultimate goal is to move beyond reactive, manual clean-up efforts which are both costly and inefficient. Instead, the focus must shift towards creating a resilient, automated data ecosystem. This involves embedding these validation checks at every possible point of data entry and processing, from customer-facing web forms to internal CRM updates and complex data pipeline transformations.

Key Takeaways for Lasting Data Quality

To transition from theory to practice, it’s essential to internalise the core principles we’ve discussed. Your organisation’s approach to data validation should be layered, strategic, and, most importantly, automated.

Here are the critical takeaways to guide your implementation:

- No Single Technique is a Silver Bullet: A multi-layered strategy is essential. Combining simple format checks with complex cross-field validation and regular data profiling creates a comprehensive defence against poor data quality.

- Context is King: The validation rules you apply must align with your specific business logic. A valid entry in one context might be a critical error in another. Tailor your constraints and rules to fit the purpose of the data.

- Automation is Your Greatest Ally: Manual validation is not scalable. Implementing automated checks within your workflows is the only sustainable way to maintain high-quality data as your business grows. This frees up your teams to focus on high-value analysis rather than tedious data cleansing.

- Proactive, Not Reactive: The most effective data validation programs prevent bad data from entering your systems in the first place. By focusing on points of entry and real-time validation, you minimise the need for expensive and time-consuming downstream clean-up projects.

“Ultimately, the quality of your business decisions is a direct reflection of the quality of your data. Investing in a robust, automated validation framework is an investment in clarity, efficiency, and future growth.”

Embracing these data validation techniques is more than an IT initiative; it’s a strategic business imperative. It empowers your teams with reliable information, streamlines operations, enhances customer experiences, and provides the solid foundation needed for advanced analytics and AI. By committing to a culture of data excellence, you position your organisation to not just compete, but to lead in an increasingly data-centric world.

Ready to transform your data validation from a manual chore into an automated, strategic advantage? The expert team at Osher Digital specialises in designing and implementing tailored business process automation and AI solutions that embed these critical checks directly into your workflows. Visit Osher Digital to discover how we can help you build a resilient data ecosystem that fuels reliable insights and drives scalable growth.
