How to Train AI on Your Own Data: A Practical Guide

Learn how to train AI on your own data with this comprehensive guide covering data prep, model fine-tuning, and getting real results. Start now!

Training an AI on your own data isn’t a single magic button. It’s a focused, four-part process. It starts with gathering and cleaning up your specific data, moves on to picking a pre-trained model that fits the job, and then gets into fine-tuning… teaching that model with your prepared data. Finally, you test it to make sure it actually works the way you hoped. This whole journey is what turns a general-purpose AI into a specialist that genuinely gets your unique world.

The Real Power of Training AI on Your Own Data

It seems like everyone’s talking about AI these days, doesn’t it? It’s everywhere. But here’s the thing… the real game-changer isn’t just using those off-the-shelf tools that everyone has access to.

The true unlock is in teaching an AI to understand your unique world. Your business. Your data.

I get it. You’ve probably played around with a generic AI tool and had that little thought in the back of your mind… ‘what if this thing actually knew my customers? What if it understood the weird, specific language of my industry?’ That one thought is the start of something really big. It’s that moment you realise the generic stuff just isn’t cutting it anymore.

The goal isn’t to have an AI. It’s to have your AI… an assistant that speaks your language, knows your history, and gets what you’re trying to do without you needing to write a ten-page prompt.

Turning ‘What If’ into Reality

That exact feeling is what this guide sets out to address. I know it can feel like a massive, super technical challenge, the kind of thing that’s only for the Silicon Valley giants with rooms full of whirring servers.

But honestly, it’s more doable now than it has ever been. You really don’t need a PhD in machine learning to get your hands dirty.

This guide is your practical, no-nonsense walkthrough for turning that ‘what if’ into something that actually works. We’re going to break down the whole process without the confusing jargon. Just straightforward advice from someone who’s been down this road, tripped over all the rocks, and learned a few things so you don’t have to.

We’ll cover everything you need to know:

- Getting your data sorted so it’s actually useful for training.

- Picking the right model without getting lost in a sea of confusing options.

- The actual process of teaching the model your specific knowledge.

- Checking if it worked and what to do if it didn’t.

Think of it like this. You’ve already done the hard work of collecting years and years of business data… customer emails, support tickets, internal documents, sales reports. All that knowledge is just sitting there, silent.

You have the data. Let’s show you how to give it a voice. A powerful one.

Getting Your Data House in Order

Alright, let’s be honest. This is the part nobody really loves. But it’s where all the magic actually starts. If you mess this up, nothing else we do is going to matter.

Imagine your data is like the ingredients for a really fancy cake. If your ingredients are messy, inconsistent, or just plain wrong, you’re not going to end up with a masterpiece. You’ll just get a mess.

I’ve been there… spending weeks staring at a computer screen, wondering why on earth my model was giving me such bizarre answers, only to trace it all the way back to a disastrously messy dataset. It’s a soul-crushing experience. Truly. So, let’s make sure that doesn’t happen to you.

Cleaning Up the Mess

First up is data cleaning. This means it’s time to roll up your sleeves and do the grunt work. Finding duplicates, fixing typos, and dealing with all those frustrating empty fields. It’s not glamorous, but it is absolutely essential. A model that’s trained on messy data will only ever learn messy, useless patterns.

For instance, if you’re working with a bunch of customer feedback, you need to make sure everything is consistent. Before you even think about feeding text-based data into your AI model, understanding what text analysis is will help you clean it properly and pull out the right information. It also helps you standardise everything so the AI can actually make sense of it.

After the big cleanup, we need to talk about formatting. The AI needs its data in a format it can read, which usually means clean, structured files like CSV or JSON. It’s like translating a novel into a language your friend can actually understand.
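To make that concrete, here’s a minimal Python sketch that writes the same cleaned records out as JSON Lines (one JSON object per line, a layout many fine-tuning tools accept) and as CSV. It uses only the standard library. The `text`/`label` field names and the example tickets are made up for illustration; the exact fields your chosen platform expects will be spelled out in its own documentation.

```python
import csv
import io
import json

# Hypothetical cleaned records: a support ticket plus the category a person assigned it.
records = [
    {"text": "My invoice total looks wrong", "label": "billing"},
    {"text": "The app crashes when I log in", "label": "technical"},
]


def to_jsonl(rows):
    """Serialise rows as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(row, ensure_ascii=False) + "\n" for row in rows)


def to_csv(rows, fieldnames):
    """Serialise rows as CSV with a header row; the csv module handles quoting."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


jsonl_text = to_jsonl(records)
csv_text = to_csv(records, fieldnames=["text", "label"])
print(jsonl_text)
print(csv_text)
```

Either format works as long as every record has the same fields, spelled the same way.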

Your AI model will only ever be as good as the data you feed it. That old saying ‘garbage in, garbage out’ isn’t just a saying; it’s the fundamental law of machine learning.

Here’s a quick checklist to make sure your data is ready for AI training. Think of it as your pre-flight check before we launch this thing.

Essential Data Preparation Checklist

| Task | Why It Matters | Quick Tip |
|---|---|---|
| Remove Duplicates | Prevents the model from getting biased towards data it sees too often. | Use a simple script or even a spreadsheet function to find and get rid of duplicate rows. |
| Handle Missing Values | Empty fields can crash the whole training process or just mess up the results. | Decide on a strategy: you can either remove rows with missing data or fill them in (e.g., with an average or a placeholder). |
| Correct Errors & Typos | Inconsistencies like “Aus” vs. “Australia” will just confuse the model. | Standardise your categories. A simple find-and-replace function can be your best friend here. |
| Standardise Formats | AI needs its data in a consistent format (e.g., all dates as YYYY-MM-DD). | Convert all your relevant data points to a single, uniform format before you start. |
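If you’re comfortable with a bit of code, here’s what those four checks might look like in plain Python. It’s a minimal sketch, assuming your data fits in memory as a list of dictionaries. The column names, the country alias mapping and the DD/MM/YYYY input format are all hypothetical. In practice, a library like pandas (with `drop_duplicates` and `fillna`) does the same jobs at scale.

```python
from datetime import datetime

# Hypothetical raw customer-feedback rows showing each problem from the checklist.
raw_rows = [
    {"id": 1, "country": "Aus", "date": "03/02/2024", "rating": 4},
    {"id": 1, "country": "Aus", "date": "03/02/2024", "rating": 4},  # exact duplicate
    {"id": 2, "country": "Australia", "date": "2024-02-05", "rating": None},  # missing value
    {"id": 3, "country": "NZ", "date": "10/02/2024", "rating": 2},
]

# Assumed mapping from inconsistent spellings to one canonical value.
COUNTRY_ALIASES = {"Aus": "Australia", "NZ": "New Zealand"}


def standardise_date(value):
    """Convert DD/MM/YYYY or YYYY-MM-DD strings to YYYY-MM-DD."""
    for fmt in ("%d/%m/%Y", "%Y-%m-%d"):
        try:
            return datetime.strptime(value, fmt).strftime("%Y-%m-%d")
        except ValueError:
            continue
    raise ValueError(f"Unrecognised date format: {value!r}")


def clean(rows):
    # 1. Remove exact duplicates, keeping the first copy and the original order.
    seen, unique = set(), []
    for row in rows:
        key = tuple(sorted(row.items()))
        if key not in seen:
            seen.add(key)
            unique.append(dict(row))  # copy, so the raw data is left untouched
    # 2. Fill missing ratings with the average of the known ratings.
    known = [r["rating"] for r in unique if r["rating"] is not None]
    mean_rating = sum(known) / len(known)
    for r in unique:
        if r["rating"] is None:
            r["rating"] = mean_rating
        # 3. Correct inconsistent categories.
        r["country"] = COUNTRY_ALIASES.get(r["country"], r["country"])
        # 4. Standardise every date to YYYY-MM-DD.
        r["date"] = standardise_date(r["date"])
    return unique


cleaned = clean(raw_rows)
for row in cleaned:
    print(row)
```

Filling gaps with an average is just one of the two strategies from the table; dropping incomplete rows is often the safer choice when you have plenty of data.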

Getting these basics right is non-negotiable. It sets a solid foundation for everything else that’s coming.

Splitting Your Data for Success

Once your data is clean and beautifully formatted, you need to split it into two piles. This is a super critical step.

- The Training Set: This is the big pile. Think of it as the textbook you give the AI to study from. The model will look at this data over and over and over again to learn the patterns, the connections, and the specific little details of your world.

- The Testing Set: This is the smaller, protected pile. The AI never gets to see this data while it’s studying. This is the final exam. Once the model is trained, you use this set to see what it actually learned and whether it can apply that knowledge to new information it’s never seen before.

This split is your safety net. It stops the AI from just memorising the answers and forces it to genuinely learn. A solid approach to data quality management at this stage will save you so many headaches later on. Trust me, a little bit of upfront work here makes all the difference in the world.
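Here’s a minimal Python sketch of that split. The `train_test_split` function below is a hand-rolled, hypothetical helper (scikit-learn ships a function with the same name in `sklearn.model_selection` if you’d rather not write your own). The 80/20 ratio and the fixed seed are common starting choices, not rules.

```python
import random


def train_test_split(rows, test_fraction=0.2, seed=42):
    """Shuffle a copy of the rows and hold back a fraction as the test set."""
    if not 0 < test_fraction < 1:
        raise ValueError("test_fraction must be between 0 and 1")
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # a fixed seed makes the split reproducible
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]  # (training set, testing set)


examples = [f"example-{i}" for i in range(100)]
train_set, test_set = train_test_split(examples)
print(len(train_set), len(test_set))  # 80 20
```

One tip: remove duplicates before you split. Otherwise copies of the same example can land in both piles, and the final exam quietly leaks its answers to the student.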

Choosing the Right AI Model and Tools

Alright, this is where the real fun begins. Your data is clean, structured, and ready to go. Now, it’s time to pick the engine that’s going to power your AI.

Picking an AI model is a lot like choosing a car. Are you hauling massive amounts of data and need a heavy-duty truck, or do you need a quick, zippy little car for a customer service chatbot? The choice you make here will pretty much define the rest of the project.

Fine-Tuning vs. Training From Scratch

Let’s get the biggest question out of the way first. You basically have two paths you can go down: fine-tuning an existing, pre-trained model or trying to train a brand new one from scratch.

Building a model from scratch is a massive, monumental job. Think of it like designing and building a car engine from individual nuts and bolts. It needs a colossal amount of data, an eye-watering amount of computing power, and a whole team of specialists. Honestly, it’s almost never the right choice for most businesses.

Fine-tuning, on the other hand, is like taking a high-performance engine that’s already been built and just tweaking it for your specific race car. You’re starting with a model that big tech companies have already poured billions into, and you’re just teaching it that last, crucial piece of information: your unique business world.

For almost everyone, fine-tuning is the smartest, fastest, and most affordable way to go. It lets you stand on the shoulders of giants and get a powerful result in a fraction of the time.

Where to Find Your Model

So, where do you find these pre-trained models to get started? The great news is the AI community has made this surprisingly easy. You don’t need a secret password or a direct line to a Silicon Valley giant.

A brilliant place for anyone to start is Hugging Face. Think of it like a huge, open-source library for AI models. It’s an absolute goldmine, with thousands of models already trained for different jobs, just waiting for you to adapt them.

Beyond that, the big cloud providers are your best friends. Platforms like Google Cloud’s Vertex AI (the successor to Google AI Platform), Amazon SageMaker, and Microsoft Azure AI offer both the models and the tools you need to train them. They handle a lot of the heavy technical lifting, which lets you focus on the business problem you’re trying to solve. They still need a bit of technical know-how, but they’re built to make the whole process as straightforward as possible.

For a broader look at what’s out there, you might find our guide on the best AI tools for business helpful, as it covers a whole range of different solutions.

Matching the Tool to the Task

The golden rule here is to always stay focused on what you need the AI to do. Don’t get distracted by brand names or flashy tech.

- Trying to figure out the sentiment in customer emails? Look for a model that’s great at text classification.

- Hoping to predict next quarter’s sales figures? You’ll need a model specifically built for forecasting.

Having the right people to make these decisions is becoming so important. Interestingly, Australia’s AI skills penetration was just a little under the OECD average in 2023, which points to a national skills gap we need to sort out. Building up this local expertise is key for tailoring AI to our specific business environments and rules. You can dig deeper into Australia’s position in the full parliamentary report on AI’s potential impact.

Ultimately, picking the right model and tools is all about being practical. Start small, keep a laser focus on the problem you’re solving, and choose the path of least resistance. In almost every case, that path is fine-tuning.

Breaking Down the AI Training Process

This is the moment. Where the rubber meets the road. All that data you so carefully prepared is about to be introduced to your chosen AI model. It’s less like a mad science experiment and more like the first day of school for your AI.

So, what’s really going on when you click ‘start training’? In simple terms, the AI is studying your dataset. Again and again. With each pass, it makes tiny tweaks to its internal settings (numerical values called weights) so it gets better at recognising the patterns, the language, and the unique details in your information. Think of it like a student cramming for an exam… the more they go over the material, the better they’ll understand it.

You’ll bump into a few technical-sounding terms, but they’re not as complicated as they sound. For example, ‘epochs’ just means how many times the AI will review the entire dataset from start to finish. Another one is the ‘learning rate’, which controls how big each adjustment to the model’s weights is. Those adjustments usually happen many times per epoch, after each small batch of examples, rather than once at the end of a full review.

The learning rate is a bit of a balancing act. If the steps are too big, the model might jump right over the best solution. But if they’re too small, the training could take forever to get anywhere useful. Finding that sweet spot is key.
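To see epochs and the learning rate in action, here’s a deliberately tiny Python sketch. It isn’t how a real language model is trained. It fits a straight line (y = w * x + b) to five toy points using gradient descent, the same basic “nudge the weights in the direction that reduces the error” idea at a vastly smaller scale. For simplicity it treats the whole dataset as one batch, so there’s exactly one update per epoch. The function name and data are hypothetical.

```python
def train_line(data, learning_rate, epochs):
    """Fit y = w * x + b with plain gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # start from a blank guess
    n = len(data)
    for _ in range(epochs):  # one epoch = one full pass over the dataset
        # Gradients: which direction (and how steeply) the error grows for w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        # The learning rate scales how big each adjustment is.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    loss = sum((w * x + b - y) ** 2 for x, y in data) / n
    return w, b, loss


# Toy data generated from y = 2x + 1, so the "right answer" is w = 2, b = 1.
data = [(x, 2 * x + 1) for x in range(5)]

for lr in (0.001, 0.05, 0.2):
    w, b, loss = train_line(data, learning_rate=lr, epochs=100)
    print(f"learning_rate={lr}: w={w:.3f}, b={b:.3f}, loss={loss:.3g}")
```

With 100 epochs each, the smallest learning rate is still a long way from the right answer, the middle one lands very close to w = 2 and b = 1, and the largest one overshoots so badly that the loss explodes. That’s the balancing act in miniature.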

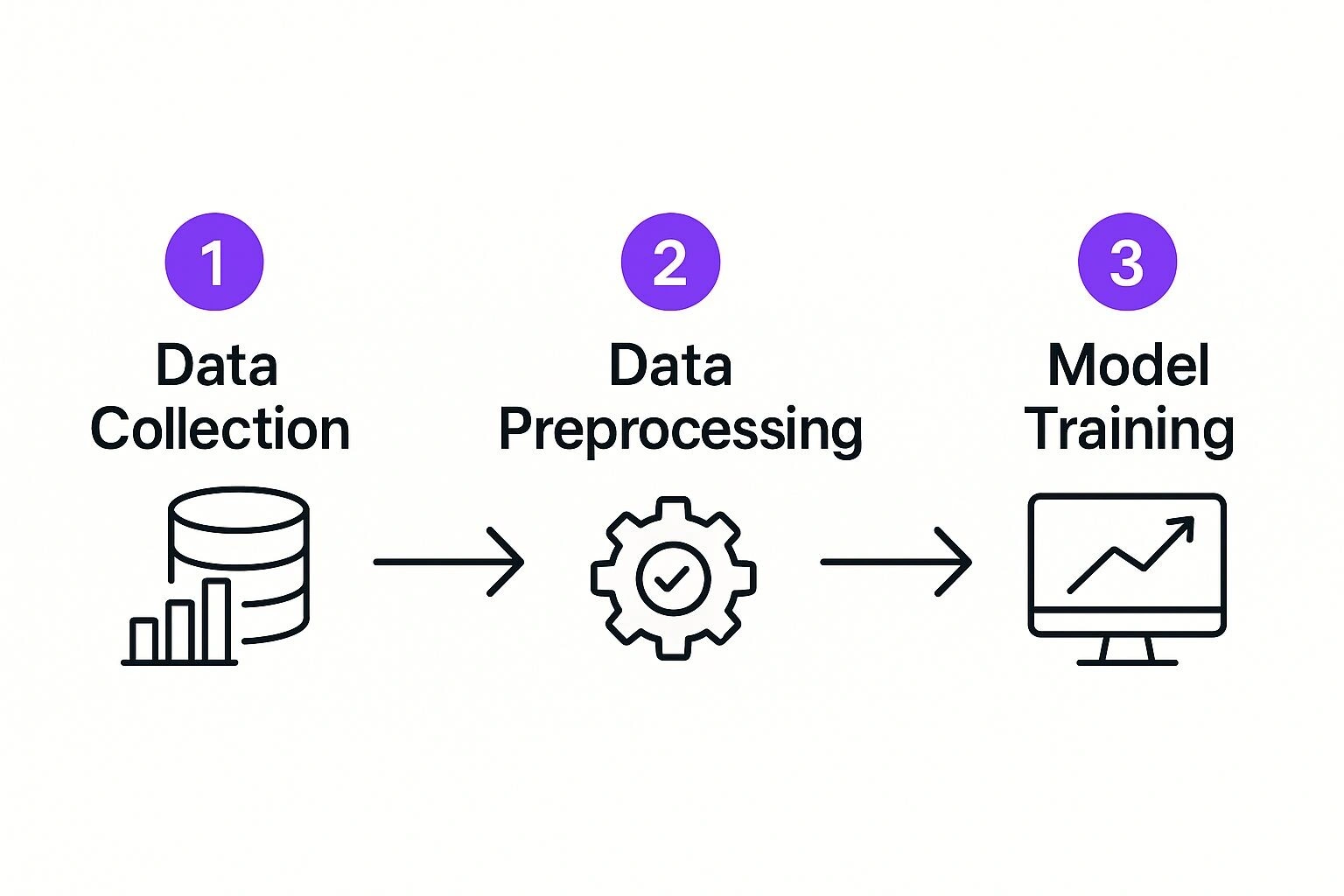

This graphic gives you a clear, high-level view of the whole workflow, from gathering your data right through to the training itself.

As you can see, the actual training is the grand finale of all the foundational work we’ve already talked about… getting your data collected, cleaned up, and organised.

Good Things Take Time and Tuning

Let’s set a realistic expectation right now: your first training run probably won’t give you a perfect model. In fact, it might be a bit of a letdown. And that’s perfectly okay. Honestly, it’s part of the journey. I’ve built models that, on their first try, felt like they were actively trying to misunderstand the data I gave them.

The secret is to treat this like a cycle, not a one-shot deal. It’s a feedback loop between you and the model. You train it, you test what comes out, you see where it’s falling short, and then you go back and adjust your strategy. Maybe it needs more epochs to learn properly, or maybe that learning rate needs a tiny tweak.

This cycle of adjusting settings like epochs and the learning rate is often called hyperparameter tuning, and it’s a normal part of any fine-tuning project. If you want to dive deeper into the nuts and bolts of fine-tuning itself, our beginner’s guide to fine-tuning large language models gives a more thorough explanation of the techniques.

Ultimately, this whole stage is about pulling back the curtain on what can feel like a mysterious process. It’s not magic. It’s just a methodical approach of teaching, testing, and refining. It takes a little patience, but every adjustment you make gets you one step closer to an AI that truly understands what you’re trying to do.
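The train, test, adjust loop can be sketched as a small grid search in Python. This is a toy under stated assumptions: the model learns a single number (y = w * x), the candidate learning rates and epoch counts are arbitrary, and each setting is scored on a separate validation set rather than on the protected testing set, so the final exam stays unseen until the very end. Holding back that extra validation slice is a common convention, not something every tool forces on you.

```python
from itertools import product

# Hypothetical toy task: learn w in y = w * x, where the true answer is w = 3.
train_data = [(x, 3 * x) for x in (1, 2, 3, 4)]
validation_data = [(x, 3 * x) for x in (5, 6)]  # held back from training


def fit(data, learning_rate, epochs):
    """Train the one-weight model with gradient descent and return w."""
    w = 0.0
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= learning_rate * grad
    return w


def validation_error(w, data):
    """Mean squared error on data the model did not train on."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


# Train once per combination of settings, then score each result.
results = []
for lr, epochs in product((0.001, 0.01, 0.05), (10, 50)):
    w = fit(train_data, lr, epochs)
    results.append((validation_error(w, validation_data), lr, epochs))

best_error, best_lr, best_epochs = min(results)  # lowest validation error wins
print(f"best: learning_rate={best_lr}, epochs={best_epochs}, error={best_error:.2e}")
```

Real projects tune more settings than this, and every trial costs real training time, so it pays to start with a short list of sensible candidates.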

How to Know If Your Custom AI Is Working


So, the training is finished. The progress bar is finally full. Now what?

You can’t just cross your fingers and assume it all went perfectly. You have to test it. Really test it. You’ve got to challenge it and see whether the model has genuinely learned the underlying patterns or has just gotten very good at parroting its training examples. This is the moment of truth. And it’s where that ‘testing data’ we so carefully put aside earlier finally gets its moment in the sun.

We’re going to feed the AI completely new information. Stuff it has never, ever seen before. Then, we sit back and check if what it produces actually makes sense in the real world.

Putting Your Model to the Test

This process goes way deeper than just looking for a simple ‘correct’ or ‘incorrect’ answer. It’s much more subtle than that. What you’re really looking for is common sense, relevance, and a genuine understanding that goes beyond just matching patterns.
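Here’s a small Python sketch of why one accuracy number isn’t enough. It uses a hypothetical test set for the ‘Urgent’ vs ‘Not Urgent’ email example: a lazy model that labels everything as not urgent still scores 90% accuracy while catching none of the urgent emails. Checking recall per category (the share of each true category the model correctly identifies) exposes that straight away. The `evaluate` helper is illustrative, not from any particular library.

```python
from collections import Counter


def evaluate(y_true, y_pred):
    """Return overall accuracy plus recall for each class."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be the same length")
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    totals = Counter(y_true)  # how many real examples each class has
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)  # correct ones per class
    recall = {label: hits[label] / totals[label] for label in totals}
    return accuracy, recall


# Hypothetical test set: 9 routine emails and 1 urgent one.
y_true = ["not urgent"] * 9 + ["urgent"]
# A lazy model that labels everything "not urgent".
y_pred = ["not urgent"] * 10

accuracy, recall = evaluate(y_true, y_pred)
print(accuracy)  # 0.9
print(recall)    # {'not urgent': 1.0, 'urgent': 0.0}
```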

I remember one of my first projects involved a model designed to summarise customer support tickets. The initial results looked fantastic. But when I gave it a completely new type of complaint, it spat out a summary that was technically accurate but emotionally clueless. It had completely missed the customer’s frustration.

That’s exactly the kind of thing we’re trying to catch here. Those little signs that show your AI doesn’t really get it.

The goal of testing isn’t just to chase a high accuracy score. It’s to build trust. You need to be confident that when you let this thing loose on real-world problems, it won’t just be right… it’ll be genuinely helpful.

This is especially important in Australia, where there’s a noticeable skills gap in this area. A recent report found that only 24% of Australians have done any AI-related training, which is well below the global average. This means a lot of us are learning on the job, which makes robust, common-sense testing even more important to make sure we’re building tools that are both effective and ethical. You can dive into the details in the full KPMG and University of Melbourne report on trust in AI.

Avoiding the ‘Cram Session’ Problem

One of the biggest gremlins you’ll bump into is something called overfitting.

Think of it like a student who crams for an exam by memorising every single word in the textbook. They can recite entire chapters perfectly. But if you ask them a question that requires them to think about the subject, they completely fall apart.

That’s overfitting. The AI has basically just memorised your training data instead of learning the concepts underneath. It performs brilliantly on data it’s already seen, but it fails miserably the moment it encounters something new. This testing stage is your quality control… it’s how you separate a genuinely useful AI from a clever but ultimately useless party trick.
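You can spot overfitting by comparing scores on the training data with scores on the testing data. This toy Python sketch uses made-up messages and two deliberately simple, hypothetical “models”: one that memorises the exact training messages, and one that learns which words only ever show up in urgent ones. Both ace the training set; only the testing set reveals the crammer.

```python
# Hypothetical toy data: (message, label) pairs.
train = [
    ("server is down", "urgent"),
    ("cannot log in at all", "urgent"),
    ("thanks for the update", "not urgent"),
    ("see you at the meeting", "not urgent"),
]
test = [
    ("website is down", "urgent"),
    ("cannot access my account", "urgent"),
    ("thanks for your help", "not urgent"),
]


def accuracy(model, data):
    """Share of (message, label) pairs the model labels correctly."""
    return sum(model(text) == label for text, label in data) / len(data)


# Model A memorises exact training messages and guesses "not urgent" otherwise.
memory = dict(train)


def memoriser(text):
    return memory.get(text, "not urgent")


# Model B learns which words appear in urgent training messages but never in routine ones.
urgent_words = {w for text, label in train if label == "urgent" for w in text.split()}
routine_words = {w for text, label in train if label == "not urgent" for w in text.split()}
urgent_only = urgent_words - routine_words


def keyword_model(text):
    return "urgent" if urgent_only & set(text.split()) else "not urgent"


for name, model in (("memoriser", memoriser), ("keyword model", keyword_model)):
    print(f"{name}: train={accuracy(model, train):.2f}, test={accuracy(model, test):.2f}")
# memoriser: train=1.00, test=0.33
# keyword model: train=1.00, test=1.00
```

A big gap between the training score and the testing score is the classic warning sign. With only three test examples, the keyword model’s perfect score proves very little on its own; real test sets need to be far larger.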

Common Questions About Training Your Own AI

Right, let’s just pause for a second and tackle some of the questions that are probably buzzing around in your head. These are the practical, real-world concerns that pop up whenever someone starts thinking seriously about this stuff. You’re not alone in wondering about these things; in fact, asking them is a sign you’re on the right track.

How Much Data Do I Actually Need?

This is always the first question, isn’t it? And the completely honest answer is: it really depends on what you’re trying to do. There isn’t a single magic number.

If your goal is pretty simple… let’s say, sorting customer emails into ‘Urgent’ and ‘Not Urgent’… you might be surprised at how well a model can perform with just a few hundred really good examples. But if you’re building something more complicated, like a chatbot that needs to understand complex insurance policy questions, you’re looking at a dataset in the thousands, or even tens of thousands, to cover all the different situations.

The golden rule here is that quality always, always beats quantity. A smaller, perfectly clean dataset is infinitely more valuable than a huge, messy one. Get your data clean and relevant first; you can worry about getting more of it later.

Is This Going to Be Expensive?

It certainly can be, but it absolutely doesn’t have to be. Training a massive model like GPT-4 from scratch? Yep, that’s a multi-million dollar project. But that’s not the game most businesses are playing.

Fine-tuning an existing, pre-trained model is a totally different story. The cost is dramatically lower because you’re basically just paying for the processing time on a server. Using cloud platforms, an initial experiment could cost you as little as a few dollars, while a more serious project might run you a few hundred. There are even free options on services like Google Colab that are perfect for getting your feet wet without spending a cent.
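If you want a back-of-the-envelope estimate before spending anything, the arithmetic is simple: total examples processed (dataset size × epochs) divided by how many examples the hardware gets through per second gives you training time, and time × hourly price gives you cost. Every number in this Python sketch is a hypothetical placeholder, not a quote from any provider; you’d measure real throughput on a short test run and check your platform’s current pricing.

```python
def estimate_training_cost(num_examples, epochs, examples_per_second, price_per_gpu_hour):
    """Rough estimate: total examples processed / throughput, priced at an hourly rate."""
    seconds = num_examples * epochs / examples_per_second
    hours = seconds / 3600
    return hours, hours * price_per_gpu_hour


# Every number below is a hypothetical placeholder, not a real price or benchmark.
hours, cost = estimate_training_cost(
    num_examples=3000, epochs=3, examples_per_second=2.5, price_per_gpu_hour=2.00
)
print(f"~{hours:.1f} GPU-hours, ~${cost:.2f}")  # ~1.0 GPU-hours, ~$2.00
```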

Frankly, the biggest “cost” in most projects isn’t the computing power… it’s the human hours you spend carefully preparing the dataset.

What Are the Biggest Mistakes to Avoid?

I’ve seen this happen over and over again. The single biggest mistake is rushing the data preparation. Without a doubt. People get so excited about the “AI” part that they just throw messy, inconsistent, or poorly labelled data at the model and hope for the best.

The result is always predictable: a disappointing model that gives you nonsensical or completely useless outputs. It’s that classic ‘garbage in, garbage out’ rule, and it’s the fastest way to get completely fed up with the whole process.

Another trap is not having a crystal-clear goal from day one. Before you write a single line of code or label a single piece of data, you have to be able to define exactly what success looks like. Without that target, you’re just flying blind, with no real way to know if your model is a valuable tool or just an expensive experiment.
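One practical way to pin success down is to write your targets as numbers before training starts, then check every trained model against them. This Python sketch is purely illustrative: the metric names and thresholds are hypothetical, borrowed from the urgent-email example, and the right targets for your project come from what the business actually needs.

```python
# Hypothetical success criteria, agreed before any data is labelled.
SUCCESS_CRITERIA = {
    "accuracy": 0.90,       # overall share of test emails labelled correctly
    "urgent_recall": 0.95,  # share of genuinely urgent emails the model catches
}


def meets_criteria(results, criteria=SUCCESS_CRITERIA):
    """Return (passed, failures), comparing measured results with minimum targets."""
    failures = {
        metric: (results.get(metric, 0.0), target)  # missing metrics count as failures
        for metric, target in criteria.items()
        if results.get(metric, 0.0) < target
    }
    return not failures, failures


passed, failures = meets_criteria({"accuracy": 0.93, "urgent_recall": 0.80})
print(passed)    # False
print(failures)  # {'urgent_recall': (0.8, 0.95)}
```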

Ready to stop wondering and start building? At Osher Digital, we specialise in creating custom AI solutions that understand your business. Let’s turn your unique data into your most powerful asset. Learn more at Osher Digital.
