8 Critical System Integration Challenges to Overcome in 2025

Discover the top system integration challenges organisations face and learn actionable strategies to overcome them for seamless connectivity and efficiency.

Seamless system integration is the backbone of operational efficiency, data-driven decision-making, and competitive advantage.

However, connecting disparate systems, from legacy mainframes to modern cloud applications, is fraught with complexity. Successfully navigating these system integration challenges is the key differentiator between organisations that thrive and those that stagnate. These hurdles range from technical incompatibilities and security vulnerabilities to spiralling costs and complex governance.

This article rounds up the eight most critical system integration challenges facing organisations in Australia and beyond. For each one, we dissect the problem, offer practical examples, and set out actionable strategies for turning the obstacle into an opportunity for growth and innovation. Even a single connection needs careful planning. For instance, linking a CRM with a project management tool, as in a well-designed HubSpot and Jira integration, is a useful blueprint for aligning sales and development workflows so that data flows smoothly between those business functions.

By understanding the specific nuances of issues like data format incompatibility, legacy system modernisation, and security across multiple environments, you can develop a robust integration framework. Whether you’re grappling with outdated data formats or striving for real-time data consistency, this guide will equip you with the knowledge to build a resilient, scalable, and efficient integrated architecture. We will move beyond generic advice to deliver specific, actionable insights that empower your teams to overcome these common yet formidable barriers.

1. Data Format and Protocol Incompatibility

One of the most persistent system integration challenges stems from the fundamental differences in how systems structure and transmit data. Applications, especially legacy platforms, often use proprietary or outdated data formats, while modern cloud services communicate via standardised APIs using formats like JSON or XML. This disparity creates a significant barrier to achieving a seamless, real-time flow of information across an organisation’s digital ecosystem.

At its core, this problem means that System A, which speaks in one “language” (e.g., SOAP), cannot directly communicate with System B, which speaks another (e.g., REST). This forces development teams to build complex, custom translators for every point-to-point connection, a process that is both time-consuming and difficult to scale. Without a strategic approach, an organisation can quickly find itself managing a tangled web of brittle, one-off integrations.

How to Overcome Data Incompatibility

The most effective strategy to resolve this challenge is to introduce a standardised, intermediary format known as a Canonical Data Model (CDM). Instead of creating direct translations between every system, each application is configured to translate its native data format into this single, common format.

Key Insight: A canonical data model acts as a universal translator. It decouples systems from one another, meaning a change in one system’s data format only requires updating its single connection to the canonical model, not every system it connects to.

For example, when integrating a legacy SAP ERP system (often using SOAP/IDoc) with a modern Salesforce CRM (using REST/JSON), an integration platform can intercept the data from each. It then transforms both into the predefined canonical model before routing the data to its destination. This approach is commonly facilitated by an Enterprise Service Bus (ESB), an Integration Platform as a Service (iPaaS) such as MuleSoft’s Anypoint Platform, or an open-source integration framework such as Apache Camel.
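To make the canonical model concrete, here is a minimal Python sketch that assumes two simplified source formats: a legacy ERP export with padded, upper-case fields and a CRM JSON payload. The `CanonicalCustomer` type, every field name, and the adapter functions are hypothetical illustrations, not actual SAP or Salesforce schemas; in practice these mappings usually live in the iPaaS or ESB mapping tools rather than hand-written code.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict

# Hypothetical canonical 'Customer' entity shared by every integration.
@dataclass(frozen=True)
class CanonicalCustomer:
    customer_id: str
    name: str
    country_code: str  # ISO 3166-1 alpha-2, e.g. "AU"

# Each inbound adapter translates ONE system's native format into the canonical model.
def from_legacy_erp(record: Dict[str, Any]) -> CanonicalCustomer:
    # Assumed legacy export: space-padded, upper-case values.
    return CanonicalCustomer(
        customer_id=record["CUST_NO"].strip(),
        name=record["CUST_NAME"].strip().title(),
        country_code=record["CTRY"].strip().upper(),
    )

def from_crm_json(payload: Dict[str, Any]) -> CanonicalCustomer:
    # Assumed JSON shape from a REST-based CRM.
    return CanonicalCustomer(
        customer_id=payload["accountNumber"],
        name=payload["name"],
        country_code=payload["country"].upper(),
    )

# One outbound translator per destination, always starting from the canonical model.
def to_crm_json(customer: CanonicalCustomer) -> Dict[str, Any]:
    return {"accountNumber": customer.customer_id,
            "name": customer.name,
            "country": customer.country_code}

ADAPTERS: Dict[str, Callable[[Dict[str, Any]], CanonicalCustomer]] = {
    "legacy_erp": from_legacy_erp,
    "crm": from_crm_json,
}

def route_to_crm(source: str, message: Dict[str, Any]) -> Dict[str, Any]:
    """Normalise any supported source into the canonical model, then emit CRM JSON."""
    canonical = ADAPTERS[source](message)
    return to_crm_json(canonical)
```

Adding a third system then means writing one adapter into the canonical model (and one out of it, if the system also receives data) rather than a translator for every existing pairing.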

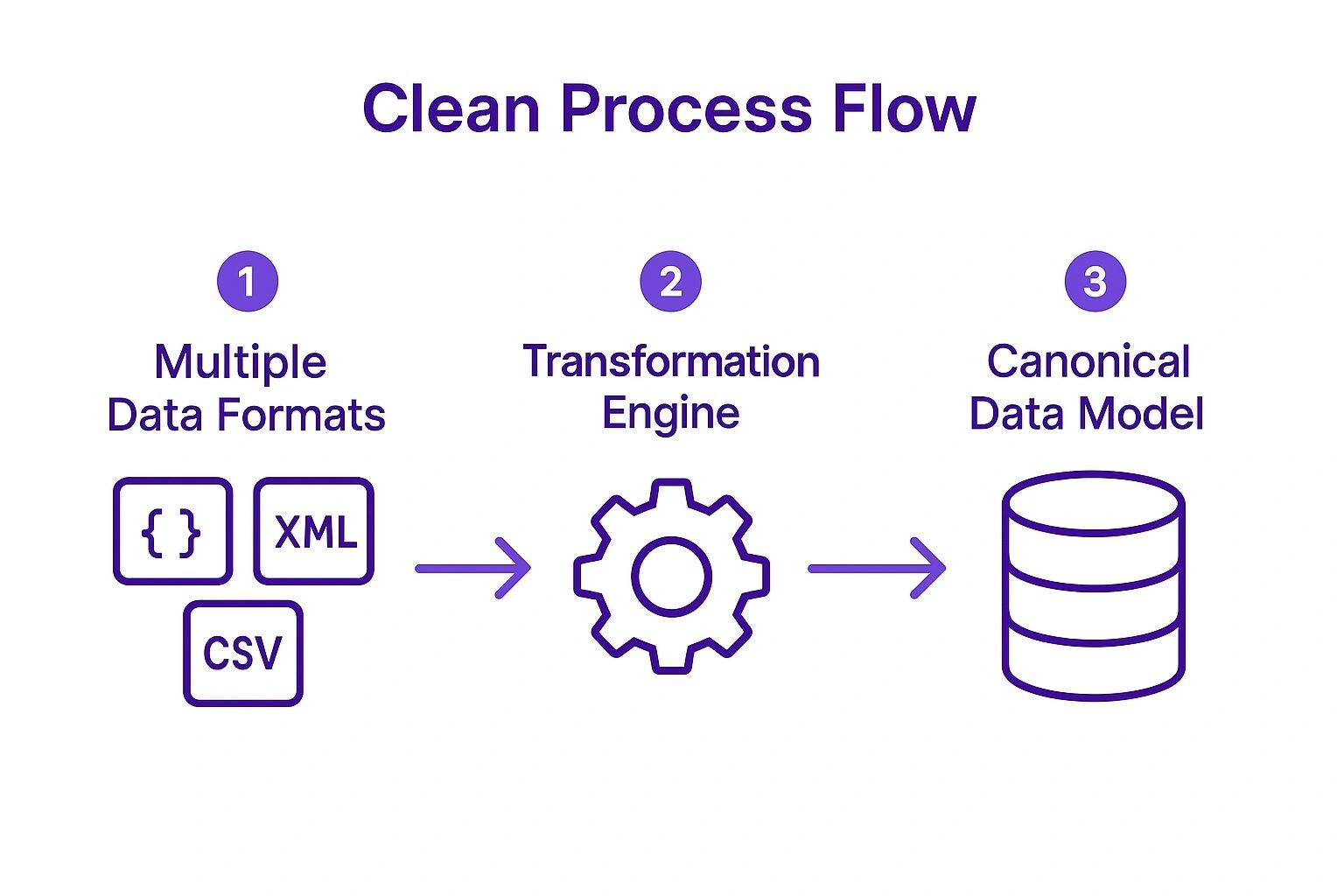

The following infographic illustrates this transformation process, showing how disparate formats are unified.

This diagram highlights how a central transformation engine is the key to normalising diverse data inputs into a consistent, reusable format.

Actionable Tips for Implementation

- Establish a Canonical Data Model: Define a universal, business-centric data structure early in your integration project. This model should represent core business entities like ‘Customer’, ‘Product’, or ‘Order’ in a system-agnostic way.

- Utilise an Integration Hub: Implement an iPaaS or ESB to act as the central hub for all data transformations. These platforms provide pre-built connectors and graphical data mapping tools that accelerate development.

- Prioritise Data Governance: Create clear policies for data ownership, quality, and mapping. Document every transformation rule and data field mapping comprehensively to simplify future maintenance and troubleshooting.

- Implement Robust Error Handling: Design a strategy for managing transformation failures. This includes logging errors, setting up alerts for failed mappings, and defining a process for manual intervention and data reprocessing.
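The error-handling tip can be sketched as a transformation step that never silently drops a message: failures are logged and parked for later reprocessing. In this minimal sketch, the in-memory dead-letter list and the `map_order` rule are hypothetical stand-ins for a real dead-letter queue and mapping definition.

```python
import logging
from typing import Any, Callable, Dict, List, Tuple

logger = logging.getLogger("integration.transform")

Message = Dict[str, Any]

def process_batch(
    messages: List[Message],
    transform: Callable[[Message], Message],
) -> Tuple[List[Message], List[Dict[str, Any]]]:
    """Transform each message; park failures in a dead-letter list instead of aborting."""
    delivered: List[Message] = []
    dead_letters: List[Dict[str, Any]] = []
    for msg in messages:
        try:
            delivered.append(transform(msg))
        except (KeyError, ValueError) as exc:
            # Log enough context for alerting and manual triage.
            logger.error("Mapping failed for %r: %s", msg, exc)
            dead_letters.append({"message": msg, "error": repr(exc)})
    return delivered, dead_letters

def reprocess(
    dead_letters: List[Dict[str, Any]],
    transform: Callable[[Message], Message],
) -> Tuple[List[Message], List[Dict[str, Any]]]:
    """Retry parked messages once the mapping rule or source data has been fixed."""
    return process_batch([d["message"] for d in dead_letters], transform)

# Hypothetical mapping rule: requires an 'id' and a numeric 'qty'.
def map_order(msg: Message) -> Message:
    return {"order_id": msg["id"], "quantity": int(msg["qty"])}
```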

2. Legacy System Modernisation and Compatibility

One of the most significant system integration challenges involves interfacing with legacy platforms. Many organisations rely on decades-old systems, often built on mainframes or with languages like COBOL, which were never designed for modern connectivity. These critical systems frequently lack APIs, suffer from poor or non-existent documentation, and use outdated technologies, creating a major hurdle when trying to connect them with cloud-native applications and microservices.

The core issue is bridging the immense architectural gap between a stable, monolithic past and a flexible, distributed future without disrupting mission-critical business operations. For example, a bank needing to connect its core banking system on an IBM z/OS mainframe to a new mobile app faces this exact problem. A direct, full-scale replacement is often too risky and expensive, forcing teams to find ways to modernise incrementally.

How to Overcome Legacy System Compatibility

A proven strategy for tackling this challenge is to build a modern “façade” around the legacy system, effectively insulating the rest of the IT ecosystem from its complexities. This involves introducing an API Gateway or an integration layer that exposes legacy functions as modern, RESTful APIs. This layer acts as a translator, converting modern API calls into the commands or protocols the legacy system understands.

Key Insight: Instead of a high-risk “big bang” replacement, use an abstraction layer to modernise a legacy system’s interfaces. This approach, popularised by thought leaders like Martin Fowler, allows you to gradually and safely migrate functionality over time.

This method allows new applications to interact with the legacy system as if it were a modern service. For instance, a manufacturer can use an integration platform like Software AG’s webMethods to create an API layer over a decades-old SCADA system, enabling its integration with a new IoT platform for real-time production monitoring. This avoids a costly and disruptive overhaul of the core manufacturing process.
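A minimal sketch of the façade idea follows, assuming a legacy system that only accepts a fixed-width text command and replies with a fixed-width record. The `BALINQ` command, the record layout, and the `FakeMainframe` test double are all hypothetical; in a real deployment this translation would typically sit in an API gateway or integration platform.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Protocol

class LegacyTransport(Protocol):
    """Whatever actually talks to the legacy system (message queue, terminal emulation, etc.)."""
    def send(self, command: str) -> str: ...

@dataclass
class AccountBalance:
    account_id: str
    balance: Decimal
    currency: str

class AccountFacade:
    """Modern, typed interface that hides the legacy command format from callers."""

    def __init__(self, transport: LegacyTransport) -> None:
        self._transport = transport

    def get_balance(self, account_id: str) -> AccountBalance:
        # Hypothetical request: 6-char command + 10-digit zero-padded account number.
        raw = self._transport.send(f"BALINQ{account_id.zfill(10)}")
        # Hypothetical reply: cols 0-9 account, 10-24 balance in cents, 25-27 currency.
        return AccountBalance(
            account_id=raw[0:10].lstrip("0"),
            balance=Decimal(int(raw[10:25])) / 100,
            currency=raw[25:28],
        )

class FakeMainframe:
    """Test double that mimics the assumed legacy reply format."""
    def send(self, command: str) -> str:
        if not command.startswith("BALINQ"):
            raise ValueError("unknown legacy command")
        account = command[6:16]
        return f"{account}{125050:015d}AUD"
```

New applications only ever see `get_balance`, so the legacy transport can later be swapped for a modern service without touching its callers.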


Actionable Tips for Implementation

- Adopt the Strangler Fig Pattern: Gradually replace parts of the legacy system with new microservices. Route calls through a façade that directs traffic to either the new service or the old system, slowly “strangling” the legacy monolith until it can be retired.

- Use API Gateways: Implement an API gateway to act as the modern front door to your legacy system. This centralises security, rate limiting, and request transformation, making the legacy asset manageable and secure.

- Prioritise Documentation: Before integration, invest time in reverse-engineering and documenting the legacy system’s behaviour, data structures, and hidden dependencies. This knowledge is invaluable for building a reliable integration layer.

- Plan Data Migration Early: If modernisation involves moving data, develop a comprehensive data migration strategy from the outset. Consider data cleansing, validation, and a phased migration approach to minimise business disruption.
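The Strangler Fig tip can be illustrated with a routing façade that sends each operation either to a new service or to the legacy system, based on a migration table. The operation names and both handler functions are hypothetical; a real deployment would usually perform this routing in an API gateway or reverse proxy.

```python
from typing import Any, Callable, Dict, Set

Request = Dict[str, Any]
Handler = Callable[[Request], Dict[str, Any]]

def legacy_handler(request: Request) -> Dict[str, Any]:
    return {"served_by": "legacy", "op": request["op"]}

def new_service_handler(request: Request) -> Dict[str, Any]:
    return {"served_by": "new", "op": request["op"]}

class StranglerRouter:
    """Routes migrated operations to the new service; everything else stays on legacy."""

    def __init__(self, legacy: Handler, modern: Handler) -> None:
        self._legacy = legacy
        self._modern = modern
        self._migrated: Set[str] = set()

    def migrate(self, op: str) -> None:
        # Move one capability at a time; callers keep the same entry point.
        self._migrated.add(op)

    def rollback(self, op: str) -> None:
        # Cheap escape hatch if the new implementation misbehaves.
        self._migrated.discard(op)

    def handle(self, request: Request) -> Dict[str, Any]:
        target = self._modern if request["op"] in self._migrated else self._legacy
        return target(request)
```

Once every operation has been migrated, the legacy handler receives no traffic and can be retired.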

3. Security and Authentication Across Systems

One of the most complex and high-stakes system integration challenges involves maintaining consistent security across a distributed network of applications. When systems are integrated, they create new pathways for data to flow and new access points for users, which can introduce significant security vulnerabilities if not managed properly. Each system often has its own identity protocol (e.g., SAML, or OpenID Connect on top of OAuth 2.0), authorisation model, and encryption standards, creating a fragmented security landscape that is difficult to govern.

This fragmentation means that a user authenticated in one system may not have seamless or secure access to another, or worse, security gaps can emerge between systems. For example, integrating a modern SaaS application with a legacy on-premises Active Directory requires careful federation of identities to avoid exposing credentials. Without a unified security strategy, organisations risk data breaches, non-compliance with regulations like GDPR or HIPAA, and a poor user experience that requires multiple logins.

How to Overcome Security and Authentication Challenges

The most robust solution is to centralise identity and access management through a dedicated platform and enforce security policies at the integration layer. Instead of managing security for each point-to-point connection, a central Identity and Access Management (IAM) solution, like Okta or Microsoft Entra ID (formerly Azure Active Directory), becomes the single source of truth for user authentication and authorisation. This is often combined with an API Gateway to enforce security policies for all data traffic between systems.

Key Insight: A centralised security model decouples authentication and authorisation from individual applications. This allows you to apply consistent policies across your entire ecosystem, simplify compliance, and manage user access from a single control plane.

For instance, when integrating multiple cloud services, an organisation can implement a Single Sign-On (SSO) solution. A user logs in once through the central IAM provider, which then issues secure tokens (like JWTs) that grant access to other connected applications without re-authenticating. This approach not only strengthens security by standardising protocols but also dramatically improves the user experience.
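To make the token step concrete, here is a minimal standard-library sketch of how a downstream service might check an HS256-signed JWT: verify the signature, pin the algorithm, and reject expired tokens. It is illustrative only. Production systems should use a maintained JWT library, and tokens from an IAM provider are usually signed asymmetrically (e.g. RS256) and verified against the provider's published keys. The shared secret and claims are hypothetical.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Any, Dict, Optional

def _b64url_encode(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")

def _b64url_decode(segment: str) -> bytes:
    # JWTs strip base64 padding; restore it before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))

def sign_hs256(claims: Dict[str, Any], secret: bytes) -> str:
    """Issue a compact JWT (the IAM provider's job; included so the sketch is testable)."""
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = ".".join(
        _b64url_encode(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, claims)
    )
    sig = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return f"{signing_input}.{_b64url_encode(sig)}"

def verify_hs256(token: str, secret: bytes, now: Optional[float] = None) -> Dict[str, Any]:
    """Return the claims only if the signature is valid and the token has not expired."""
    try:
        header_b64, payload_b64, sig_b64 = token.split(".")
    except ValueError:
        raise ValueError("malformed token") from None
    header = json.loads(_b64url_decode(header_b64))
    if header.get("alg") != "HS256":
        # Pin the expected algorithm; never let the token choose (e.g. "none").
        raise ValueError("unexpected algorithm")
    expected = hmac.new(secret, f"{header_b64}.{payload_b64}".encode("ascii"),
                        hashlib.sha256).digest()
    if not hmac.compare_digest(expected, _b64url_decode(sig_b64)):
        raise ValueError("bad signature")
    claims = json.loads(_b64url_decode(payload_b64))
    current = time.time() if now is None else now
    if "exp" not in claims or current >= claims["exp"]:
        raise ValueError("token missing exp or expired")
    return claims
```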

Actionable Tips for Implementation

- Implement a Zero-Trust Architecture: Adopt a “never trust, always verify” mindset. Assume no user or system is inherently trustworthy and require strict verification for every access request, regardless of its origin.

- Utilise an Identity and Access Management (IAM) Platform: Deploy a dedicated IAM or authentication-as-a-service solution (e.g., Auth0, Ping Identity) to manage user identities, enforce multi-factor authentication (MFA), and provide SSO capabilities.

- Establish an API Gateway: Use an API Gateway to act as a single entry point for all system-to-system communication. Configure it to enforce security policies such as rate limiting, IP whitelisting, and token validation for every API call.

- Implement Comprehensive Audit Logging: Ensure all authentication attempts, authorisation decisions, and data access events are logged across all integrated systems. Centralise these logs to enable effective monitoring, threat detection, and forensic analysis.
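Gateway policies such as rate limiting are normally configured rather than coded, but the idea behind many of them is a token bucket. The class below is a hypothetical single-process illustration with arbitrary `rate` and `capacity` values; a real gateway keeps this state per client and shares it across instances.

```python
import time
from typing import Callable

class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilling at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self._clock = clock
        self._tokens = capacity
        self._last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self._clock()
        # Refill in proportion to elapsed time, never beyond the bucket size.
        self._tokens = min(self.capacity, self._tokens + (now - self._last) * self.rate)
        self._last = now
        if self._tokens >= cost:
            self._tokens -= cost
            return True
        return False  # a gateway would typically answer HTTP 429 Too Many Requests
```

Injecting the clock keeps the policy deterministic under test.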

4. Real-time Data Synchronisation and Consistency

One of the most complex system integration challenges is achieving real-time data synchronisation and maintaining consistency across multiple, distributed systems. When an e-commerce platform needs to update inventory levels simultaneously on its website, mobile app, and in-store point-of-sale systems, any delay or discrepancy can lead to overselling, customer dissatisfaction, and operational chaos. This challenge is magnified by network latency, differing system processing speeds, and the risk of update conflicts.

The core issue is that enforcing traditional ACID transactions across several independent systems, typically through distributed protocols such as two-phase commit, is often too slow and rigid for modern, distributed architectures. Forcing every connected system to wait for a confirmation before proceeding creates bottlenecks, undermining the very goal of a responsive, real-time user experience. This forces organisations to adopt more sophisticated architectural patterns that balance speed with data integrity, a difficult trade-off that requires careful design and implementation.

How to Overcome Synchronisation Challenges

A powerful strategy to manage this is to implement an event-driven architecture using patterns like Event Sourcing and Command Query Responsibility Segregation (CQRS). Instead of directly modifying data in multiple systems, changes are recorded as a sequence of immutable events. These events are then published to a central event stream or message queue.
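A minimal event-sourcing sketch, assuming an inventory domain: stock levels are never updated in place but derived by replaying an append-only log of immutable events. The event types and fields are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple

@dataclass(frozen=True)
class StockEvent:
    sku: str
    kind: str       # hypothetical event types: "StockReceived" or "StockSold"
    quantity: int

SIGN = {"StockReceived": 1, "StockSold": -1}

class EventStore:
    """Append-only log: events are recorded, never updated or deleted."""

    def __init__(self) -> None:
        self._log: List[StockEvent] = []

    def append(self, event: StockEvent) -> None:
        if event.kind not in SIGN:
            raise ValueError(f"unknown event type: {event.kind}")
        self._log.append(event)

    def events(self) -> Tuple[StockEvent, ...]:
        return tuple(self._log)

def project_stock_levels(events: Iterable[StockEvent]) -> Dict[str, int]:
    """Derive current state by replaying the history (a CQRS-style read model)."""
    levels: Dict[str, int] = {}
    for e in events:
        levels[e.sku] = levels.get(e.sku, 0) + SIGN[e.kind] * e.quantity
    return levels
```

Because the log is the source of truth, replaying only a prefix of it reconstructs the state at any earlier point, which also simplifies auditing.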

Key Insight: An event-driven approach decouples data producers from data consumers. Systems subscribe to the events they care about and update their own state independently, allowing for asynchronous processing and eventual consistency without compromising system responsiveness.

For instance, in a supply chain management system, a “Shipment Dispatched” event is published to an event streaming platform like Apache Kafka. The inventory system, customer notification service, and billing platform can all subscribe to this event. Each service processes it at its own pace to update its local data store, ensuring all parts of the business eventually reflect the change without being tightly coupled or slowed down by one another. This model, popularised by tech giants like Netflix and Amazon, is fundamental to building scalable, resilient microservices.
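The fan-out described above can be illustrated with a tiny in-memory publish/subscribe bus. It resembles a broker such as Kafka only conceptually: there is no persistence, partitioning, or consumer-group logic, and delivery here is synchronous for brevity. The `EventBus` class, the event fields, and the three subscriber functions are hypothetical.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List

Event = Dict[str, Any]

class EventBus:
    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[Event], None]]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Callable[[Event], None]) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, event: Event) -> None:
        # The producer neither knows nor cares who consumes the event.
        for handler in self._subscribers[event_type]:
            handler(event)

# Each service owns its local state and updates it independently.
inventory: Dict[str, int] = {"SKU-1": 10}
notifications: List[str] = []
invoices: List[str] = []

def on_dispatch_inventory(e: Event) -> None:
    inventory[e["sku"]] -= e["qty"]

def on_dispatch_notify(e: Event) -> None:
    notifications.append(f"Order {e['order_id']} is on its way")

def on_dispatch_bill(e: Event) -> None:
    invoices.append(e["order_id"])

bus = EventBus()
for handler in (on_dispatch_inventory, on_dispatch_notify, on_dispatch_bill):
    bus.subscribe("ShipmentDispatched", handler)
```

Adding a fourth consumer, such as analytics, is one more `subscribe` call; the publisher does not change.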

Actionable Tips for Implementation

- Implement Event Sourcing and CQRS: Use Event Sourcing to capture all changes as a sequence of events. Pair this with CQRS to create optimised data models for reading (queries) and separate models for writing (commands), improving performance and scalability.

- Leverage Message Queues and Event Streams: Utilise platforms like RabbitMQ, Apache Kafka, or AWS EventBridge to manage the flow of events reliably between systems. These tools provide durable delivery and fault tolerance, but their ordering guarantees differ: Kafka preserves order only within a partition, and EventBridge does not guarantee ordering at all.

- Design for Eventual Consistency: Accept that in a distributed system, immediate consistency is often impractical. Design systems to function correctly with data that will eventually become consistent, and implement mechanisms to handle any temporary discrepancies.

- Use Distributed Caching: Implement a distributed cache like Redis or Hazelcast to provide fast, low-latency access to frequently read data, reducing the load on primary databases and improving response times for end-users.
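One practical consequence of designing for eventual consistency is that consumers must tolerate duplicate and late deliveries, because many brokers deliver messages at least once. A common approach, sketched here under the assumption that every event carries a unique `event_id` and a per-entity `version` number (both hypothetical fields), is an idempotent, version-aware consumer.

```python
from typing import Any, Dict, Set

class IdempotentPriceConsumer:
    """Applies each price update at most once and ignores stale versions."""

    def __init__(self) -> None:
        self.prices: Dict[str, int] = {}      # price in cents per SKU
        self._versions: Dict[str, int] = {}
        self._seen: Set[str] = set()          # in production: a persistent store with expiry

    def handle(self, event: Dict[str, Any]) -> bool:
        """Return True only if the event changed local state."""
        if event["event_id"] in self._seen:
            return False                      # duplicate redelivery: safe to ignore
        self._seen.add(event["event_id"])
        sku, version = event["sku"], event["version"]
        if version <= self._versions.get(sku, 0):
            return False                      # older update arrived late; keep the newer value
        self.prices[sku] = event["price_cents"]
        self._versions[sku] = version
        return True
```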

5. Performance and Scalability Bottlenecks

System integration points are natural chokepoints where data from multiple sources converges for transformation and routing. As data volume and transaction velocity increase, these integration layers can quickly become performance bottlenecks, leading to slow response times, service degradation, and even system-wide failures. This is one of the most critical system integration challenges because poor integration performance can create a domino effect, impacting the responsiveness of every connected application.

This challenge becomes particularly acute during periods of high demand, such as an e-commerce platform during a Black Friday sale or a financial services firm processing end-of-day batch transactions. Without an architecture designed for scale, the integration middleware itself can buckle under the load, failing to process requests in a timely manner. The issue is no longer just about connecting systems; it’s about ensuring the connection is fast, resilient, and capable of handling future growth without a complete re-architecture.

How to Overcome Performance and Scalability Bottlenecks

The key to overcoming this challenge is to design integrations with performance and elasticity in mind from day one, adopting principles popularised by hyperscale companies like Netflix and Amazon. This involves moving away from synchronous, blocking operations towards asynchronous, non-blocking patterns and leveraging cloud-native architectures that can scale horizontally.

Key Insight: Treat your integration layer as a high-performance application, not just plumbing. Applying modern software engineering practices like asynchronous processing and horizontal scaling is essential for building resilient and responsive integrations.

For instance, when an order is placed on an e-commerce site, instead of making the user wait while the integration synchronously updates inventory, billing, and shipping systems, the initial request can be completed immediately. The order data is then published to a message queue. Separate, independent services can then process these updates asynchronously, ensuring the user experience remains fast while the back-end systems catch up. This decoupled, event-driven approach allows each component to scale independently based on its specific load.
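A minimal asyncio sketch of this pattern: the order endpoint enqueues the order and returns at once, while independent workers drain the queue. Here `asyncio.Queue` stands in for a durable broker such as RabbitMQ or Kafka, and the simulated delay, worker count, and function names are arbitrary assumptions.

```python
import asyncio
from typing import Dict, List, Tuple

Order = Dict[str, str]

async def place_order(queue: "asyncio.Queue[Order]", order: Order) -> str:
    # Acknowledge immediately; inventory, billing, and shipping happen later.
    await queue.put(order)
    return f"Order {order['id']} accepted"

async def worker(name: str, queue: "asyncio.Queue[Order]", processed: List[str]) -> None:
    while True:
        order = await queue.get()
        try:
            await asyncio.sleep(0.01)  # stand-in for slow downstream system calls
            processed.append(f"{name}:{order['id']}")
        finally:
            queue.task_done()

async def run_demo(order_ids: List[str], workers: int = 3) -> Tuple[List[str], List[str]]:
    queue: "asyncio.Queue[Order]" = asyncio.Queue()
    processed: List[str] = []
    tasks = [asyncio.create_task(worker(f"w{i}", queue, processed)) for i in range(workers)]
    receipts = [await place_order(queue, {"id": oid}) for oid in order_ids]
    await queue.join()  # only the demo waits for the backlog; customers already have receipts
    for task in tasks:
        task.cancel()
    await asyncio.gather(*tasks, return_exceptions=True)
    return receipts, processed
```

Scaling the back end then means adding workers (or consumer instances) without changing the order endpoint.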

Actionable Tips for Implementation

- Implement Asynchronous Processing: Use message queues (e.g., RabbitMQ, Apache Kafka) or event streams to decouple systems. This allows the source system to offload a request and move on, while downstream systems process the data at their own pace.

- Design for Horizontal Scaling: Build your integration services as stateless components that can be easily replicated. This allows you to add more instances to handle increased load, a core principle of cloud-native design and auto-scaling.

- Utilise Intelligent Caching: Implement a caching layer (e.g., Redis, Memcached) to store frequently accessed data that doesn’t change often. This reduces direct calls to back-end systems, lowering latency and decreasing the load on databases.

- Establish Comprehensive Performance Monitoring: Use Application Performance Monitoring (APM) tools like Datadog or New Relic to track key metrics like latency, throughput, and error rates. Set up alerts to proactively identify and address bottlenecks before they impact users.
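The caching tip usually takes the form of the cache-aside pattern: check the cache, fall back to the back-end on a miss, then store the result with a time-to-live (TTL). This sketch uses a plain dictionary as a hypothetical stand-in for Redis or Memcached, and the 60-second TTL in the usage is an arbitrary choice.

```python
import time
from typing import Any, Callable, Dict, Tuple

class TTLCache:
    """Cache-aside helper: entries expire `ttl_seconds` after they are loaded."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[float, Any]] = {}  # key -> (expires_at, value)

    def get_or_load(self, key: str, loader: Callable[[str], Any]) -> Any:
        now = self._clock()
        hit = self._entries.get(key)
        if hit is not None and hit[0] > now:
            return hit[1]                   # fresh hit: no back-end call
        value = loader(key)                 # miss or expired: go to the system of record
        self._entries[key] = (now + self._ttl, value)
        return value
```

The TTL bounds how stale cached data can become, which is why this suits data that changes infrequently.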

6. Error Handling and System Resilience

A significant system integration challenge lies in designing for failure. When systems are tightly coupled, a single component outage can trigger a catastrophic cascade, bringing down the entire digital operation. Building robust error handling and ensuring system resilience involves managing partial failures, handling timeouts, and enabling services to degrade gracefully when dependencies are unavailable, a complexity that grows with each new integration point.

In a distributed environment, it’s not a question of if a service will fail, but when. An integration strategy that assumes 100% uptime for all its components is destined for frequent, large-scale disruptions. For instance, if an e-commerce platform’s checkout process relies on a third-party shipping calculator that goes down, the entire sales funnel can grind to a halt. Resilient architecture anticipates these issues and isolates them, preventing a localised problem from becoming a business-wide crisis.

How to Build Resilience and Handle Errors

The most effective approach is to adopt patterns that isolate failures and allow systems to continue operating in a diminished but functional capacity. Two widely used patterns, popularised by organisations like Netflix and Amazon, are the Circuit Breaker and Bulkhead Isolation. A circuit breaker stops an application from repeatedly calling a service that is known to be failing, while a bulkhead partitions resources so that one misbehaving dependency cannot starve the others.

Key Insight: Resilience isn’t about preventing every failure; it’s about containing the impact of a failure. By designing systems to degrade gracefully, organisations can protect core business functions and maintain a positive user experience even when backend services are experiencing issues.

For example, consider an e-commerce site that wraps calls to a non-essential recommendation engine in a circuit breaker. If the engine fails, the breaker “trips,” stopping further requests to that service. The primary product pages and checkout flows remain fully operational, isolating the fault and preserving the core customer journey. This proactive fault tolerance is a cornerstone of modern, scalable system integration.
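A minimal circuit-breaker sketch with a graceful-degradation fallback follows. The thresholds, the `CircuitOpenError` name, and the empty-list fallback are illustrative assumptions; libraries such as Resilience4j implement the same closed, open, and half-open state machine with far more configuration.

```python
import time
from typing import Any, Callable, List, Optional

class CircuitOpenError(RuntimeError):
    """Raised when calls are short-circuited because the dependency is known to be failing."""

class CircuitBreaker:
    """CLOSED -> OPEN after `failure_threshold` consecutive failures;
    OPEN -> HALF_OPEN after `reset_timeout` seconds; one trial call then decides."""

    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self._opened_at is None:
            return "CLOSED"
        if self._clock() - self._opened_at >= self.reset_timeout:
            return "HALF_OPEN"
        return "OPEN"

    def call(self, fn: Callable[[], Any]) -> Any:
        if self.state == "OPEN":
            raise CircuitOpenError("dependency unavailable; failing fast")
        try:
            result = fn()
        except Exception:
            self._failures += 1
            if self.state == "HALF_OPEN" or self._failures >= self.failure_threshold:
                self._opened_at = self._clock()  # trip (or re-trip) the breaker
            raise
        self._failures = 0
        self._opened_at = None                   # a success closes the circuit
        return result

def recommendations_with_fallback(breaker: CircuitBreaker, fetch: Callable[[], List[str]]) -> List[str]:
    """Degrade gracefully: hide recommendations rather than failing the page."""
    try:
        return breaker.call(fetch)
    except Exception:
        return []
```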

Actionable Tips for Implementation

- Implement the Circuit Breaker Pattern: Use a library such as Resilience4j (Netflix’s Hystrix, which popularised the pattern, is now in maintenance mode) or the native features of a service mesh to wrap calls to external services. This will automatically halt requests to a failing dependency after a certain threshold of errors, preventing system-wide resource exhaustion.

- Use Bulkhead Isolation: Partition system resources, such as connection pools or thread pools, for each integration. This ensures that a failure or performance issue with one external service cannot consume all available resources and impact other, healthier integrations.

- Design for Graceful Degradation: Identify critical versus non-critical features. If a non-critical component (like a real-time stock checker) fails, the system should default to showing cached data or hiding the feature, rather than returning an error that disrupts the entire user session.

- Establish Clear Error Budgets: Based on your Service Level Objectives (SLOs), define an “error budget”: the acceptable level of unavailability for a service over a given period. This data-driven approach, central to Google’s Site Reliability Engineering (SRE), helps teams make informed decisions about feature velocity versus reliability work.
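The error-budget arithmetic is simple: the budget is the complement of the SLO over a measurement window. For example, a 99.9% availability SLO over 30 days allows (1 - 0.999) × 30 × 24 × 60 = 43.2 minutes of downtime. The helper names below are hypothetical.

```python
def error_budget_minutes(slo: float, window_days: int = 30) -> float:
    """Allowed downtime in minutes: (1 - SLO) x window length."""
    if not 0 < slo < 1:
        raise ValueError("SLO must be a fraction between 0 and 1, e.g. 0.999")
    return (1 - slo) * window_days * 24 * 60

def budget_remaining(slo: float, downtime_minutes: float, window_days: int = 30) -> float:
    """Fraction of the budget still unspent; a negative value means the SLO was missed."""
    budget = error_budget_minutes(slo, window_days)
    return (budget - downtime_minutes) / budget
```

A team that has spent most of its budget early in the window might, by agreement, pause risky releases and prioritise reliability work until the budget recovers.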

7. Governance and Change Management

As an integration ecosystem expands, managing the interconnected web of services, APIs, and data flows presents a formidable governance challenge. Without disciplined change management, the entire structure becomes brittle and prone to failure. This issue involves coordinating updates across multiple teams, managing API versions to prevent breaking changes, and ensuring that modifications in one system do not have unintended, catastrophic consequences for others.

This problem is magnified in complex environments like microservices architectures, where a single business process might rely on dozens of independently deployed services. A seemingly minor, uncoordinated change to one service’s API can trigger a cascade of failures across the network. Poor governance transforms a flexible, integrated system into a high-risk liability, where every update carries the threat of widespread instability. It is one of the most critical, and most often underestimated, system integration challenges.

How to Overcome Governance and Change Management Issues

The solution lies in establishing a formal, centralised governance framework combined with robust change management protocols. This involves creating clear rules for how integrations are designed, deployed, and modified, with a strong emphasis on communication and coordination between teams. Standardising processes like API versioning and dependency tracking is key to maintaining control.

Key Insight: Effective governance is not about restricting change, but about enabling it safely. It provides the guardrails that allow development teams to innovate and deploy updates with confidence, knowing that a structured process is in place to prevent system-wide disruptions.

For instance, an e-commerce platform managing integrations with numerous third-party payment gateways and shipping providers must enforce strict API versioning. When a payment provider updates its API, a clear governance policy ensures that the platform can support both the old and new versions simultaneously for a transition period. This prevents service interruptions for merchants and customers while allowing for a gradual, controlled migration.
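Supporting two versions side by side can be as simple as keeping a handler per major version behind one entry point and adapting old requests to the new contract. The payment payloads, version labels, and `deprecation_notice` field below are hypothetical; an HTTP API would more commonly signal deprecation in response headers.

```python
from decimal import Decimal
from typing import Any, Callable, Dict

Request = Dict[str, Any]
Response = Dict[str, Any]

def charge_v2(req: Request) -> Response:
    # Hypothetical v2 contract: integer cents plus an explicit currency code.
    return {"status": "accepted", "amount_cents": req["amount_cents"], "currency": req["currency"]}

def charge_v1(req: Request) -> Response:
    # Hypothetical v1 contract: decimal string, AUD implied. Adapt it to v2 rather than
    # duplicating business logic, so both versions behave identically.
    cents = int(Decimal(req["amount"]) * 100)
    return charge_v2({"amount_cents": cents, "currency": "AUD"})

HANDLERS: Dict[str, Callable[[Request], Response]] = {"v1": charge_v1, "v2": charge_v2}
DEPRECATED = {"v1"}

def dispatch(version: str, req: Request) -> Response:
    handler = HANDLERS.get(version)
    if handler is None:
        raise ValueError(f"unsupported API version: {version}")
    response = handler(req)
    if version in DEPRECATED:
        # Tell clients they are in the transition window before v1 is retired.
        response = {**response, "deprecation_notice": "v1 is deprecated; migrate to v2"}
    return response
```

Retiring v1 at the end of the transition period is then a one-line change to `HANDLERS`.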

Actionable Tips for Implementation

- Implement Semantic Versioning (SemVer): Adopt a strict MAJOR.MINOR.PATCH versioning scheme for all your APIs. This immediately communicates the nature of a change, with major versions indicating breaking changes, minor versions adding functionality in a backward-compatible manner, and patches for backward-compatible bug fixes.

- Establish a Governance Council: Form a cross-functional team responsible for defining and enforcing integration policies, reviewing new integration proposals, and mediating disputes between development teams.

- Use Contract Testing: Implement contract testing to validate that an API provider has not introduced a breaking change that would impact its consumers. This automated approach ensures dependencies remain compatible without needing full end-to-end tests.

- Maintain Comprehensive Integration Documentation: Use standards like the OpenAPI Specification to document every API. This documentation should be treated as code: maintained, versioned, and automatically updated as part of your CI/CD pipeline.
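The SemVer and contract-testing tips can be combined into an automated release gate: compare the old and new version numbers with the kind of change a contract test detected, and block any release whose version bump understates that change. The `ChangeKind` categories and function names are hypothetical, and the parser handles only plain MAJOR.MINOR.PATCH strings, not pre-release or build metadata.

```python
from enum import Enum
from typing import Tuple

class ChangeKind(Enum):
    FIX = 1       # backward-compatible bug fix        -> PATCH
    FEATURE = 2   # backward-compatible addition       -> MINOR
    BREAKING = 3  # removed/renamed field, type change -> MAJOR

def parse(version: str) -> Tuple[int, int, int]:
    # Plain MAJOR.MINOR.PATCH only; raises ValueError for anything else.
    major, minor, patch = (int(part) for part in version.split("."))
    return major, minor, patch

def announced_change(old: str, new: str) -> ChangeKind:
    """What kind of change does the bump from `old` to `new` declare?"""
    o, n = parse(old), parse(new)
    if n <= o:
        raise ValueError("new version must be greater than old version")
    if n[0] > o[0]:
        return ChangeKind.BREAKING
    if n[1] > o[1]:
        return ChangeKind.FEATURE
    return ChangeKind.FIX

def release_allowed(old: str, new: str, detected: ChangeKind) -> bool:
    """Block releases whose version bump understates the detected change."""
    return announced_change(old, new).value >= detected.value
```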

8. Cost Management and ROI Optimisation

One of the most complex system integration challenges extends beyond technical execution into financial governance. Managing the total cost of ownership (TCO) and justifying the return on investment (ROI) for integration projects is a persistent hurdle. Costs are multifaceted, encompassing platform licensing, development resources, ongoing maintenance, and variable cloud service fees, making it difficult to forecast and control expenditure.

The core of this challenge lies in balancing the immediate costs of integration with its long-term strategic value. An organisation might invest heavily in connecting its supply chain management (SCM) and enterprise resource planning (ERP) systems, but without clear metrics, the business impact remains anecdotal. This ambiguity makes it difficult to secure ongoing funding and prove the project’s worth, especially when faced with competing business priorities.

How to Overcome Cost Management Challenges

A proactive and data-driven approach to financial oversight is essential. This involves establishing a framework for tracking all integration-related costs from day one and tying them directly to measurable business outcomes. The goal is to move from viewing integration as a pure cost centre to recognising it as a value-generating investment.

Key Insight: Optimising integration ROI is not just about reducing costs; it’s about maximising value. The most successful organisations define clear Key Performance Indicators (KPIs) like reduced manual effort, faster order processing, or improved customer satisfaction, and continuously measure the integration’s impact against them.

For example, a healthcare provider integrating a new Electronic Medical Record (EMR) system can justify the significant upfront cost by tracking metrics such as reduced administrative errors, faster patient data access for clinicians, and improved patient outcomes. Similarly, a retail company implementing an omnichannel integration can measure ROI through increased customer lifetime value and higher conversion rates. Effective system integration must also factor in financial viability; exploring cloud cost optimisation strategies can help control expenses associated with cloud-based platforms.

Actionable Tips for Implementation

- Establish a Clear ROI Framework: Before starting a project, define specific, measurable, achievable, relevant, and time-bound (SMART) goals. Quantify the expected business value, whether in cost savings, revenue growth, or efficiency gains.

- Implement Comprehensive Cost Monitoring: Use cost management tools provided by cloud vendors like AWS or Azure to track spending in real time. Set up alerts to notify stakeholders when budgets are at risk of being exceeded.

- Conduct ‘Build vs. Buy’ Analysis: Regularly evaluate whether it is more cost-effective to build custom integrations or purchase a subscription to an Integration Platform as a Service (iPaaS). Consider not just the initial cost but the long-term maintenance and skill requirements.

- Adopt a Chargeback Model: For shared integration platforms, implement a usage-based chargeback or showback model. This assigns costs to the business units that benefit from the services, promoting accountability and discouraging inefficient resource consumption.
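The ROI and chargeback tips both reduce to simple arithmetic. The sketch below computes a basic ROI as (benefit - cost) / cost and splits a shared platform bill across business units in proportion to usage. All figures, unit names, and the choice of message volume as the usage metric are hypothetical.

```python
from decimal import Decimal, ROUND_HALF_UP
from typing import Dict

def simple_roi(total_benefit: Decimal, total_cost: Decimal) -> Decimal:
    """ROI as a fraction: (benefit - cost) / cost. 0.5 means a 50% return."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost

def chargeback(monthly_bill: Decimal, usage: Dict[str, int]) -> Dict[str, Decimal]:
    """Allocate a shared bill by each unit's share of usage (e.g. messages processed)."""
    total = sum(usage.values())
    if total == 0:
        raise ValueError("no usage recorded")
    cents = Decimal("0.01")
    shares = {unit: (monthly_bill * n / total).quantize(cents, rounding=ROUND_HALF_UP)
              for unit, n in usage.items()}
    # Assign any rounding remainder to the largest consumer so shares sum to the bill.
    largest = max(usage, key=usage.get)
    shares[largest] += monthly_bill - sum(shares.values())
    return shares
```

Using `Decimal` rather than floats keeps the allocated amounts exact to the cent.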

System Integration Challenges Comparison

| Aspect | Data Format and Protocol Incompatibility | Legacy System Modernisation and Compatibility | Security and Authentication Across Systems | Real-time Data Synchronisation and Consistency | Performance and Scalability Bottlenecks | Error Handling and System Resilience | Governance and Change Management | Cost Management and ROI Optimisation |
|---|---|---|---|---|---|---|---|---|
| Implementation Complexity | High – Requires extensive transformation logic | High – Bridging old and new tech without disruption | High – Managing diverse security protocols | Very High – Distributed consistency and latency | High – Complex scaling and tuning needs | High – Designing for fault tolerance and retries | Medium to High – Coordinating versions and releases | Medium – Tracking costs alongside integration value |
| Resource Requirements | ETL tooling and integration platforms | Skilled legacy developers and modernisation tools | IAM platforms, security tools, ongoing audits | Event streaming platforms, messaging infrastructure | Scalable infrastructure, monitoring, and tuning experts | Infrastructure for retries, monitoring, and resilience | Governance frameworks, testing tools, documentation | Cost monitoring, optimisation tools, and analytics |
| Expected Outcomes | Standardised data exchange and long-term maintainability | Preservation of legacy logic with modern connectivity | Enhanced security and compliance | Near-real-time data accuracy for better decisions | Improved responsiveness and ability to handle load | Increased system uptime and graceful failure handling | Reduced integration failures and improved stability | Controlled costs and optimised ROI |
| Ideal Use Cases | Systems with multiple incompatible formats and protocols | Integrating critical legacy systems with modern apps | Environments requiring strong authentication & compliance | Businesses needing near-real-time data across channels | High-traffic systems requiring scalable integration | Systems where fault tolerance is critical | Large, complex integration ecosystems with multiple teams | Organisations focused on cost control and ROI measurement |
| Key Advantages | Simplifies future integration; supports standards | Gradual modernisation reduces risk; leverages legacy | Centralised identity management; regulatory compliance | Real-time insights; improved customer experience | Better user experience; supports business growth | Limits cascading failures; improves reliability | Better team coordination; stable integration landscape | Improves budget control; better resource allocation |

From Challenge to Catalyst: Building a Future-Ready Integration Strategy

Navigating system integration challenges can feel like crossing complex and often unpredictable terrain. From the technical intricacies of data format translation and legacy system modernisation to the strategic imperatives of security, governance, and cost management, the obstacles are numerous and significant. However, as we have explored, none of them is insurmountable. Addressed proactively, each becomes a strategic inflection point that can unlock real organisational value and competitive advantage.

The journey from disjointed systems to a cohesive digital ecosystem is fundamentally a shift in perspective. It involves moving away from reactive, project-by-project integrations and toward a holistic, future-focused strategy. The most successful organisations are those that realise integration is not merely a technical task but a core business capability that underpins agility, innovation, and growth.

Synthesising the Solutions: Key Takeaways

To effectively transform system integration challenges into catalysts for progress, it is crucial to internalise several core principles we’ve discussed.

- Embrace Strategic Governance: A robust governance framework is the bedrock of successful integration. This means establishing clear ownership, standardised processes for change management, and comprehensive documentation to prevent knowledge silos and ensure consistency across all projects.

- Prioritise Security by Design: Security cannot be an afterthought. Integrating security protocols from the initial design phase, implementing centralised identity and access management, and ensuring end-to-end data encryption are non-negotiable practices for protecting sensitive information across interconnected systems.

- Architect for Scalability and Resilience: Your integration architecture must be built to handle future growth and withstand inevitable disruptions. Employing modern approaches such as microservices and well-defined APIs, alongside rigorous performance testing and robust error handling such as automated retries, creates a resilient foundation that can adapt to changing business demands.

- Focus on Data Consistency: The true power of integration is realised when data flows seamlessly and reliably between systems. Prioritising a single source of truth, implementing real-time or near-real-time synchronisation where necessary, and establishing clear data quality rules are essential for empowering data-driven decisions.
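To make "clear data quality rules" more concrete, here is a minimal Python sketch, not a production implementation. It assumes records arrive as dictionaries; the rule names, the field names (`customer_id`, `email`, `country`) and the quarantine approach are hypothetical illustrations, not the API of any particular integration platform. Records that fail any rule are held back with the reasons attached, so they can be corrected at the source rather than spreading inconsistent data to downstream systems.

```python
from dataclasses import dataclass
from typing import Any, Callable


# Hypothetical rule type: a readable name plus a predicate over one record.
@dataclass(frozen=True)
class Rule:
    name: str
    check: Callable[[dict[str, Any]], bool]


def _country(record: dict[str, Any]) -> str:
    return str(record.get("country", ""))


# Hypothetical rules for a CRM-to-ERP customer sync; field names are illustrative.
RULES = [
    Rule("customer_id present", lambda r: bool(r.get("customer_id"))),
    Rule("email contains @", lambda r: "@" in str(r.get("email", ""))),
    # A format check only (two uppercase letters), not a lookup against a country list.
    Rule("country is two uppercase letters",
         lambda r: len(_country(r)) == 2 and _country(r).isupper()),
]


def validate(record: dict[str, Any], rules: list[Rule] = RULES) -> list[str]:
    """Return the names of the rules this record fails; an empty list means it passes."""
    return [rule.name for rule in rules if not rule.check(record)]


def partition(records: list[dict[str, Any]], rules: list[Rule] = RULES):
    """Split records into those safe to sync and those quarantined with their failure reasons."""
    valid: list[dict[str, Any]] = []
    quarantined: list[tuple[dict[str, Any], list[str]]] = []
    for record in records:
        failures = validate(record, rules)
        if failures:
            quarantined.append((record, failures))
        else:
            valid.append(record)
    return valid, quarantined


if __name__ == "__main__":
    batch = [
        {"customer_id": "C-001", "email": "ana@example.com", "country": "AU"},
        {"customer_id": "", "email": "no-at-sign", "country": "Australia"},
    ]
    ok, bad = partition(batch)
    print(len(ok), "record(s) ready to sync")
    for record, reasons in bad:
        print("quarantined:", reasons)
```

Keeping the rules as data rather than scattering checks through the integration code makes them easy for business owners to review and version, which supports the governance principle above.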

Your Actionable Path Forward

Moving from theory to practice requires a deliberate and measured approach. Instead of attempting to solve every integration issue at once, focus on creating a strategic roadmap. Begin by conducting a thorough audit of your existing systems and workflows to identify the most critical pain points and the highest-impact opportunities. Which legacy system is causing the most significant bottleneck? Where are data inconsistencies creating the most friction for your teams?

From there, prioritise a pilot project that addresses a well-defined problem and offers a clear, measurable return on investment. An early success delivers immediate value, builds organisational momentum, and helps secure stakeholder buy-in for more ambitious integration initiatives. By treating each challenge as part of a larger strategic plan rather than as an isolated fire to extinguish, you can systematically build a powerful, interconnected, and future-ready digital infrastructure, turning system integration from a source of organisational friction into an engine for efficiency and innovation.

Ready to turn your integration challenges into strategic assets? The experts at Osher Digital specialise in designing and implementing bespoke automation and integration solutions that align with your unique business goals. Visit Osher Digital to learn how we can help you build a robust, scalable, and secure digital foundation for future growth.
