What Is Tokenization in AI?

Ever wonder what is tokenization in AI? We explain how AI learns language in simple terms, using real-world examples to show you how it works.

So, you’ve probably heard the term ‘tokenization’ thrown around with all the buzz about AI and wondered what it actually means. You’re not alone. It sounds super technical, right? But I promise you, it’s not.

In simple terms, tokenization is just the process of breaking down a big piece of text into smaller, bite-sized units called tokens. It’s the very first, and maybe the most important, step an AI takes to even begin to understand what we’re saying.

Breaking Down Language The AI Way

Ttokenization is actually a concept you already get. It’s not nearly as complicated as it sounds.

Think about how you’d teach a kid to read. You wouldn’t just hand them a massive novel and say, “good luck with that.” You’d start with the building blocks. Letters. Then simple words. And eventually, you’d build up to full sentences. You build understanding piece by piece.

Tokenization is basically the same idea, but for an artificial intelligence. It’s the first thing that has to happen. It’s where a machine takes a big chunk of our language, like this very paragraph you’re reading, and chops it down into smaller, digestible pieces. We call these little pieces ‘tokens’.

So What’s a Token Exactly?

Now, a ‘token’ isn’t always a neat, whole word. It can be a few different things, and it all depends on how the AI model was designed:

- A whole word (like “house” or “car”)

- Part of a word, like a subword (imagine splitting “running” into “run” and “ning”)

- Even just a single letter (like ‘a’, ‘b’, or ‘c’)

Imagine you have a big, complicated idea. Tokenization is like turning that idea into a neat pile of Lego bricks. It’s only once the AI has these individual bricks that it can start to figure out the structure… the context… and the real meaning behind what we’ve written. If you want to get a bit more technical, it helps to understand exactly what a token is in the AI world.

This whole process is absolutely fundamental for pretty much everything an AI does with language, from answering your customer service questions to translating Spanish on the fly. Without it, our rich, messy, and often weird human language is just a meaningless jumble of letters to a machine. Honestly, if you’re new to this whole space, it can be a lifesaver to get your head around the common terms. We’ve got a great definitive AI glossary of terms that might help.

And this isn’t just some abstract tech concept. It’s happening right here. AI adoption in Australia is growing like crazy, and clever techniques like tokenization are at the heart of it all. Fun fact… Australia’s AI research output has more than doubled recently, jumping from 5.3% to 11.6% of total scholarly publications. That shows a real national focus on getting these foundational AI bits and pieces right.

Why Can’t AI Just Read Like a Human?

It’s a totally fair question. Computers are getting smarter every single day, so why do we need this whole process of chopping up sentences? Can’t an AI just… read?

The short answer is no. Not in the way you and I do.

Our brains are amazing things, aren’t they? When you read this sentence, you’re not just seeing letters. You’re seeing patterns, picking up on my tone, and you probably skimmed right over a typo without even noticing. You just know that ‘run’, ‘running’, and ‘ran’ are all different versions of the same basic idea. For us, it’s just second nature.

An AI, on the other hand, starts with nothing. A blank slate. To a machine, those three words are just unique, completely unrelated strings of characters. It has absolutely no built in understanding that they all point back to the same action. That connection has to be learned from scratch.

Translating Our World for a Machine

This is exactly where tokenization comes in as the crucial translator. It’s the bridge. By breaking down our wonderfully messy human language into predictable, consistent units, these ‘tokens’, we create a structured vocabulary that an AI can actually work with. It’s how we turn our world of meaning into its world of numbers and logic.

Think of it like building a dictionary for a computer. But before you can even start defining anything, you first need to decide what a ‘word’ even is. That’s the job tokenization does. It’s the process that turns a flowing sentence into a neat list of items the machine can count, analyse, and eventually attach meaning to.

This isn’t just a helpful step; it’s the only first step. Without it, an AI just sees our language as an impossible wall of text. Tokenization is the key that opens the door.

The whole field dedicated to teaching computers to understand us is called natural language processing (NLP). It’s a pretty fascinating area that’s all about how machines can interpret and respond to our language. To really get the big picture, it’s worth taking a look at what natural language processing is and seeing how it powers so many of the tools we use every single day.

At the end of the day, an AI can’t read like a person because it doesn’t experience the world like one. It doesn’t have our lifetime of context, cultural inside jokes, or the deep intuition we’ve been building since we learned our first words. Tokenization is our way of giving the machine a logical, structured starting point for its learning journey.

The Different Ways We Can Break Down Language

Okay, so we’ve established we need to chop up human language into predictable little pieces for an AI. But that brings up a pretty important question… what exactly is a piece? Do we split by the word? By the syllable? Or do we go right down to single letters?

This is where the real art of tokenization comes in. There’s no single, perfect answer. Over the years, people have developed a few different methods, each with its own way of slicing up text and its own pros and cons.

Let’s check out the main approaches you’ll bump into.

The Old-School Classic: Word Tokenization

The most obvious way to do it is word tokenization. Just like it sounds, you take a sentence and split it up based on spaces and punctuation. Simple.

The sentence “AI is learning fast” becomes four separate tokens: ["AI", "is", "learning", "fast"]. For a long time, this was the standard way of doing things, and for languages like English, it works okay… some of the time.

But it’s got some big problems. What happens when the AI sees a word it’s never seen before, like a new brand name or just a typo? It creates what’s called an “out of vocabulary” problem. The AI just freezes. It also struggles with long, complex words. It sees “antidisestablishmentarianism” as one huge, mysterious block, and completely misses the meaningful little parts inside it like ‘anti-‘, ‘dis-‘, ‘establish’, and ‘-ism’.

A More Flexible Approach: Subword Tokenization

This is where things got really clever. Subword tokenization is a much smarter way to look at language. Instead of treating every word like it’s a solid, unbreakable thing, this approach breaks words down into smaller parts that show up all the time.

Think of it like this. Word tokenization gives you a box of pre built Lego models. Subword tokenization gives you a massive collection of individual bricks. Things like prefixes (‘un-‘, ‘re-‘), suffixes (‘-ing’, ‘-ly’), and common root words. This means the AI can actually build a word it has never officially seen before, just by piecing together the subword tokens it already knows. Pretty neat, huh?

This solves the out of vocabulary problem and gives the model a much deeper feel for how words are actually put together. Two of the most popular ways to do this are:

- Byte-Pair Encoding (BPE): This starts by treating every single character as a token. Then, it looks through all the text and finds the pair of tokens that appears most often next to each other, and merges them into a new, single token. It just keeps doing this over and over until it has a vocabulary of the size it wants.

- WordPiece: Google’s BERT model made this one famous. It’s a lot like BPE, but when it decides what to merge, it doesn’t just look at what’s most common. It looks for the merge that makes the training data most “likely,” which is a slightly more mathematical way of finding the most meaningful combinations. The result is the same: a super efficient vocabulary of word parts.

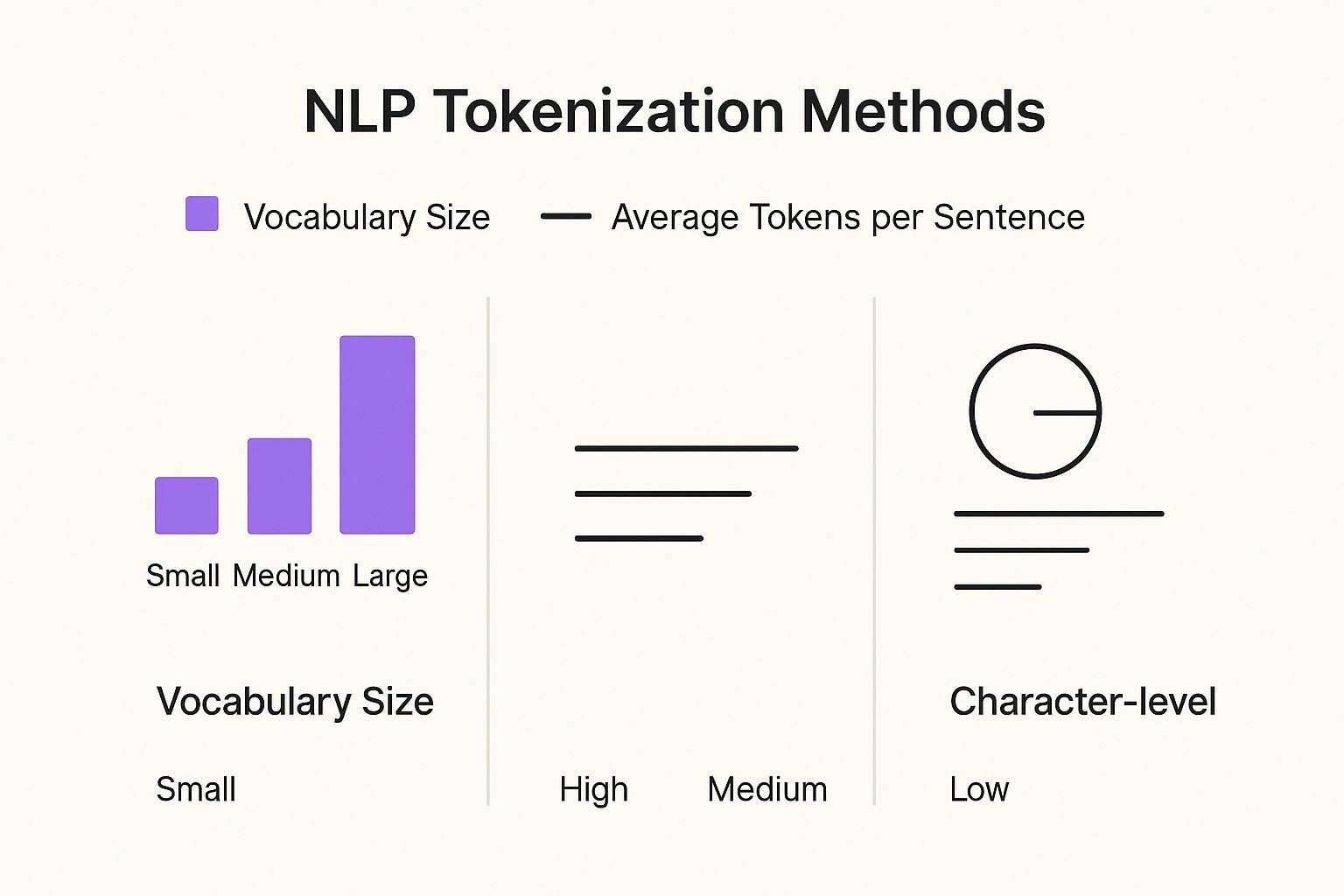

The image below gives a great visual of how these different levels of chopping things up can affect the model’s vocabulary.

As you can see, breaking text down into these smaller subword or character pieces lets a model represent a basically infinite number of words with a finite, manageable vocabulary. This makes the AI tougher, more efficient, and way better at handling the messy reality of human language.

These advanced tokenization methods are the cornerstone of modern Natural Language Processing (NLP), which is really just the fancy name for making AI understand us. It’s a huge part of how AI is Simplifying Life with Natural Language Processing.

Getting this step right is so important for training powerful AI models. If you’re curious how these basic ideas lead to real results for businesses, this beginner’s guide to fine-tuning large language models for business is a great next read.

Comparing Popular Tokenization Methods

To make it a bit clearer, here’s a simple table breaking down the three main ways of doing it. They all have their place, but you’ll see why the subword methods are the go to for most modern AI.

| Method Type | How It Works (In a Nutshell) | Best For | A Potential Downside |

|---|---|---|---|

| Word | Splits text by spaces and punctuation. One word = one token. | Simple text where you know all the words that will ever be used. | Can’t handle words it hasn’t seen before and creates a massive vocabulary list. |

| Subword | Breaks words into smaller, common parts (e.g., ‘tokenization’ → ‘token’, ‘ization’). | The modern standard for most big AI models. It’s the perfect balance of size and meaning. | Can sometimes split words in ways that don’t make perfect grammatical sense. |

| Character | Treats every single letter, number, and symbol as a token. | Languages without spaces (like Chinese) or when you need to analyse character patterns. | Creates super long strings of tokens, which takes a lot of computer power to process. |

At the end of the day, choosing the right way to tokenize is a bit of a balancing act. You’re trading off the size of your AI’s dictionary against how much text it has to read, and for now, subword tokenization seems to be the sweet spot for most of today’s impressive AI systems.

How Tokenization Shows Up in Your Daily Life

Okay, let’s pull this whole idea out of the theory and see where it actually pops up. Because tokenization isn’t some dusty concept from a computer science textbook… it’s quietly working in the background of the apps you use every single day.

It’s the invisible glue making so much of modern tech feel intuitive and, well, smart.

You’ve probably already used it dozens of times today without even thinking about it.

From Your Search Bar to Your Streaming Queue

Ever typed a messy, half finished question into Google and been amazed that it knew exactly what you meant? That’s tokenization. It grabs your jumble of words, chops them into meaningful tokens, and uses them to figure out what you’re really trying to ask. It’s that critical first step that lets it search through billions of web pages to find the perfect answer.

A similar thing is happening when you get a surprisingly good movie recommendation.

Streaming services are powered by AI models that have tokenized countless movie reviews, plot summaries, and even dialogue from subtitles. By breaking all that text down into manageable chunks, the system can spot tiny patterns and connections… which helps it predict what you might want to watch next. It’s not magic. Just very clever text analysis.

Here are a few other places it’s working hard behind the scenes:

- Customer Service Chatbots: When you type out your problem in a chat window, tokenization helps the bot pull your sentences apart to figure out the core issue and find the right help article.

- Email Spam Filters: Your inbox is constantly tokenizing the content of incoming emails to spot the classic words and phrases that scream “spam” or “phishing attack”.

- Language Translation Apps: These tools work by instantly tokenizing the phrase you’ve typed, figuring out its structure, and then putting together a new sentence in the other language, token by token.

The Australian Business Connection

This isn’t just a global tech thing; it’s a massive deal right here in Australia, especially in places like finance. Local banks and financial institutions are using AI to comb through huge reports and customer messages, all to spot potential fraud or identify new market trends.

And this use of AI is only speeding up. A recent study found that 72% of Australian financial organisations are now using AI technologies that rely on understanding language… a process where tokenization is always the first step. The interesting part is that even with so many companies using it, there’s still a bit of a trust gap. It shows how important it is for people to actually understand how this stuff works. You can read the full study on AI trust and adoption in Australia to see the data for yourself.

From your search history to your bank’s security, tokenization is the simple, powerful idea that lets AI make sense of our world. It’s the essential first step that turns our messy human language into structured data a machine can actually use.

So, the next time you get that perfect search result or a helpful answer from a chatbot, you’ll know the first thing that happened was a little bit of digital chopping and sorting. That’s what tokenization in AI is all about.

The Evolving Future of Tokenization

So, we’ve talked a lot about how tokenization breaks down language for AI. But the really cool part? The basic idea isn’t just for text anymore. This core concept of slicing up something big and complicated into small, machine friendly chunks is now showing up in some seriously exciting new areas.

Just step back from language for a second. What about a photo? Or a song? The same logic applies. An AI can learn to ‘tokenize’ an image by splitting it into a grid of tiny patches, or an audio clip by chopping it into short little bits of sound. Each of these pieces becomes a token, a building block the AI can use to understand the whole thing.

And this is where it starts to get really interesting.

More Than Just Words on a Page

We’re now seeing this idea of tokenization stretch into completely new areas, especially finance. Picture this: you take a real-world asset, like the deed to a house or a share in a company, and you create a unique, secure digital version of it. That digital version is a token.

This isn’t some sci fi idea from the future… it’s already happening right here in Australia.

The real shift is seeing tokenisation move from representing abstract things like words to representing real, tangible value. It’s blurring the line between the physical and digital worlds in a way we’ve never really seen before.

This is the new frontier where AI and modern finance are starting to meet, creating completely new ways to manage and trade assets. A great example is Project Acacia, a team effort between the Reserve Bank of Australia and several big financial players.

They’re looking at how to turn things like fixed income assets and trade deals into digital tokens. The project is looking at 19 different ways banks like ANZ and Westpac can run actual transactions with these tokenised assets. We’re a long way from just processing text now. You can read more about Australia’s tokenised asset pilot to get a sense of just how far this idea has come.

This evolution just proves that tokenization is one of the most basic and flexible ideas in the AI toolkit. It’s a simple but powerful idea: break things down to understand them. And we’re only just beginning to see where it can go.

Common Questions About Tokenization

Alright, we’ve covered a lot. It’s totally normal if you’ve still got a few questions buzzing around. That’s what happens when you start digging into how AI really works… one answer just leads to three new questions. It’s a rabbit hole.

So, let’s wrap this up by hitting some of the most common ones that come up. I’ll keep the answers simple, just like we’re chatting. This is all about clearing up any last bits of confusion so you can walk away feeling like you’ve really got a handle on this.

Ready? Let’s do it.

Is Tokenization the Same Thing as Encryption?

This is a big one, and it’s a great question. The short answer is no. They’re completely different things with completely different jobs.

Encryption is all about security. Its only goal is to scramble data so that only someone with the right key can unscramble it and read it. Think of it like putting a letter in a locked box. The content is still in there, it’s just hidden from anyone who shouldn’t see it.

Tokenization, on the other hand, is all about meaning and structure for an AI. It breaks language down into useful pieces so a machine can analyse it. It’s not trying to hide anything… it’s actually trying to reveal the patterns in the language. They both work with data, but for totally different reasons.

Does the Choice of Tokenizer Really Matter?

Yep. It matters a whole lot. You could almost think of the tokenizer as the pair of glasses an AI wears to see the world.

Choosing the wrong one is like giving the AI a blurry or distorted view of language. If a tokenizer splits words in a really weird, unnatural way, it can make it much harder for the model to learn how concepts relate to each other. This can directly mess with its performance, leading to less accurate answers, strangely worded sentences, or just a total failure to get what you’re asking.

The choice of tokenizer fundamentally shapes how an AI model sees language. A good one can seriously boost performance, while a bad one can hold the whole system back, no matter how powerful the rest of the model is.

Getting it right is a huge step in building a smart and effective AI. It’s one of those foundational decisions that sends ripples all the way through to the final result.

How Do AI Models Handle Languages Without Spaces?

This is where tokenization gets really clever. In English, we have a big advantage: spaces. They give us obvious places to cut words apart. But what about languages like Chinese, Japanese, or Thai, where words often just flow into each other without any clear breaks?

This is the perfect job for subword tokenization.

Instead of looking for spaces, these advanced tokenizers are trained to find the most common strings of characters or syllables in that language. For example, a tokenizer for Japanese would learn to see common particles and kanji radicals as their own tokens, even if there aren’t any spaces around them. It learns the statistical patterns of a language to figure out the most logical places to make a cut.

It’s a really elegant solution to a tricky problem, and it’s what allows these big AI models to work so well across so many different languages, no matter how they’re structured. It just goes to show how flexible the core idea of what is tokenization in AI can really be.

At Osher Digital, we get that the details matter. From choosing the right tokenization strategy to building custom AI agents that solve real business problems, we focus on creating solutions that are not just powerful but perfectly matched to what you actually need. If you’re ready to move past the theory and see how AI-driven automation can transform your business, let’s have a chat.

Jump to a section

Ready to streamline your operations?

Get in touch for a free consultation to see how we can streamline your operations and increase your productivity.