What Is RAG in AI?

Learn what RAG is, how it works, and why it matters, with its main applications explained in plain language.

So, you’ve heard the acronym RAG floating around and you’re thinking, what is it, really?

In the simplest terms, Retrieval-Augmented Generation (RAG) is a clever technique that lets an AI model look things up in a specific, trusted knowledge base before it answers your question. It greatly reduces the AI’s tendency to make stuff up by having it draw on up-to-date, factual information that you control.

Let’s Talk About RAG Without the Tech Jargon

Alright, let’s cut through all the noise. Forget the dense diagrams and buzzwords for a moment. Let’s just have a real chat about what RAG actually means for you, like we’re grabbing a coffee.

At its heart, RAG is a way to make AI… well, smarter and a whole lot more reliable. Think of a standard Large Language Model (LLM) as a brilliant uni student who crammed like crazy for last year’s exams. They know a lot. An incredible amount, actually. But all their knowledge is fundamentally frozen in time, based on whatever textbooks and notes they studied back then.

The Open-Book Test Analogy

Now, imagine giving that same brilliant student an open book for their next test.

But it’s not just any old book. It’s a perfectly organised folder, full of the latest facts, your company’s private documents, and up-to-the-minute reports. Everything they need to know… is right there.

This is exactly what RAG does for an AI.

When you ask a question, the AI doesn’t just dive into its old, static memory. Nope. First, it does a super-fast search through the specific information you’ve given it access to. It finds the most relevant little snippets of text, kind of like highlighting the key paragraphs in a textbook right before you write your answer.

Only then does it start crafting its response, using that fresh, reliable info as its foundation.

The real magic here is the shift from remembering to knowing. A standard AI remembers its training data, but a RAG-powered AI knows the answer because it just looked it up from a source you trust.

This whole process dramatically changes the quality and reliability of what the AI spits out. It’s also a big part of what makes generative AI genuinely useful for business, because the RAG approach tackles some of the biggest frustrations people have with AI right now.

- Hallucinations: You know, that fancy industry term for when an AI just confidently makes things up. RAG seriously cuts this down by grounding the AI in actual facts from your documents.

- Outdated Information: A normal AI’s knowledge has an expiry date. RAG lets it draw on information you can update at any time, so its answers stay current and relevant.

- Lack of Specificity: You can feed a RAG system your internal company policies or detailed product manuals. This means it can answer super specific questions that a general AI would have absolutely no clue about.

Ultimately, RAG isn’t about replacing the AI’s brain. It’s about giving it the perfect cheat sheet before every single question. It turns the AI from a creative storyteller into a trustworthy, specialist assistant. Which is a total game-changer if you’re using AI in a professional setting.

How RAG Works Under the Hood

Alright, so we’ve established that RAG is like giving an AI an open-book test. But what’s actually happening behind the curtain? How does the model get the book, find the right page, and then use it to answer your question?

It’s basically a two-step dance. And it’s more intuitive than it sounds. Think of it less like complex computer science and more like a very efficient workflow.

The Two-Step Process: Retrieval Then Generation

First up, when you ask a question, your query doesn’t go straight to the creative, sentence-building part of the Large Language Model (LLM). Instead, it takes a crucial detour.

Your query is sent to a component called the ‘retriever’. You can think of this as a lightning-fast research assistant. Its only job is to scan a specific, trusted knowledge base you’ve provided. This could be your company’s internal wiki, a library of product manuals, or thousands of past customer support tickets.

The retriever’s task is to find the passages of text that are most relevant to your query. Modern retrievers usually do this by comparing numerical representations of meaning (called embeddings), so they can match the meaning behind your question, not just its keywords. This first step is all about finding the raw materials.
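If you’re curious what a retriever actually does, here’s a deliberately tiny sketch in Python. It’s a toy, not a production retriever: it scores each document by word overlap (bag-of-words cosine similarity), whereas real systems usually compare embeddings from a trained model so they can match meaning rather than exact words. The sample documents and the function names (`vectorise`, `cosine_similarity`, `retrieve`) are made up for illustration.

```python
import math
import re
from collections import Counter

# Made-up knowledge base. In a real system these would be chunks of your
# manuals, wiki pages or support tickets.
DOCUMENTS = [
    "Refunds are available within 30 days of purchase with a valid receipt.",
    "Our support team is available Monday to Friday, 9am to 5pm AEST.",
    "The X200 router can be reset by holding the power button for ten seconds.",
]


def vectorise(text: str) -> Counter:
    """Turn text into word counts. A stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Similarity of two word-count vectors: 0 = nothing shared, 1 = identical."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Rank documents by similarity to the query and return the top_k."""
    query_vec = vectorise(query)
    ranked = sorted(
        documents,
        key=lambda doc: cosine_similarity(query_vec, vectorise(doc)),
        reverse=True,
    )
    return ranked[:top_k]


print(retrieve("How do I reset my router?", DOCUMENTS, top_k=1))
# → ['The X200 router can be reset by holding the power button for ten seconds.']
```

Swapping `vectorise` for a real embedding model is the main upgrade a production system would make; the “rank everything, keep the top few” logic stays much the same.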

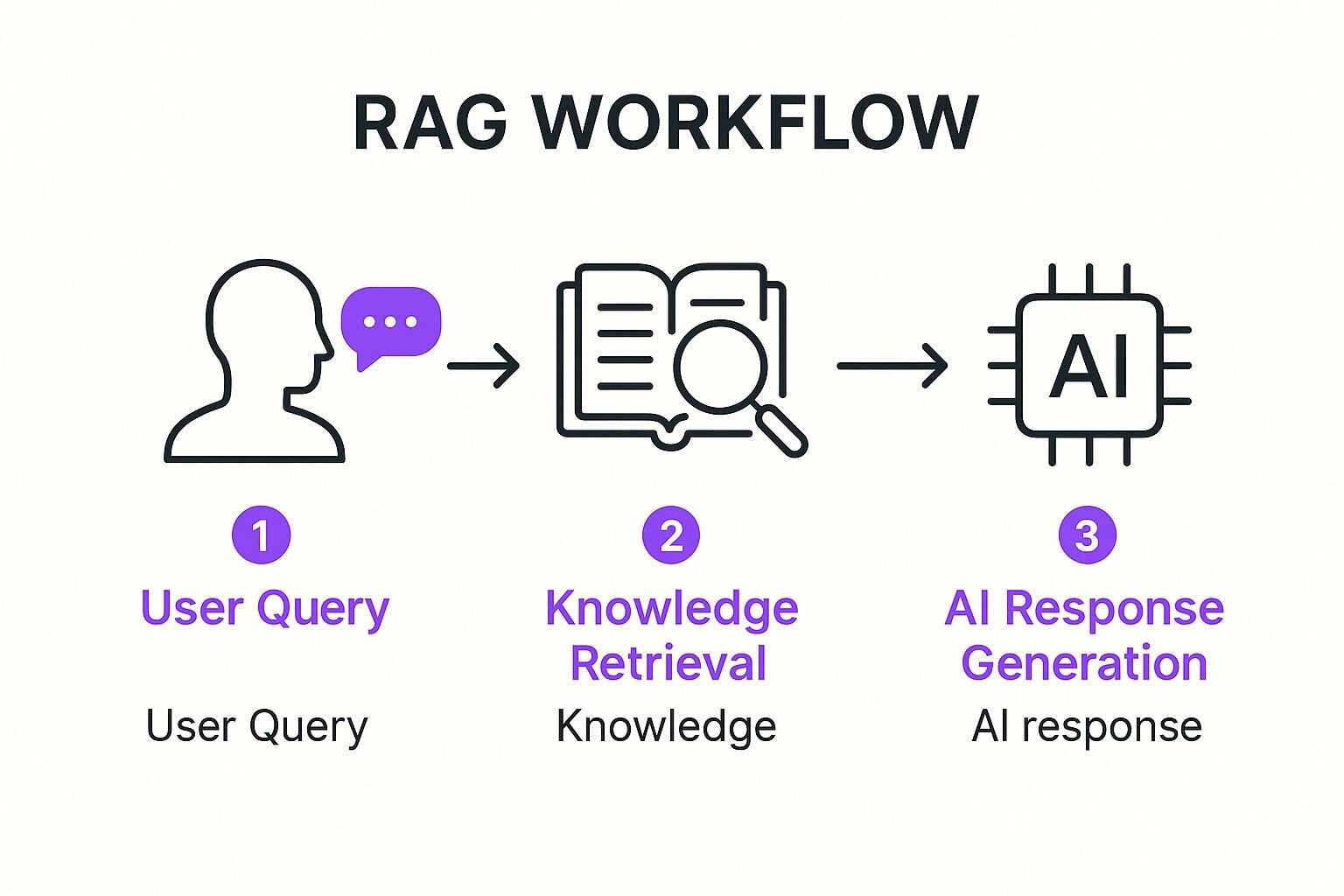

This simple infographic below shows how that process flows from your query to the AI’s final answer.

As you can see, the key is separating the ‘finding’ from the ‘answering’. It’s this separation that makes the final response so much more reliable.

The Magic of Generation

Now for the second step. This is where the real magic happens.

The system bundles your original question together with the relevant context the retriever just found. Then it hands this entire package over to the LLM… the part of the AI that’s great at writing, summarising, and explaining things in a human-like way.

At this point, the LLM isn’t just winging it based on its vast, but generic, training data. It has the facts right there in front of it. Fresh. And totally relevant to your specific query.
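Here’s a rough sketch of that bundling step, assuming the retriever has already returned a list of text snippets. The `build_prompt` function and the exact instruction wording are illustrative assumptions, not a standard; numbering the snippets is one common way to let the model point back to its sources.

```python
def build_prompt(question: str, snippets: list[str]) -> str:
    """Bundle the retrieved snippets and the user's question into a single prompt.

    Snippets are numbered so the model can cite them, e.g. "[1]".
    """
    context = "\n".join(f"[{i}] {snippet}" for i, snippet in enumerate(snippets, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context doesn't contain the answer, say you don't know. "
        "Cite the numbered sources you used.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


# Made-up snippets, as if just returned by the retriever.
snippets = [
    "Refunds are available within 30 days of purchase with a valid receipt.",
    "After 30 days, store credit may be offered instead.",
]
print(build_prompt("Can I get a refund three weeks after buying?", snippets))
```

The instruction to say “I don’t know” when the context is silent is a simple guard against the model falling back on guesswork, though it reduces that risk rather than eliminating it.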

It’s the difference between asking a chef to cook a dish from memory versus asking them to cook it with the recipe book wide open on the counter. The result is going to be far more accurate and consistent.

This ‘retrieve-then-generate’ process is what makes RAG so powerful. It’s an elegant way to reduce AI “hallucinations” because it makes the model ground its response in real, verifiable information that you control.

This improved reliability is likely part of why AI adoption is growing so quickly in Australia. Recent data shows 49% of Australians now use generative AI, up from 38% the previous year. As technologies like RAG make AI more useful for practical tasks where accuracy is everything, people are getting more comfortable relying on it.

Frameworks and tools are also making this process easier to set up. LangChain, for instance, is a popular framework that helps developers connect the different components (the retriever, your data, and the LLM) into a single, smooth workflow. It’s all about creating a reliable pipeline from question to trustworthy answer.
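To show the shape of that pipeline without tying it to any particular framework’s API, here’s a plain-Python sketch. It is not LangChain code; `RagPipeline`, `keyword_retriever` and `stub_llm` are hypothetical names, the keyword matcher is a crude stand-in for a real retriever, and the stub stands in for a real LLM call.

```python
import re
from dataclasses import dataclass
from typing import Callable

# Hypothetical sample knowledge base.
DOCS = [
    "Expense reports are submitted through the finance portal.",
    "Annual leave requests go to your manager for approval.",
]


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def keyword_retriever(query: str) -> list[str]:
    """Hypothetical retriever: returns documents sharing at least one word with the query."""
    query_words = tokens(query)
    return [doc for doc in DOCS if query_words & tokens(doc)]


def stub_llm(prompt: str) -> str:
    """Stand-in for a real LLM call; a real system would send `prompt` to a model."""
    return f"(model answer based on a {len(prompt)}-character prompt)"


@dataclass
class RagPipeline:
    retriever: Callable[[str], list[str]]  # step 1: find relevant snippets
    generator: Callable[[str], str]        # step 2: write the answer

    def answer(self, question: str) -> dict:
        snippets = self.retriever(question)
        context = "\n".join(f"[{i}] {s}" for i, s in enumerate(snippets, start=1))
        prompt = f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
        # Returning the snippets alongside the answer is what makes citing sources possible.
        return {"answer": self.generator(prompt), "sources": snippets}


pipeline = RagPipeline(retriever=keyword_retriever, generator=stub_llm)
result = pipeline.answer("How do I submit an expense report?")
print(result["sources"])  # → ['Expense reports are submitted through the finance portal.']
```

Because the retriever and generator are simply plugged in, you could swap the keyword matcher for a vector database search, or the stub for a real model, without touching the pipeline itself. That kind of plumbing is broadly what frameworks like LangChain help with.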

Why RAG Is a Must-Have for Modern AI

So, the theory behind RAG sounds pretty smart. But let’s cut to the chase: what’s the real-world payoff? Why are so many businesses genuinely excited about RAG and what it can do for them?

It all boils down to solving some of the most common… and frankly, frustrating… problems we’ve all hit with standard AI models. The goal is to build trust and turn AI into a reliable tool you can actually count on for important work.

Cutting Down AI “Hallucinations”

The single biggest win with RAG is accuracy. You’ve almost certainly seen it happen: you ask an AI a question, and it gives you a beautifully written, confident-sounding answer that is completely, utterly wrong. These are called ‘hallucinations,’ and they’re the biggest barrier to trusting AI with serious tasks.

RAG dramatically cuts down the chances of this happening. Because the AI is told to base its answers on real data you provide, it’s far less likely to invent things on the fly. It’s grounded in your reality, not just the vast, sometimes messy world of its training data.

Think of it like this: it’s the difference between an employee who guesses the answer to a client’s question versus one who says, “Let me quickly check our official documentation for you.” You trust the second person infinitely more, and that’s precisely the kind of reliability RAG brings to the table.

This foundational benefit is what makes everything else possible.

Keeping Your AI’s Knowledge Fresh and Current

Another massive advantage is how RAG keeps your information up to date. A standard Large Language Model is like a snapshot in time; its knowledge is locked to the moment it was last trained. Teaching it something new means going through a complex and incredibly expensive retraining process.

With RAG, it’s so much simpler. New company policy? A product just launched? You just add the new documents to your knowledge base.

That’s it. Once the new documents are indexed, the AI can use that information straight away. This agility is a game-changer because your business doesn’t stand still, and neither should your AI’s knowledge. RAG offers a far more efficient way to embed specific knowledge, and a powerful alternative to the complexity of training large language models on custom data.
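As a rough illustration of why no retraining is needed, here’s a hypothetical in-memory knowledge base in Python. Real systems would typically store embeddings in a vector database, but the principle is the same: adding a document makes it searchable immediately, and the language model itself never changes. The `KnowledgeBase` class, its simple word-match search and the sample documents are all assumptions for illustration.

```python
import re


class KnowledgeBase:
    """Hypothetical in-memory knowledge base; documents can be added at any time."""

    def __init__(self) -> None:
        self.documents: dict[str, str] = {}  # document id -> text

    def add(self, doc_id: str, text: str) -> None:
        # Adding (or replacing) a document is all it takes to "teach" the system.
        # The language model itself is never retrained.
        self.documents[doc_id] = text

    def search(self, query: str) -> list[str]:
        """Return ids of documents sharing at least one word with the query (toy matching)."""
        query_words = set(re.findall(r"[a-z0-9]+", query.lower()))
        return [
            doc_id
            for doc_id, text in self.documents.items()
            if query_words & set(re.findall(r"[a-z0-9]+", text.lower()))
        ]


kb = KnowledgeBase()
kb.add("policy-2023", "Staff may work from home two days a week.")
print(kb.search("Model Z warranty"))  # → []
kb.add("product-model-z", "The Model Z kettle launched this month with a two-year warranty.")
print(kb.search("Model Z warranty"))  # → ['product-model-z']
```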

Protecting Your Private Data

This one is huge for any organisation. When you use a public AI tool, there’s always a nagging concern about where your data is going. RAG offers a much more secure way forward.

You aren’t changing the underlying AI model at all. Your documents stay in your own system, and only the snippets relevant to a specific question are passed to the model as context. The model uses that context to answer, but doesn’t absorb it into its core knowledge. If the model is hosted by a third party, those snippets do still leave your environment, so it’s worth checking the provider’s data-handling terms, or running the model in-house for your most sensitive material.

This helps address major privacy concerns, making it feasible to use powerful AI with sensitive internal information.

When you put the two approaches side-by-side, the benefits of RAG become crystal clear.

Standard LLM vs RAG-Powered LLM

This table gives a direct comparison, showing the practical advantages of using a RAG system over a standard Large Language Model.

| Feature | Standard LLM (Without RAG) | LLM with RAG |
|---|---|---|
| Accuracy | Prone to making up facts (“hallucinating”) when it doesn’t know the answer. | Higher, as answers are grounded in specific, verifiable documents you provide. |
| Data Freshness | Knowledge is static and becomes outdated. Updating requires costly retraining. | As current as your source documents. Update them, and the AI can use the new info. |
| Data Privacy | Can expose sensitive queries and data to third-party model providers. | Better. Your knowledge base stays in your secure environment; only the snippets relevant to each question are passed to the model. |
| Transparency | Acts like a “black box”. You don’t know why it gave a certain answer. | Can often cite the exact source documents it used, providing auditable answers. |

Ultimately, RAG isn’t just a technical upgrade. It’s a fundamental shift that makes AI more practical, trustworthy, and secure for real business applications. It helps AI graduate from a fascinating novelty to an indispensable and reliable part of your team.

RAG in Action: Practical Use Cases

Alright, theory is one thing, but where does the rubber actually meet the road? Let’s talk about how RAG is being used right now to solve real problems for real businesses.

It’s easy to dismiss new tech as just another buzzword. We’ve all seen them come and go. But RAG feels different because its applications are so grounded and practical.

A New Era for Customer Service

Take customer service, for instance. We’ve all dealt with a frustrating chatbot that just doesn’t get it. It spits out generic answers or, even worse, sends you in circles. A RAG-powered chatbot, on the other hand, can be a complete game-changer.

Imagine connecting a chatbot directly to your entire knowledge base… product manuals, past support tickets, company policies, the lot. Suddenly, when a customer asks a highly specific question about a feature on a three-year-old product, the bot doesn’t have to guess.

It finds the right paragraph in the right manual and gives an accurate answer in seconds. This isn’t just about keeping customers happy. It’s about freeing up your human support team to tackle the truly complex, high-touch issues that need their expertise.

Unlocking Insights in Healthcare and Professional Services

In highly specialised fields, the impact is even more profound. Think about healthcare. Doctors and researchers are constantly navigating a tidal wave of new information from medical journals, clinical trials, and patient histories. It’s simply impossible for any one person to keep up.

Imagine a doctor asking, “What are the latest non-invasive treatment options for this specific patient profile?” and getting a synthesised answer drawn from the most current research papers. Tools like this are already being explored, with the aim of supporting faster, better-informed decisions and, ultimately, better patient outcomes.

This is an area where Australia has seen significant progress. In fact, healthcare is one of the leading adopters of retrieval-augmented generation, with one market estimate putting the healthcare segment’s share of the RAG market at about 36.61%. Healthcare organisations are using RAG systems to make sense of huge volumes of medical data, which is vital for evidence-based decision-making.

But it’s not just healthcare. This same principle applies right across the board:

- Legal Teams: Instead of spending days sifting through case law, a lawyer can find relevant precedents in minutes.

- Financial Analysts: They can query years of market data and financial reports to spot trends a human might miss.

- Internal Helpdesks: An employee can ask, “How do I submit an expense report for international travel?” and get a precise answer based on the company’s latest HR policies.

Powering Smarter Content and Marketing

And then there’s the world of content. How do you create articles, reports, or marketing copy that is not only well-written but also factually accurate and reflects your brand’s unique voice?

This is where RAG becomes a secret weapon. It allows a business to ground its AI-generated content in its own case studies, white papers, and research. That makes RAG a powerful part of today’s AI tools for content marketing, providing the ground truth that turns generic text into genuinely valuable assets.

The core idea is simple but powerful. You’re not just asking an AI to write about a topic; you’re asking it to write about that topic using your specific knowledge and data as the source material.

These examples show that RAG isn’t some futuristic, abstract concept. It’s a practical tool being used today to solve tangible business problems, making AI more accurate, more helpful, and ultimately, more trustworthy.

How to Prepare Your Business for RAG

So, you’re sold on the idea. You’re thinking, “Okay, this RAG thing sounds genuinely useful. How do we get started?” It’s a great question, and it’s where the real work begins. Moving from simply experimenting with a public AI tool to building a proper RAG solution for your business is a pretty significant leap.

This isn’t a deeply technical guide. Think of it more like a strategic chat, as if we’re mapping this out on a whiteboard together.

The single most critical first step has nothing to do with fancy AI models. It’s all about getting your own house in order. Specifically, your knowledge base.

The Unavoidable Truth of Data Hygiene

Let’s be honest. For most businesses, internal documentation is… a bit of a mess. It’s often scattered across different drives, riddled with outdated policies, and likely has contradictory information lurking in a forgotten folder somewhere.

A RAG system is only as good as the information you feed it. That’s the golden rule.

If your knowledge base is messy, your AI will spit out messy, unreliable answers. The old saying ‘garbage in, garbage out’ has never been more true. Before you even think about platforms or models, you need to focus on what we call data hygiene.

Think of it like cooking. You can have the best chef in the world (the AI model), but if you give them expired ingredients and a dirty kitchen (your data), you’re still going to get a terrible meal. The preparation is everything.

This means you’ll need to:

- Centralise your knowledge: Pull all your important documents, manuals, and policies into one structured, accessible place.

- Clean and update everything: This is the tedious but essential part. It involves archiving old files, updating procedures, and ensuring there’s one single source of truth for key information.

- Establish a process for the future: How will you keep this data clean and current moving forward? Who is responsible for updating it?
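Part of the clean-up step can be scripted. Below is a small, hypothetical Python sketch that keeps only the newest version of each document (matching titles case-insensitively) and sets aside anything not updated since a cut-off date. The `Document` fields, the title-matching rule and the cut-off are assumptions; in practice you’d want a person to review what gets archived rather than trusting a date alone.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Document:
    """Hypothetical document record; your real metadata will look different."""
    title: str
    text: str
    last_updated: date


def clean_corpus(documents: list[Document], stale_before: date) -> list[Document]:
    """Keep the newest version of each title, then drop anything not updated since stale_before."""
    newest: dict[str, Document] = {}
    for doc in documents:
        # Normalise titles so "Leave Policy" and "leave policy " count as one document.
        key = doc.title.strip().lower()
        if key not in newest or doc.last_updated > newest[key].last_updated:
            newest[key] = doc
    # Anything older than the cut-off is a candidate for archiving, not indexing.
    return [doc for doc in newest.values() if doc.last_updated >= stale_before]


docs = [
    Document("Leave Policy", "Old wording...", date(2019, 5, 1)),
    Document("leave policy ", "Current wording...", date(2024, 2, 1)),
    Document("Fax Procedures", "How to send a fax.", date(2015, 1, 1)),
]
cleaned = clean_corpus(docs, stale_before=date(2020, 1, 1))
print([d.text for d in cleaned])  # → ['Current wording...']
```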

This initial clean-up is the absolute foundation of your entire RAG project. We have a detailed guide on preparing your business for LLM-based search that dives deeper into this crucial groundwork.

Getting the Right Team and Tools in Place

Once your data is in good shape, you can start thinking about the technology and the people. You don’t necessarily need a team of AI researchers, but you do need people with the right skills to manage the system. This often includes someone who understands data pipelines and someone who can monitor the AI’s performance and tune the system over time.

You’ll also need to select the right platforms. This could involve choosing a vector database to store your prepared knowledge and picking the frameworks that connect everything together.

It’s a big shift from just using AI to strategically managing it, and it’s a journey many Australian organisations are on right now. A recent survey of IT managers found that 83% of respondents from Australian organisations were actively involved in AI management.

However, that same survey highlighted a sense of caution, noting challenges with engagement and a growing awareness of the infrastructure and energy demands of AI. It’s a useful reminder that this isn’t just a switch you can flip; it’s a strategic investment that requires proper planning for infrastructure, costs, and sustainable growth.

The Future Is Augmented, Not Replaced

So, where does this all leave us? It’s easy to get caught up in the whirlwind of AI hype, with sensational headlines suggesting a complete overhaul of work as we know it.

But the rise of RAG points to a far more practical… and ultimately, more powerful… way forward.

This isn’t about AI replacing human experts. That’s a narrative straight out of science fiction. The reality is about augmenting their skills and giving them capabilities they never had before.

A Partner, Not a Replacement

Think of it like this: RAG-powered AI acts as the ultimate research assistant. It takes on the most mind-numbing part of any knowledge-based role… the painstaking search for the right piece of information… and does it in an instant. This frees up human professionals to double down on what they do best.

- Critical thinking

- Strategic decision-making

- Creative problem-solving

- Connecting disparate ideas in novel ways

The AI becomes a partner with a perfect memory, capable of finding the needle in any haystack you throw at it. But you’re still the expert who knows what to do with that needle once it’s found. This is more than just another tech trend; it’s a fundamental shift in how we work with information.

RAG helps us move toward AI that works with us, not just for us. It becomes a reliable colleague grounded in the facts you provide, making it a trustworthy source in a way general-purpose models often are not.

This human-AI collaboration is where the real value is unlocked.

As we look ahead, this partnership is only going to get deeper. The technology will improve its ability to understand not just the words in your documents, but the complex relationships and nuances between different concepts. Imagine an AI that doesn’t just pull up a sales contract but also flags a potential conflict with a clause in a compliance document from three years ago.

That’s the direction we’re headed. We’re steering away from the notion of an all-knowing, independent AI and toward building focused, accurate, and grounded systems that amplify our own intelligence. The most effective approach to complex challenges won’t be human intelligence alone or artificial intelligence alone.

It will always be the two working together.

Common Questions About RAG Answered

We’ve certainly covered a lot of ground. It’s completely normal if you still have a few questions buzzing around… when you first start digging into what RAG really is, some of the concepts can feel a bit abstract.

So, let’s clear up a few of the most common queries people have.

Is RAG the Same as Fine-Tuning an AI Model?

That’s a great question, and getting the distinction right is crucial. Both are methods for making an AI model more knowledgeable about a specific subject, but they go about it in fundamentally different ways.

Think of fine-tuning like sending an expert to university for a year to earn a new degree. It’s an intensive training process that permanently adjusts the model’s internal parameters (its weights), which is where its core “knowledge” lives. This approach is powerful but also tends to be slow, expensive, and a major project to update.

RAG, on the other hand, is more like giving that same expert access to a perfectly curated library and letting them consult it for an open-book exam. The model’s core intelligence doesn’t change. Instead, it’s given the exact information it needs, right when it needs it. This makes RAG faster, much more cost-effective, and far simpler to keep current.

Do I Need to Be a Developer to Use RAG?

Not necessarily, and that’s what makes this technology so promising for businesses.

While building a RAG system from scratch is absolutely a technical task for developers, a new wave of platforms is making this capability far more accessible. It’s a bit like how website builders evolved. Twenty years ago, you needed to be a coder to build a website; today, tools like Squarespace or Wix empower anyone to create something professional.

Many modern RAG-powered applications now offer simple, user-friendly interfaces that let you connect your own data sources… like a company Google Drive, SharePoint, or Confluence… with just a few clicks. So, while developers are building the engine, almost anyone in the business can learn to drive the car.

The biggest challenge usually isn’t the technology anymore; it’s the data.

What Is the Biggest Challenge in Implementing RAG?

This leads directly to the most significant hurdle, and it’s almost never the AI itself. The real challenge is the quality and organisation of your source information.

It’s pretty simple, really. If your internal knowledge base is a tangled mess of outdated documents, contradictory policies, and files scattered across ten different systems, the AI will be hobbled from the start. It can’t produce clear, trustworthy answers if its source material is unreliable.

That’s why the most critical first step for any business is a thorough ‘data cleanup’ project. This involves:

- Organising: Consolidating essential knowledge into a central, structured repository.

- Updating: Archiving old files and ensuring all active documents reflect current information.

- Validating: Establishing a single, clear source of truth for key company facts and processes.

Getting this foundation right is everything. It’s the unglamorous but essential work that makes all the powerful AI capabilities possible.

Feeling ready to explore how AI-driven automation could work for your business? At Osher Digital, we specialise in creating custom AI solutions that connect to your unique business knowledge, turning your data into a powerful asset.

